Tools

Explore tools that support and accelerate TensorFlow workflows.

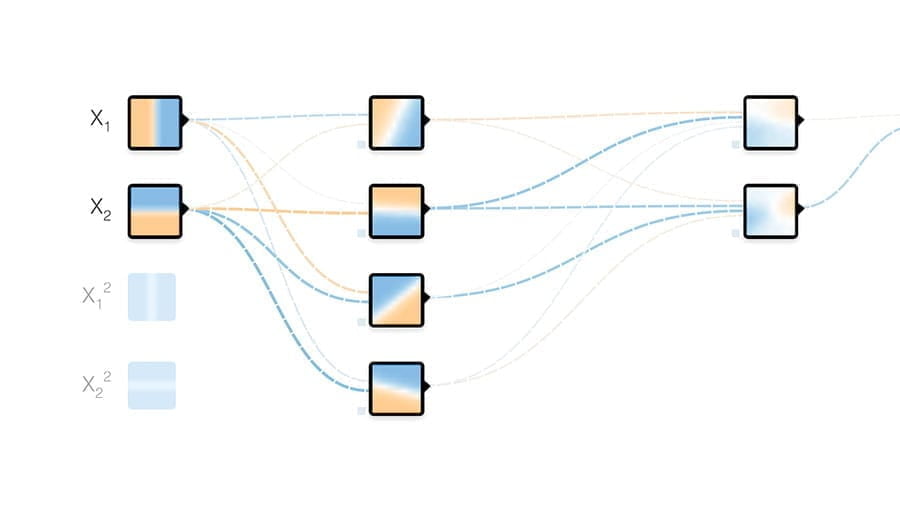

Visual Blocks

A visual coding web framework to prototype ML workflows using I/O devices, models, data augmentation, and even Colab code as reusable building blocks.

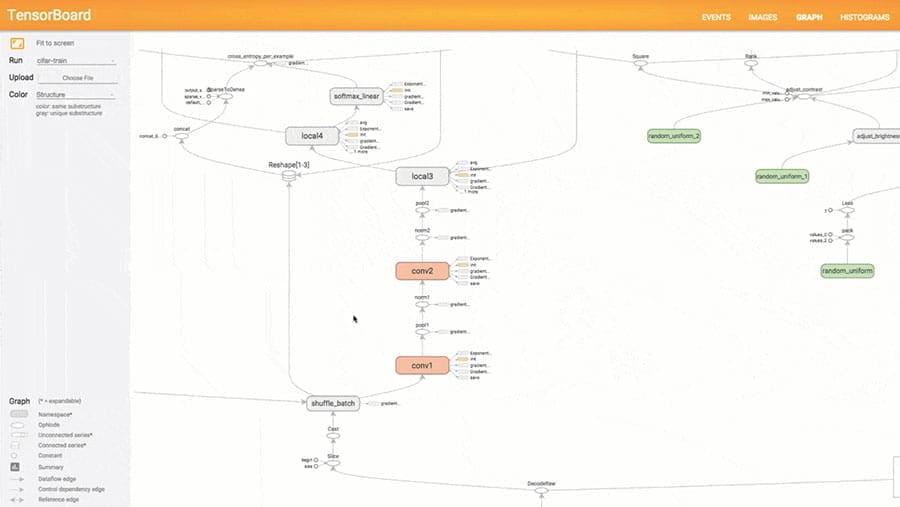

What-If Tool

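A tool for probing machine learning models without writing code, useful for understanding models, debugging them, and maintaining ML fairness. It is available in TensorBoard and in Jupyter/Colab notebooks.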

TPU Research Cloud

The TPU Research Cloud (TRC) program lets researchers apply for free access to a cluster of more than 1,000 Cloud TPUs to accelerate the next wave of research breakthroughs.