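This notebook teaches you how to train a pose classification model using MoveNet and TensorFlow Lite. The result is a new TensorFlow Lite model that accepts the output from the MoveNet model as its input, and outputs a pose classification, such as the name of a yoga pose.

The procedure in this notebook consists of 3 parts:

- Part 1: Preprocess the pose classification training data into a CSV file that specifies the landmarks (body keypoints) detected by the MoveNet model, along with the ground truth pose labels.

- Part 2: Build and train a pose classification model that takes the landmark coordinates from the CSV file as input, and outputs the predicted labels.

- Part 3: Convert the pose classification model to TFLite.

By default, this notebook uses an image dataset with labeled yoga poses, but Part 1 also includes a section where you can upload your own image dataset of poses.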


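Preparation

In this section, you'll import the necessary libraries and define several functions to preprocess the training images into a CSV file that contains the landmark coordinates and ground truth labels.

Nothing observable happens here, but you can expand the hidden code cells to see the implementation of some of the functions we'll be calling later on.

If you only want to create the CSV file without knowing all the details, just run this section and proceed to Part 1.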

!pip install -q opencv-python

import csv
import cv2
import itertools
import numpy as np
import pandas as pd
import os
import sys
import tempfile
import tqdm

from matplotlib import pyplot as plt
from matplotlib.collections import LineCollection

import tensorflow as tf
import tensorflow_hub as hub
from tensorflow import keras

from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix


Code to run pose estimation using MoveNet

Functions to run pose estimation with MoveNet

# Download model from TF Hub and check out inference code from GitHub
!wget -q -O movenet_thunder.tflite https://tfhub.dev/google/lite-model/movenet/singlepose/thunder/tflite/float16/4?lite-format=tflite
!git clone https://github.com/tensorflow/examples.git
pose_sample_rpi_path = os.path.join(os.getcwd(), 'examples/lite/examples/pose_estimation/raspberry_pi')
sys.path.append(pose_sample_rpi_path)

# Load MoveNet Thunder model
import utils
from data import BodyPart
from ml import Movenet
movenet = Movenet('movenet_thunder')

# Define function to run pose estimation using MoveNet Thunder.
# You'll apply MoveNet's cropping algorithm and run inference multiple times on
# the input image to improve pose estimation accuracy.
def detect(input_tensor, inference_count=3):
  """Runs detection on an input image.

  Args:
    input_tensor: A [height, width, 3] Tensor of type tf.float32.
      Note that height and width can be anything since the image will be
      immediately resized according to the needs of the model within this
      function.
    inference_count: Number of times the model should run repeatedly on the
      same input image to improve detection accuracy.

  Returns:
    A Person entity detected by the MoveNet.SinglePose.
  """
  image_height, image_width, channel = input_tensor.shape

  # Detect pose using the full input image. Keep the result so that `person`
  # is defined even when inference_count is 1.
  person = movenet.detect(input_tensor.numpy(), reset_crop_region=True)

  # Repeatedly use the previous detection result to identify the region of
  # interest, and crop only that region to improve detection accuracy.
  for _ in range(inference_count - 1):
    person = movenet.detect(input_tensor.numpy(),
                            reset_crop_region=False)

  return person
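The control flow of `detect()` (one full-image pass, then repeated passes on a crop derived from the previous result) can be sketched without MoveNet. The example below is only an illustration under stated assumptions: `crop_from_keypoints`, `detect_with_refinement`, and `fake_model` are hypothetical names, and the padded bounding box is a simplified stand-in, not MoveNet's actual cropping algorithm.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]               # normalized (x, y)
Box = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)
FULL_IMAGE: Box = (0.0, 0.0, 1.0, 1.0)


def crop_from_keypoints(points: List[Point], margin: float = 0.1) -> Box:
  """Bounding box around the keypoints, padded by `margin`, clipped to [0, 1].

  Simplified assumption for illustration; MoveNet's real crop logic differs.
  """
  xs = [p[0] for p in points]
  ys = [p[1] for p in points]
  return (max(0.0, min(xs) - margin), max(0.0, min(ys) - margin),
          min(1.0, max(xs) + margin), min(1.0, max(ys) + margin))


def detect_with_refinement(run_model: Callable[[Box], List[Point]],
                           inference_count: int = 3) -> List[Point]:
  """Runs the model once on the full image, then on refined crops."""
  crop = FULL_IMAGE
  points = run_model(crop)            # first pass always produces a result
  for _ in range(inference_count - 1):
    crop = crop_from_keypoints(points)
    points = run_model(crop)
  return points


# Hypothetical stand-in for a pose model: it records the crops it receives.
seen_crops: List[Box] = []

def fake_model(crop: Box) -> List[Point]:
  seen_crops.append(crop)
  return [(0.4, 0.3), (0.6, 0.8)]


result = detect_with_refinement(fake_model, inference_count=3)
print(len(seen_crops), seen_crops[0])
```

Because the first pass assigns its result, the sketch also returns a valid value when `inference_count` is 1.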


Functions to visualize the pose estimation results.

def draw_prediction_on_image(
    image, person, crop_region=None, close_figure=True,
    keep_input_size=False):
  """Draws the keypoint predictions on image.

  Args:
    image: A numpy array with shape [height, width, channel] representing the
      pixel values of the input image.
    person: A person entity returned from the MoveNet.SinglePose model.
    close_figure: Whether to close the plt figure after the function returns.
    keep_input_size: Whether to keep the size of the input image.

  Returns:
    A numpy array with shape [out_height, out_width, channel] representing the
    image overlaid with keypoint predictions.
  """
  # Draw the detection result on top of the image.
  image_np = utils.visualize(image, [person])

  # Plot the image with detection results.
  height, width, channel = image.shape
  aspect_ratio = float(width) / height
  fig, ax = plt.subplots(figsize=(12 * aspect_ratio, 12))
  im = ax.imshow(image_np)

  if close_figure:
    plt.close(fig)

  if not keep_input_size:
    image_np = utils.keep_aspect_ratio_resizer(image_np, (512, 512))

  return image_np

Code to load the images, detect pose landmarks and save them into a CSV file

class MoveNetPreprocessor(object):
  """Helper class to preprocess pose sample images for classification."""

  def __init__(self,
               images_in_folder,
               images_out_folder,
               csvs_out_path):
    """Creates a preprocessor to detect poses from images and save them as CSV.

    Args:
      images_in_folder: Path to the folder with the input images. It should
        follow this structure:
        yoga_poses
        |__ downdog
            |______ 00000128.jpg
            |______ 00000181.bmp
            |______ ...
        |__ goddess
            |______ 00000243.jpg
            |______ 00000306.jpg
            |______ ...
        ...
      images_out_folder: Path to write the images overlaid with detected
        landmarks. These images are useful when you need to debug accuracy
        issues.
      csvs_out_path: Path to write the CSV containing the detected landmark
        coordinates and label of each image that can be used to train a pose
        classification model.
    """
    self._images_in_folder = images_in_folder
    self._images_out_folder = images_out_folder
    self._csvs_out_path = csvs_out_path
    self._messages = []

    # Create a temp dir to store the pose CSVs per class
    self._csvs_out_folder_per_class = tempfile.mkdtemp()

    # Get the list of pose classes (one subfolder per class)
    self._pose_class_names = sorted(
        [n for n in os.listdir(self._images_in_folder) if not n.startswith('.')]
    )

  def process(self, per_pose_class_limit=None, detection_threshold=0.1):
    """Preprocesses images in the given folder.

    Args:
      per_pose_class_limit: Number of images to load per pose class. As
        preprocessing usually takes time, this parameter can be specified to
        reduce the size of the dataset for testing.
      detection_threshold: Only keep images whose landmark confidence scores
        are all at or above this threshold.
    """
    # Loop through the classes and preprocess their images
    for pose_class_name in self._pose_class_names:
      print('Preprocessing', pose_class_name, file=sys.stderr)

      # Paths for the pose class.
      images_in_folder = os.path.join(self._images_in_folder, pose_class_name)
      images_out_folder = os.path.join(self._images_out_folder, pose_class_name)
      csv_out_path = os.path.join(self._csvs_out_folder_per_class,
                                  pose_class_name + '.csv')
      if not os.path.exists(images_out_folder):
        os.makedirs(images_out_folder)

      # Detect landmarks in each image and write them to a CSV file
      with open(csv_out_path, 'w') as csv_out_file:
        csv_out_writer = csv.writer(csv_out_file,
                                    delimiter=',',
                                    quoting=csv.QUOTE_MINIMAL)
        # Get list of images
        image_names = sorted(
            [n for n in os.listdir(images_in_folder) if not n.startswith('.')])
        if per_pose_class_limit is not None:
          image_names = image_names[:per_pose_class_limit]

        valid_image_count = 0

        # Detect pose landmarks from each image
        for image_name in tqdm.tqdm(image_names):
          image_path = os.path.join(images_in_folder, image_name)

          # Read and decode the image once; skip files that fail to decode.
          try:
            image = tf.io.read_file(image_path)
            image = tf.io.decode_jpeg(image)
          except Exception:
            self._messages.append('Skipped ' + image_path + '. Invalid image.')
            continue
          image_height, image_width, channel = image.shape

          # Skip images that aren't RGB because MoveNet requires RGB images
          if channel != 3:
            self._messages.append('Skipped ' + image_path +
                                  '. Image isn\'t in RGB format.')
            continue
          person = detect(image)

          # Save landmarks only if every landmark meets the threshold
          min_landmark_score = min(
              [keypoint.score for keypoint in person.keypoints])
          should_keep_image = min_landmark_score >= detection_threshold
          if not should_keep_image:
            self._messages.append('Skipped ' + image_path +
                                  '. No pose was confidently detected.')
            continue

          valid_image_count += 1

          # Draw the prediction result on top of the image for debugging later
          output_overlay = draw_prediction_on_image(
              image.numpy().astype(np.uint8), person,
              close_figure=True, keep_input_size=True)

          # Write detection result into an image file
          output_frame = cv2.cvtColor(output_overlay, cv2.COLOR_RGB2BGR)
          cv2.imwrite(os.path.join(images_out_folder, image_name), output_frame)

          # Collect each landmark as (x, y, score)
          pose_landmarks = np.array(
              [[keypoint.coordinate.x, keypoint.coordinate.y, keypoint.score]
               for keypoint in person.keypoints],
              dtype=np.float32)

          # Write the landmark coordinates to the per-class CSV file.
          # `np.str` is a deprecated alias (NumPy 1.20), so use builtin `str`.
          coordinates = pose_landmarks.flatten().astype(str).tolist()
          csv_out_writer.writerow([image_name] + coordinates)

        if not valid_image_count:
          raise RuntimeError(
              'No valid images found for the "{}" class.'
              .format(pose_class_name))

    # Print the messages collected during preprocessing.
    print('\n'.join(self._messages))

    # Combine all per-class CSVs into a single output file
    all_landmarks_df = self._all_landmarks_as_dataframe()
    all_landmarks_df.to_csv(self._csvs_out_path, index=False)

  def class_names(self):
    """List of classes found in the training dataset."""
    return self._pose_class_names

  def _all_landmarks_as_dataframe(self):
    """Merge all per-class CSVs into a single dataframe."""
    total_df = None
    for class_index, class_name in enumerate(self._pose_class_names):
      csv_out_path = os.path.join(self._csvs_out_folder_per_class,
                                  class_name + '.csv')
      per_class_df = pd.read_csv(csv_out_path, header=None)

      # Add the labels
      per_class_df['class_no'] = [class_index]*len(per_class_df)
      per_class_df['class_name'] = [class_name]*len(per_class_df)

      # Prepend the folder name to the filename column (first column)
      per_class_df[per_class_df.columns[0]] = (os.path.join(class_name, '')
        + per_class_df[per_class_df.columns[0]].astype(str))

      if total_df is None:
        # For the first class, assign its data to the total dataframe
        total_df = per_class_df
      else:
        # Concatenate each class's data into the total dataframe
        total_df = pd.concat([total_df, per_class_df], axis=0)

    # Name the columns: file_name, then <BODY_PART>_x/_y/_score per landmark
    list_name = [[bodypart.name + '_x', bodypart.name + '_y',
                  bodypart.name + '_score'] for bodypart in BodyPart]
    header_name = []
    for columns_name in list_name:
      header_name += columns_name
    header_name = ['file_name'] + header_name
    header_map = {total_df.columns[i]: header_name[i]
                  for i in range(len(header_name))}

    total_df.rename(header_map, axis=1, inplace=True)

    return total_df
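The keep-or-skip rule and the row layout used by `process()` can be tried in isolation. The sketch below is a minimal illustration, assuming a hypothetical `Keypoint` dataclass as a stand-in for the keypoint objects returned by the sample repository; the helper names `keep_pose` and `to_csv_row` are also made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Keypoint:
  """Hypothetical stand-in for one detected landmark."""
  name: str
  x: float
  y: float
  score: float


def keep_pose(keypoints: List[Keypoint], detection_threshold: float = 0.1) -> bool:
  """An image is kept only if its weakest landmark meets the threshold."""
  return min(k.score for k in keypoints) >= detection_threshold


def to_csv_row(image_name: str, keypoints: List[Keypoint]) -> List[str]:
  """Flattens landmarks into [file name, x0, y0, score0, x1, y1, score1, ...]."""
  row = [image_name]
  for k in keypoints:
    row += [str(k.x), str(k.y), str(k.score)]
  return row


visible = [Keypoint('NOSE', 120.0, 80.0, 0.9), Keypoint('LEFT_EYE', 125.0, 75.0, 0.5)]
occluded = [Keypoint('NOSE', 120.0, 80.0, 0.9), Keypoint('LEFT_EYE', 125.0, 75.0, 0.05)]

print(keep_pose(visible))    # every landmark is at or above 0.1
print(keep_pose(occluded))   # one landmark is below 0.1, so the image is skipped
print(to_csv_row('a.jpg', visible))
```

A single low-confidence landmark is enough to drop an image, which is why partially occluded poses tend to show up in the list of skipped files.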

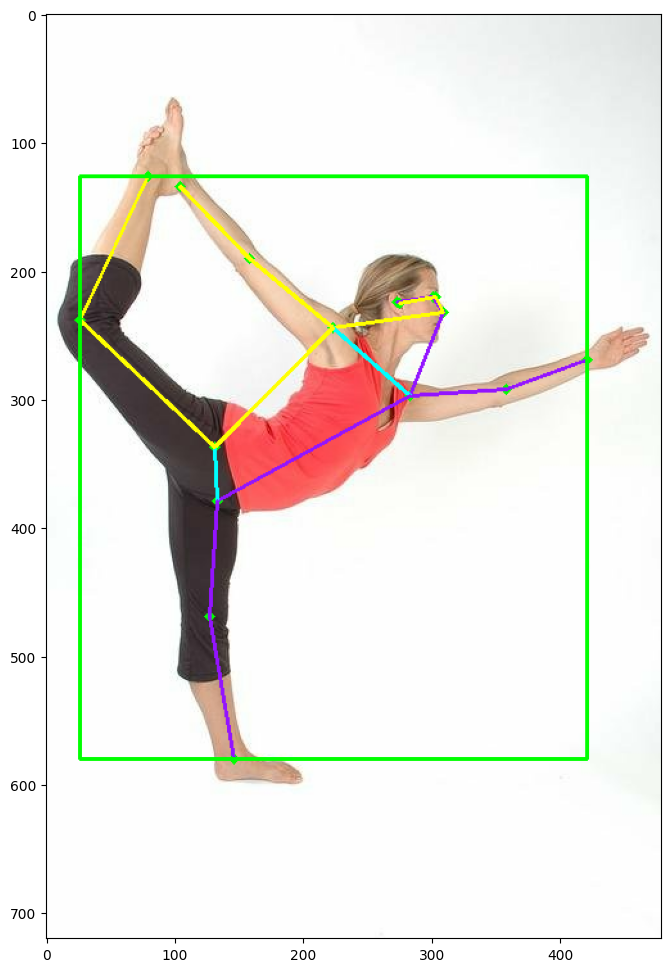

(Optional) Code snippet to try out the Movenet pose estimation logic

test_image_url = "https://cdn.pixabay.com/photo/2017/03/03/17/30/yoga-2114512_960_720.jpg"
!wget -O /tmp/image.jpeg {test_image_url}

if len(test_image_url):
  image = tf.io.read_file('/tmp/image.jpeg')
  image = tf.io.decode_jpeg(image)
  person = detect(image)
  _ = draw_prediction_on_image(image.numpy(), person, crop_region=None,
                               close_figure=False, keep_input_size=True)


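Part 1: Preprocess the input images

Because the input for our pose classifier is the output landmarks from the MoveNet model, we need to generate our training dataset by running labeled images through MoveNet and then capturing all the landmark data and ground truth labels into a CSV file.

The dataset we've provided for this tutorial is a CG-generated yoga pose dataset. It contains images of multiple CG-generated models doing 5 different yoga poses. The directory is already split into a train dataset and a test dataset.

So in this section, we'll download the yoga dataset and run it through MoveNet so we can capture all the landmarks into a CSV file. However, it takes about 15 minutes to feed our yoga dataset to MoveNet and generate this CSV file. As an alternative, you can download a pre-existing CSV file for the yoga dataset by setting the is_skip_step_1 parameter below to True. That way, you'll skip this step and instead download the same CSV file that this preprocessing step would create.

On the other hand, if you want to train the pose classifier with your own image dataset, you need to upload your images and run this preprocessing step (leave is_skip_step_1 as False). Follow the instructions below to upload your own pose dataset.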
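To make the CSV layout concrete, here is a minimal sketch of the header that `_all_landmarks_as_dataframe()` produces: a file name, then an x, y, and score column per landmark, then the two label columns. The `BODY_PARTS` list is an assumption that mirrors the 17 keypoints of the `BodyPart` enum in the sample repository; `csv_header` is a hypothetical helper name.

```python
# Assumed to match the member names of the sample repo's BodyPart enum.
BODY_PARTS = [
    'NOSE', 'LEFT_EYE', 'RIGHT_EYE', 'LEFT_EAR', 'RIGHT_EAR',
    'LEFT_SHOULDER', 'RIGHT_SHOULDER', 'LEFT_ELBOW', 'RIGHT_ELBOW',
    'LEFT_WRIST', 'RIGHT_WRIST', 'LEFT_HIP', 'RIGHT_HIP',
    'LEFT_KNEE', 'RIGHT_KNEE', 'LEFT_ANKLE', 'RIGHT_ANKLE',
]


def csv_header(body_parts=BODY_PARTS):
  """Builds the column names of the combined landmarks CSV."""
  header = ['file_name']
  for part in body_parts:
    header += [part + '_x', part + '_y', part + '_score']
  # The label columns are added to the dataframe after the landmark columns.
  return header + ['class_no', 'class_name']


header = csv_header()
print(len(header))   # 1 file name + 17 * 3 landmark values + 2 labels
print(header[:4], header[-2:])
```

Under this assumption each row carries 51 landmark values, which is the feature vector the classifier in Part 2 consumes.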

is_skip_step_1 = False

(Optional) Upload your own pose dataset

use_custom_dataset = False

dataset_is_split = False

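If you want to train the pose classifier with your own labeled poses (they can be any poses, not just yoga poses), follow these steps:

- Set the use_custom_dataset option above to True.

- Prepare an archive file (ZIP, TAR, or other) that includes a folder with your image dataset. The folder must include sorted images of your poses, as shown below.

  If you've already split your dataset into train and test sets, set dataset_is_split to True. That is, your images folder must include "train" and "test" directories like this:

  yoga_poses/
  |__ train/
      |__ downdog/
          |______ 00000128.jpg
          |______ ...
  |__ test/
      |__ downdog/
          |______ 00000181.jpg
          |______ ...

- Click the Files tab on the left (folder icon), and then click Upload to session storage (file icon).

- Select your archive file and wait until it finishes uploading before you proceed.

- Edit the following code block to specify the name of your archive file and images directory. (By default, we expect a ZIP file, so you'll also need to modify that part if your archive is in another format.)

- Now run the rest of the notebook.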
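If your archive is not a ZIP file, one alternative to editing the shell command is to extract it from Python. The sketch below assumes the standard library's `shutil.unpack_archive`, which picks the format from the file extension (ZIP and several TAR variants); `extract_dataset` and the file names are hypothetical, and the tiny archive exists only to make the example runnable.

```python
import os
import shutil
import tempfile
import zipfile


def extract_dataset(archive_path, dest_dir):
  """Extracts a ZIP or TAR archive and returns the top-level entries."""
  shutil.unpack_archive(archive_path, dest_dir)
  return sorted(os.listdir(dest_dir))


# Build a tiny throwaway archive so the example can run anywhere.
work_dir = tempfile.mkdtemp()
archive_path = os.path.join(work_dir, 'my_poses.zip')
with zipfile.ZipFile(archive_path, 'w') as archive:
  archive.writestr('yoga_poses/downdog/00000128.jpg', b'')

top_level = extract_dataset(archive_path, os.path.join(work_dir, 'extracted'))
print(top_level)
```

The top-level folder name returned here is what you would assign to `dataset_in` in the code block that follows.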

import os
import random
import shutil

def split_into_train_test(images_origin, images_dest, test_split):
  """Splits a directory of sorted images into training and test sets.

  Args:
    images_origin: Path to the directory with your images. This directory
      must include subdirectories for each of your labeled classes. For example:
      yoga_poses/
      |__ downdog/
          |______ 00000128.jpg
          |______ 00000181.jpg
          |______ ...
      |__ goddess/
          |______ 00000243.jpg
          |______ 00000306.jpg
          |______ ...
      ...
    images_dest: Path to a directory where you want the split dataset to be
      saved. The result looks like this:
      split_yoga_poses/
      |__ train/
          |__ downdog/
              |______ 00000128.jpg
              |______ ...
      |__ test/
          |__ downdog/
              |______ 00000181.jpg
              |______ ...
    test_split: Fraction of data to reserve for test (float between 0 and 1).
  """
  _, dirs, _ = next(os.walk(images_origin))

  TRAIN_DIR = os.path.join(images_dest, 'train')
  TEST_DIR = os.path.join(images_dest, 'test')
  os.makedirs(TRAIN_DIR, exist_ok=True)
  os.makedirs(TEST_DIR, exist_ok=True)

  for dir in dirs:
    # Get all filenames for this dir, filtered by filetype
    filenames = os.listdir(os.path.join(images_origin, dir))
    filenames = [os.path.join(images_origin, dir, f) for f in filenames if (
        f.endswith('.png') or f.endswith('.jpg') or f.endswith('.jpeg') or f.endswith('.bmp'))]
    # Shuffle the files, deterministically
    filenames.sort()
    random.seed(42)
    random.shuffle(filenames)
    # Divide them into train/test dirs
    os.makedirs(os.path.join(TEST_DIR, dir), exist_ok=True)
    os.makedirs(os.path.join(TRAIN_DIR, dir), exist_ok=True)
    test_count = int(len(filenames) * test_split)
    for i, file in enumerate(filenames):
      if i < test_count:
        destination = os.path.join(TEST_DIR, dir, os.path.split(file)[1])
      else:
        destination = os.path.join(TRAIN_DIR, dir, os.path.split(file)[1])
      # Files are copied, so the original dataset is left untouched.
      shutil.copyfile(file, destination)
    print(f'Copied {test_count} of {len(filenames)} from class "{dir}" into test.')
  print(f'Your split dataset is in "{images_dest}"')
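The split rule inside `split_into_train_test()` (sort, shuffle with a fixed seed, then reserve the first `int(n * test_split)` files for test) can be checked on plain file names. This is a minimal sketch; `split_filenames` is a hypothetical helper, and it uses a local `random.Random(seed)` instead of seeding the global generator, which is a design choice of this example rather than what the function above does.

```python
import random


def split_filenames(filenames, test_split, seed=42):
  """Returns (train, test) lists using a deterministic shuffle."""
  names = sorted(filenames)             # fixed starting order
  random.Random(seed).shuffle(names)    # same seed gives the same shuffle
  test_count = int(len(names) * test_split)
  return names[test_count:], names[:test_count]


files = ['%08d.jpg' % i for i in range(10)]
train, test = split_filenames(files, test_split=0.2)
print(len(train), len(test))

# Running it again yields exactly the same split.
assert (train, test) == split_filenames(files, test_split=0.2)
```

Because `int()` truncates, a class with fewer than `1 / test_split` images ends up with no test images at all, which is worth checking for small custom datasets.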

if use_custom_dataset:
  # ATTENTION:
  # You must edit these two lines to match your archive and images folder name:
  # !tar -xf YOUR_DATASET_ARCHIVE_NAME.tar
  !unzip -q YOUR_DATASET_ARCHIVE_NAME.zip
  dataset_in = 'YOUR_DATASET_DIR_NAME'

  # You can leave the rest alone:
  if not os.path.isdir(dataset_in):
    raise Exception("dataset_in is not a valid directory")
  if dataset_is_split:
    IMAGES_ROOT = dataset_in
  else:
    dataset_out = 'split_' + dataset_in
    split_into_train_test(dataset_in, dataset_out, test_split=0.2)
    IMAGES_ROOT = dataset_out

Note: If you're using split_into_train_test() to split the dataset, it expects all images to be PNG, JPEG, or BMP, and it ignores other file types.
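The file-type filter in `split_into_train_test()` compares extensions with `str.endswith`, which is case-sensitive, so a file named `pose.JPG` would be ignored. The sketch below reproduces that check; `is_supported_image` and its `ignore_case` option are hypothetical and not part of the notebook.

```python
IMAGE_EXTENSIONS = ('.png', '.jpg', '.jpeg', '.bmp')


def is_supported_image(filename, ignore_case=False):
  """Mirrors the extension check; optionally lowercases the name first."""
  name = filename.lower() if ignore_case else filename
  # str.endswith accepts a tuple and returns True if any suffix matches.
  return name.endswith(IMAGE_EXTENSIONS)


print(is_supported_image('downdog/00000128.jpg'))
print(is_supported_image('downdog/00000128.JPG'))
print(is_supported_image('downdog/00000128.JPG', ignore_case=True))
print(is_supported_image('downdog/notes.txt'))
```

If your camera or export tool writes upper-case extensions, renaming the files before the split is the simplest way to keep them in the dataset.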

Download the yoga dataset

if not is_skip_step_1 and not use_custom_dataset:
  !wget -O yoga_poses.zip http://download.tensorflow.org/data/pose_classification/yoga_poses.zip
  !unzip -q yoga_poses.zip -d yoga_cg
  IMAGES_ROOT = "yoga_cg"


Preprocess the TRAIN dataset

if not is_skip_step_1:
  images_in_train_folder = os.path.join(IMAGES_ROOT, 'train')
  images_out_train_folder = 'poses_images_out_train'
  csvs_out_train_path = 'train_data.csv'

  preprocessor = MoveNetPreprocessor(
      images_in_folder=images_in_train_folder,
      images_out_folder=images_out_train_folder,
      csvs_out_path=csvs_out_train_path,
  )

  preprocessor.process(per_pose_class_limit=None)

Preprocessing chair
100%|██████████| 200/200 [00:17<00:00, 11.53it/s]
Preprocessing cobra
100%|██████████| 200/200 [00:16<00:00, 12.01it/s]
Preprocessing dog
100%|██████████| 200/200 [00:16<00:00, 11.91it/s]
Preprocessing tree
100%|██████████| 200/200 [00:17<00:00, 11.35it/s]
Preprocessing warrior
100%|██████████| 200/200 [00:15<00:00, 12.99it/s]
Skipped yoga_cg/train/chair/girl3_chair091.jpg. No pose was confidently detected.
Skipped yoga_cg/train/chair/girl3_chair092.jpg. No pose was confidently detected.
Skipped yoga_cg/train/chair/girl3_chair093.jpg. No pose was confidently detected.
...
No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior063.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior083.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior085.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior102.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior106.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior108.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior042.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior047.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior049.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior054.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior056.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior057.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior061.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior066.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/girl3_warrior067.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior074.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior075.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior095.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior103.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior107.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior115.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior117.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior048.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/guy1_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior052.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior055.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior057.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior062.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior069.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior076.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior077.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior080.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior081.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior082.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior118.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior120.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior121.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior124.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/guy1_warrior125.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior126.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior131.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior135.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior138.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior148.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior086.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior111.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior118.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior122.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior129.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior131.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior135.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior137.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior139.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior148.jpg. No pose was confidentlly detected.

Preprocess the TEST dataset

if not is_skip_step_1:
  images_in_test_folder = os.path.join(IMAGES_ROOT, 'test')
  images_out_test_folder = 'poses_images_out_test'
  csvs_out_test_path = 'test_data.csv'

  preprocessor = MoveNetPreprocessor(
      images_in_folder=images_in_test_folder,
      images_out_folder=images_out_test_folder,
      csvs_out_path=csvs_out_test_path,
  )

  preprocessor.process(per_pose_class_limit=None)

Preprocessing chair 0%| | 0/84 [00:00<?, ?it/s]/tmpfs/tmp/ipykernel_35698/1291585794.py:128: DeprecationWarning: `np.str` is a deprecated alias for the builtin `str`. To silence this warning, use `str` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.str_` here. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations coordinates = pose_landmarks.flatten().astype(np.str).tolist() 100%|██████████| 84/84 [00:08<00:00, 9.33it/s] Preprocessing cobra 100%|██████████| 116/116 [00:09<00:00, 11.73it/s] Preprocessing dog 100%|██████████| 90/90 [00:08<00:00, 11.22it/s] Preprocessing tree 100%|██████████| 96/96 [00:08<00:00, 11.03it/s] Preprocessing warrior 100%|██████████| 109/109 [00:09<00:00, 11.83it/s] Skipped yoga_cg/test/cobra/guy3_cobra048.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra050.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra051.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra052.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra053.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra054.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra055.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra056.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra057.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra058.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra059.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra060.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra062.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra069.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/test/cobra/guy3_cobra075.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra077.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra081.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra124.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra131.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra132.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra134.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra135.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra136.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog025.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog026.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog036.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog042.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog106.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog108.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior042.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior044.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior045.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior046.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior047.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior048.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior050.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior051.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/test/warrior/guy3_warrior052.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior053.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior054.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior055.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior056.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior059.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior060.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior062.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior063.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior065.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior066.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior070.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior071.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior072.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior074.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior075.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior076.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior077.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior080.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior081.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/test/warrior/guy3_warrior082.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior083.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior084.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior085.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior086.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior087.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior089.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior137.jpg. No pose was confidentlly detected.
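Because images without a confident detection are skipped, the CSV can contain fewer rows per class than there are images in the folder. The following is a minimal sketch for checking how many samples per class made it into the CSV. It assumes only that the CSV has a `class_name` column (which `load_pose_landmarks` reads later); the helper name `count_rows_per_class`, the in-memory sample data, and the coordinate column names are hypothetical stand-ins for `test_data.csv`.

```python
import csv
import io
from collections import Counter


def count_rows_per_class(csv_file):
    """Counts CSV rows per value of the `class_name` column."""
    reader = csv.DictReader(csv_file)
    return Counter(row["class_name"] for row in reader)


# Hypothetical miniature CSV standing in for test_data.csv.
sample_csv = io.StringIO(
    "file_name,x0,y0,score0,class_no,class_name\n"
    "a.jpg,10,20,0.9,0,chair\n"
    "b.jpg,11,21,0.8,0,chair\n"
    "c.jpg,12,22,0.7,1,cobra\n"
)
counts = count_rows_per_class(sample_csv)
print(dict(counts))
```

For a real file, the same helper could be called on `open(csvs_out_test_path, newline="")`. A class with very few surviving rows may need more images or a different camera angle.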

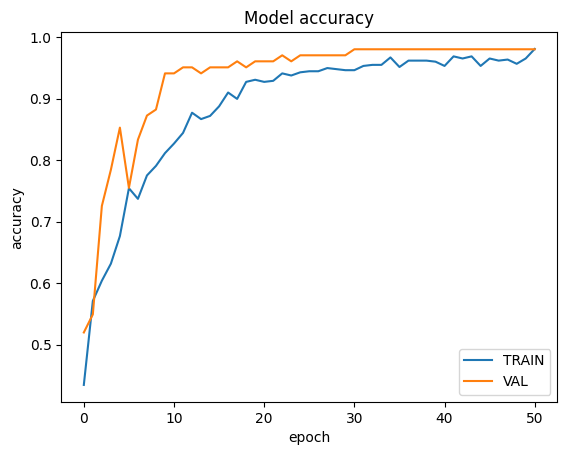

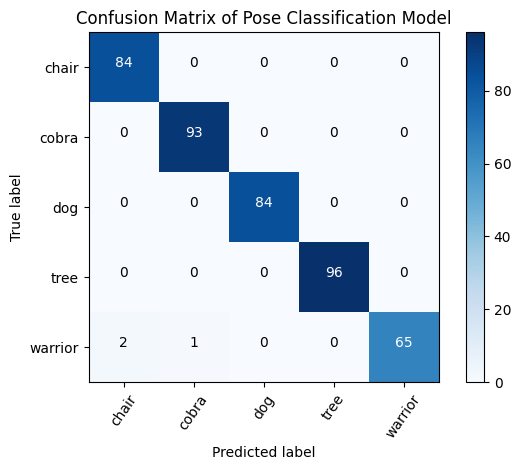

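Part 2: Train a pose classification model that takes the landmark coordinates as input and outputs the predicted labels.

You will build a TensorFlow model that takes the landmark coordinates and predicts the pose class that the person in the input image performs. The model consists of two submodels:

- Submodel 1 calculates a pose embedding (also known as a feature vector) from the detected landmark coordinates.

- Submodel 2 feeds the pose embedding through several Dense layers to predict the pose class.

You will then train the model on the dataset that was preprocessed in part 1.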

(Optional) Download the preprocessed dataset if you didn't run part 1

# Download the preprocessed CSV files which are the same as the output of step 1
if is_skip_step_1:
  !wget -O train_data.csv http://download.tensorflow.org/data/pose_classification/yoga_train_data.csv
  !wget -O test_data.csv http://download.tensorflow.org/data/pose_classification/yoga_test_data.csv

  csvs_out_train_path = 'train_data.csv'
  csvs_out_test_path = 'test_data.csv'
  is_skipped_step_1 = True

Load the preprocessed CSVs into TRAIN and TEST datasets.

def load_pose_landmarks(csv_path):
  """Loads a CSV created by MoveNetPreprocessor.

  Returns:
    X: Detected landmark coordinates and scores of shape (N, 17 * 3)
    y: Ground truth labels of shape (N, label_count)
    classes: The list of all class names found in the dataset
    dataframe: The CSV loaded as a Pandas dataframe
  """

  # Load the CSV file
  dataframe = pd.read_csv(csv_path)
  df_to_process = dataframe.copy()

  # Drop the file_name column as you don't need it during training.
  df_to_process.drop(columns=['file_name'], inplace=True)

  # Extract the list of class names
  classes = df_to_process.pop('class_name').unique()

  # Extract the labels
  y = df_to_process.pop('class_no')

  # Convert the input features and labels into the correct format for training.
  X = df_to_process.astype('float64')
  y = keras.utils.to_categorical(y)

  return X, y, classes, dataframe
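The call to `keras.utils.to_categorical` turns the integer `class_no` values into one-hot rows, which is the label format that the `categorical_crossentropy` loss expects. The NumPy sketch below illustrates the idea only; the helper `one_hot` is hypothetical and is not part of the notebook.

```python
import numpy as np


def one_hot(class_indices, num_classes=None):
    """Returns one row per sample with a 1.0 at the sample's class index."""
    class_indices = np.asarray(class_indices, dtype=int)
    if num_classes is None:
        # Inferred from the data, so a missing highest class shrinks the width.
        num_classes = int(class_indices.max()) + 1
    return np.eye(num_classes, dtype="float32")[class_indices]


labels = one_hot([0, 2, 1, 2])
print(labels)
```

Since the number of classes is inferred from the largest index when it is not passed explicitly, it can be worth checking that the TRAIN and TEST label matrices have the same number of columns.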

Load the original TRAIN dataset and split it into TRAIN (85% of the data) and VALIDATE (the remaining 15%).

# Load the train data
X, y, class_names, _ = load_pose_landmarks(csvs_out_train_path)

# Split training data (X, y) into (X_train, y_train) and (X_val, y_val)
X_train, X_val, y_train, y_val = train_test_split(X, y,
                                                  test_size=0.15)

# Load the test data
X_test, y_test, _, df_test = load_pose_landmarks(csvs_out_test_path)
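Note that `train_test_split` shuffles the rows randomly by default, so each run produces a different split (and slightly different accuracy numbers) unless a `random_state` is passed. The sketch below shows, in plain NumPy, what a seeded 85/15 shuffled split does. The helper `shuffled_split` and the toy arrays are hypothetical and only illustrate the mechanics.

```python
import numpy as np


def shuffled_split(features, labels, val_fraction=0.15, seed=0):
    """Shuffles row indices once, then slices them into validation and train."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(features))
    n_val = int(round(val_fraction * len(features)))
    val_idx, train_idx = order[:n_val], order[n_val:]
    return (features[train_idx], features[val_idx],
            labels[train_idx], labels[val_idx])


# Toy data: row i holds (2 * i, 2 * i + 1) and has label i.
features = np.arange(200, dtype="float64").reshape(100, 2)
labels = np.arange(100)
x_tr, x_va, y_tr, y_va = shuffled_split(features, labels)
print(len(x_tr), len(x_va))
```

Using the same permutation for features and labels keeps every sample paired with its own label, which is the property that matters most in any hand-written split.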

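Define functions to convert the pose landmarks to a pose embedding (also known as a feature vector) for pose classification

Next, convert the landmark coordinates to a feature vector by:

- Moving the pose center to the origin.

- Scaling the pose so that the pose size becomes 1.

- Flattening these coordinates into a feature vector.

Then use this feature vector to train a neural-network-based pose classifier.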
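The three steps can be sketched for a single pose in NumPy. This is an illustration under stated assumptions, not the notebook's implementation: the keypoint indices assume MoveNet's COCO keypoint order (the notebook's `BodyPart` enum is defined outside this section), the helper `normalize_pose` is hypothetical, and the pose size follows the description in the `get_pose_size` docstring rather than reproducing the TensorFlow ops line by line, so values may differ from the notebook's.

```python
import numpy as np

# Assumed keypoint indices (COCO order used by MoveNet).
LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_HIP, RIGHT_HIP = 5, 6, 11, 12


def normalize_pose(landmarks, torso_size_multiplier=2.5):
    """Centers one (17, 2) pose on the hips, scales it, and flattens it."""
    landmarks = np.asarray(landmarks, dtype="float64")
    hips_center = 0.5 * (landmarks[LEFT_HIP] + landmarks[RIGHT_HIP])
    shoulders_center = 0.5 * (landmarks[LEFT_SHOULDER] + landmarks[RIGHT_SHOULDER])

    # Step 1: move the pose center (hip midpoint) to the origin.
    centered = landmarks - hips_center

    # Step 2: divide by the pose size, the larger of the scaled torso length
    # and the largest distance from the center to any landmark.
    torso_size = np.linalg.norm(shoulders_center - hips_center)
    max_dist = np.linalg.norm(centered, axis=1).max()
    pose_size = max(torso_size * torso_size_multiplier, max_dist)

    # Step 3: flatten the (17, 2) coordinates into a 34-element vector.
    return (centered / pose_size).reshape(-1)


rng = np.random.default_rng(0)
pose = rng.uniform(0, 100, size=(17, 2))
embedding = normalize_pose(pose)
moved_and_scaled = normalize_pose(pose * 3.0 + np.array([40.0, -7.0]))
print(embedding.shape, np.allclose(embedding, moved_and_scaled))
```

The point of the normalization is that the same pose photographed closer to the camera or at a different position in the frame maps to the same feature vector, so the classifier only has to learn the shape of the pose.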

def get_center_point(landmarks, left_bodypart, right_bodypart):
  """Calculates the center point of the two given landmarks."""

  left = tf.gather(landmarks, left_bodypart.value, axis=1)
  right = tf.gather(landmarks, right_bodypart.value, axis=1)
  center = left * 0.5 + right * 0.5
  return center

def get_pose_size(landmarks, torso_size_multiplier=2.5):
  """Calculates pose size.

  It is the maximum of two values:
    * Torso size multiplied by `torso_size_multiplier`
    * Maximum distance from pose center to any pose landmark
  """
  # Hips center
  hips_center = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                 BodyPart.RIGHT_HIP)

  # Shoulders center
  shoulders_center = get_center_point(landmarks, BodyPart.LEFT_SHOULDER,
                                      BodyPart.RIGHT_SHOULDER)

  # Torso size as the minimum body size
  torso_size = tf.linalg.norm(shoulders_center - hips_center)

  # Pose center
  pose_center_new = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                     BodyPart.RIGHT_HIP)
  pose_center_new = tf.expand_dims(pose_center_new, axis=1)
  # Broadcast the pose center to the same size as the landmark vector to
  # perform subtraction
  pose_center_new = tf.broadcast_to(pose_center_new,
                                    [tf.size(landmarks) // (17*2), 17, 2])

  # Dist to pose center
  d = tf.gather(landmarks - pose_center_new, 0, axis=0,
                name="dist_to_pose_center")
  # Max dist to pose center
  max_dist = tf.reduce_max(tf.linalg.norm(d, axis=0))

  # Normalize scale
  pose_size = tf.maximum(torso_size * torso_size_multiplier, max_dist)

  return pose_size

def normalize_pose_landmarks(landmarks):
  """Normalizes the landmarks translation by moving the pose center to (0,0) and
  scaling it to a constant pose size.
  """
  # Move landmarks so that the pose center becomes (0,0)
  pose_center = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                 BodyPart.RIGHT_HIP)
  pose_center = tf.expand_dims(pose_center, axis=1)
  # Broadcast the pose center to the same size as the landmark vector to perform
  # subtraction
  pose_center = tf.broadcast_to(pose_center,
                                [tf.size(landmarks) // (17*2), 17, 2])
  landmarks = landmarks - pose_center

  # Scale the landmarks to a constant pose size
  pose_size = get_pose_size(landmarks)
  landmarks /= pose_size

  return landmarks

def landmarks_to_embedding(landmarks_and_scores):
  """Converts the input landmarks into a pose embedding."""
  # Reshape the flat input into a matrix with shape=(17, 3)
  reshaped_inputs = keras.layers.Reshape((17, 3))(landmarks_and_scores)

  # Normalize landmarks 2D
  landmarks = normalize_pose_landmarks(reshaped_inputs[:, :, :2])

  # Flatten the normalized landmark coordinates into a vector
  embedding = keras.layers.Flatten()(landmarks)

  return embedding
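The shape bookkeeping in `landmarks_to_embedding` can be checked on a single row with NumPy. This is a small sketch that assumes each keypoint is stored as two coordinates followed by a score, as the `[:, :, :2]` slice implies; the row contents are placeholder numbers, not real detections.

```python
import numpy as np

# One hypothetical CSV row: 17 keypoints, each stored as two coordinates
# followed by a detection score (51 values in total).
flat_row = np.arange(51, dtype="float32")

keypoints = flat_row.reshape(17, 3)   # one row per keypoint
xy = keypoints[:, :2]                 # keep the two coordinates
scores = keypoints[:, 2]              # scores are dropped from the embedding

print(xy.shape, scores.shape, xy.reshape(-1).shape)  # (17, 2) (17,) (34,)
```

This is why the embedding fed to the first Dense layer has 34 values rather than 51: the classifier sees only the normalized coordinates, not the detection scores.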

Define a Keras model for pose classification

Our Keras model takes the detected pose landmarks, then calculates the pose embedding and predicts the pose class.

# Define the model
# The input is one flat vector per sample: 17 keypoints * 3 values each.
inputs = tf.keras.Input(shape=(51,))
embedding = landmarks_to_embedding(inputs)

layer = keras.layers.Dense(128, activation=tf.nn.relu6)(embedding)
layer = keras.layers.Dropout(0.5)(layer)
layer = keras.layers.Dense(64, activation=tf.nn.relu6)(layer)
layer = keras.layers.Dropout(0.5)(layer)
outputs = keras.layers.Dense(len(class_names), activation="softmax")(layer)

model = keras.Model(inputs, outputs)
model.summary()

Model: "model"
__________________________________________________________________________________________________
 Layer (type)                                Output Shape    Param #  Connected to
==================================================================================================
 input_1 (InputLayer)                        [(None, 51)]    0        []
 reshape (Reshape)                           (None, 17, 3)   0        ['input_1[0][0]']
 tf.__operators__.getitem (SlicingOpLambda)  (None, 17, 2)   0        ['reshape[0][0]']
 tf.compat.v1.gather (TFOpLambda)            (None, 2)       0        ['tf.__operators__.getitem[0][0]']
 tf.compat.v1.gather_1 (TFOpLambda)          (None, 2)       0        ['tf.__operators__.getitem[0][0]']
 tf.math.multiply (TFOpLambda)               (None, 2)       0        ['tf.compat.v1.gather[0][0]']
 tf.math.multiply_1 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_1[0][0]']
 tf.__operators__.add (TFOpLambda)           (None, 2)       0        ['tf.math.multiply[0][0]', 'tf.math.multiply_1[0][0]']
 tf.compat.v1.size (TFOpLambda)              ()              0        ['tf.__operators__.getitem[0][0]']
 tf.expand_dims (TFOpLambda)                 (None, 1, 2)    0        ['tf.__operators__.add[0][0]']
 tf.compat.v1.floor_div (TFOpLambda)         ()              0        ['tf.compat.v1.size[0][0]']
 tf.broadcast_to (TFOpLambda)                (None, 17, 2)   0        ['tf.expand_dims[0][0]', 'tf.compat.v1.floor_div[0][0]']
 tf.math.subtract (TFOpLambda)               (None, 17, 2)   0        ['tf.__operators__.getitem[0][0]', 'tf.broadcast_to[0][0]']
 tf.compat.v1.gather_6 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.compat.v1.gather_7 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.math.multiply_6 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_6[0][0]']
 tf.math.multiply_7 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_7[0][0]']
 tf.__operators__.add_3 (TFOpLambda)         (None, 2)       0        ['tf.math.multiply_6[0][0]', 'tf.math.multiply_7[0][0]']
 tf.compat.v1.size_1 (TFOpLambda)            ()              0        ['tf.math.subtract[0][0]']
 tf.compat.v1.gather_4 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.compat.v1.gather_5 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.compat.v1.gather_2 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.compat.v1.gather_3 (TFOpLambda)          (None, 2)       0        ['tf.math.subtract[0][0]']
 tf.expand_dims_1 (TFOpLambda)               (None, 1, 2)    0        ['tf.__operators__.add_3[0][0]']
 tf.compat.v1.floor_div_1 (TFOpLambda)       ()              0        ['tf.compat.v1.size_1[0][0]']
 tf.math.multiply_4 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_4[0][0]']
 tf.math.multiply_5 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_5[0][0]']
 tf.math.multiply_2 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_2[0][0]']
 tf.math.multiply_3 (TFOpLambda)             (None, 2)       0        ['tf.compat.v1.gather_3[0][0]']
 tf.broadcast_to_1 (TFOpLambda)              (None, 17, 2)   0        ['tf.expand_dims_1[0][0]', 'tf.compat.v1.floor_div_1[0][0]']
 tf.__operators__.add_2 (TFOpLambda)         (None, 2)       0        ['tf.math.multiply_4[0][0]', 'tf.math.multiply_5[0][0]']
 tf.__operators__.add_1 (TFOpLambda)         (None, 2)       0        ['tf.math.multiply_2[0][0]', 'tf.math.multiply_3[0][0]']
 tf.math.subtract_2 (TFOpLambda)             (None, 17, 2)   0        ['tf.math.subtract[0][0]', 'tf.broadcast_to_1[0][0]']
 tf.math.subtract_1 (TFOpLambda)             (None, 2)       0        ['tf.__operators__.add_2[0][0]', 'tf.__operators__.add_1[0][0]']
 tf.compat.v1.gather_8 (TFOpLambda)          (17, 2)         0        ['tf.math.subtract_2[0][0]']
 tf.compat.v1.norm (TFOpLambda)              ()              0        ['tf.math.subtract_1[0][0]']
 tf.compat.v1.norm_1 (TFOpLambda)            (2,)            0        ['tf.compat.v1.gather_8[0][0]']
 tf.math.multiply_8 (TFOpLambda)             ()              0        ['tf.compat.v1.norm[0][0]']
 tf.math.reduce_max (TFOpLambda)             ()              0        ['tf.compat.v1.norm_1[0][0]']
 tf.math.maximum (TFOpLambda)                ()              0        ['tf.math.multiply_8[0][0]', 'tf.math.reduce_max[0][0]']
 tf.math.truediv (TFOpLambda)                (None, 17, 2)   0        ['tf.math.subtract[0][0]', 'tf.math.maximum[0][0]']
 flatten (Flatten)                           (None, 34)      0        ['tf.math.truediv[0][0]']
 dense (Dense)                               (None, 128)     4480     ['flatten[0][0]']
 dropout (Dropout)                           (None, 128)     0        ['dense[0][0]']
 dense_1 (Dense)                             (None, 64)      8256     ['dropout[0][0]']
 dropout_1 (Dropout)                         (None, 64)      0        ['dense_1[0][0]']
 dense_2 (Dense)                             (None, 5)       325      ['dropout_1[0][0]']
==================================================================================================
Total params: 13,061
Trainable params: 13,061
Non-trainable params: 0
__________________________________________________________________________________________________
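The parameter counts in the summary can be verified by hand: a Dense layer has one weight per input-unit pair plus one bias per unit, and every other layer listed (reshape, slicing, arithmetic, dropout) has no trainable parameters. A short check, with the hypothetical helper `dense_params`:

```python
def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units


# (input size, units) for the three Dense layers in the summary:
# 34 embedding values -> 128 -> 64 -> 5 pose classes.
layer_sizes = [(34, 128), (128, 64), (64, 5)]
per_layer = [dense_params(i, u) for i, u in layer_sizes]
print(per_layer, sum(per_layer))  # [4480, 8256, 325] 13061
```

With roughly 13 thousand parameters, the classifier is tiny compared with the pose estimation model whose output it consumes.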

model.compile(
    optimizer='adam',
    loss='categorical_crossentropy',
    metrics=['accuracy']
)

# Add a checkpoint callback to store the checkpoint that has the highest
# validation accuracy.
checkpoint_path = "weights.best.hdf5"
checkpoint = keras.callbacks.ModelCheckpoint(checkpoint_path,
                             monitor='val_accuracy',
                             verbose=1,
                             save_best_only=True,
                             mode='max')
earlystopping = keras.callbacks.EarlyStopping(monitor='val_accuracy',
                                              patience=20)

# Start training
history = model.fit(X_train, y_train,
                    epochs=200,
                    batch_size=16,
                    validation_data=(X_val, y_val),
                    callbacks=[checkpoint, earlystopping])
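With `patience=20`, training stops once the validation accuracy has failed to improve for 20 consecutive epochs, so the run usually ends well before epoch 200. The pure-Python sketch below illustrates that rule in simplified form; the function `early_stopping_epoch` and the accuracy values are hypothetical, and the real Keras callback has further options (such as `min_delta`) that are ignored here.

```python
def early_stopping_epoch(val_accuracies, patience=20):
    """Returns (epoch at which training stops, best accuracy seen so far)."""
    best = float("-inf")
    wait = 0  # epochs since the last improvement
    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best:
            best = acc
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best
    return len(val_accuracies), best


# Made-up validation accuracies; a small patience keeps the example short.
val_acc = [0.5, 0.6, 0.55, 0.58, 0.59]
stopped_epoch, best = early_stopping_epoch(val_acc, patience=2)
print(stopped_epoch, best)
```

In this example the best epoch (2) is not the last one (4), which is why the notebook also saves the best-scoring weights to `weights.best.hdf5` through the checkpoint callback.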

Epoch 1/200
Epoch 1: val_accuracy improved from -inf to 0.51961, saving model to weights.best.hdf5
37/37 [==============================] - 1s 13ms/step - loss: 1.5147 - accuracy: 0.4343 - val_loss: 1.3484 - val_accuracy: 0.5196
Epoch 2/200
Epoch 2: val_accuracy improved from 0.51961 to 0.54902, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 1.2110 - accuracy: 0.5709 - val_loss: 1.0034 - val_accuracy: 0.5490
Epoch 3/200
Epoch 3: val_accuracy improved from 0.54902 to 0.72549, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.9721 - accuracy: 0.6038 - val_loss: 0.8269 - val_accuracy: 0.7255
Epoch 4/200
Epoch 4: val_accuracy improved from 0.72549 to 0.78431, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.8367 - accuracy: 0.6315 - val_loss: 0.7136 - val_accuracy: 0.7843
Epoch 5/200
Epoch 5: val_accuracy improved from 0.78431 to 0.85294, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.7724 - accuracy: 0.6765 - val_loss: 0.6280 - val_accuracy: 0.8529
Epoch 6/200
Epoch 6: val_accuracy did not improve from 0.85294
37/37 [==============================] - 0s 4ms/step - loss: 0.6802 - accuracy: 0.7543 - val_loss: 0.5805 - val_accuracy: 0.7549
Epoch 7/200
Epoch 7: val_accuracy did not improve from 0.85294
37/37 [==============================] - 0s 4ms/step - loss: 0.6295 - accuracy: 0.7370 - val_loss: 0.5190 - val_accuracy: 0.8333
Epoch 8/200
Epoch 8: val_accuracy improved from 0.85294 to 0.87255, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.5991 - accuracy: 0.7751 - val_loss: 0.4707 - val_accuracy: 0.8725
Epoch 9/200
Epoch 9: val_accuracy improved from 0.87255 to 0.88235, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.5581 - accuracy: 0.7907 - val_loss: 0.4248 - val_accuracy: 0.8824
Epoch 10/200
Epoch 10: val_accuracy improved from 0.88235 to 0.94118, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.5100 - accuracy: 0.8114 - val_loss: 0.3767 - val_accuracy: 0.9412
Epoch 11/200
Epoch 11: val_accuracy did not improve from 0.94118
37/37 [==============================] - 0s 4ms/step - loss: 0.4526 - accuracy: 0.8270 - val_loss: 0.3413 - val_accuracy: 0.9412
Epoch 12/200
Epoch 12: val_accuracy improved from 0.94118 to 0.95098, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.4423 - accuracy: 0.8443 - val_loss: 0.3089 - val_accuracy: 0.9510
Epoch 13/200
Epoch 13: val_accuracy did not improve from 0.95098
37/37 [==============================] - 0s 4ms/step - loss: 0.3948 - accuracy: 0.8772 - val_loss: 0.2862 - val_accuracy: 0.9510
Epoch 14/200
Epoch 14: val_accuracy did not improve from 0.95098
37/37 [==============================] - 0s 4ms/step - loss: 0.3855 - accuracy: 0.8668 - val_loss: 0.2549 - val_accuracy: 0.9412
Epoch 15/200
Epoch 15: val_accuracy did not improve from 0.95098
37/37 [==============================] - 0s 4ms/step - loss: 0.3517 - accuracy: 0.8720 - val_loss: 0.2370 - val_accuracy: 0.9510
Epoch 16/200
Epoch 16: val_accuracy did not improve from 0.95098
37/37 [==============================] - 0s 4ms/step - loss: 0.3418 - accuracy: 0.8875 - val_loss: 0.2194 - val_accuracy: 0.9510
Epoch 17/200
Epoch 17: val_accuracy did not improve from 0.95098
37/37 [==============================] - 0s 4ms/step - loss: 0.3037 - accuracy: 0.9100 - val_loss: 0.2039 - val_accuracy: 0.9510
Epoch 18/200
Epoch 18: val_accuracy improved from 0.95098 to 0.96078, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.2858 - accuracy: 0.8997 - val_loss: 0.1966 - val_accuracy: 0.9608
Epoch 19/200
Epoch 19: val_accuracy did not improve from 0.96078
37/37 [==============================] - 0s 4ms/step - loss: 0.2668 - accuracy: 0.9273 - val_loss: 0.1804 - val_accuracy: 0.9510
Epoch 20/200
Epoch 20: val_accuracy did not improve from 0.96078
37/37 [==============================] - 0s 4ms/step - loss: 0.2454 - accuracy: 0.9308 - val_loss: 0.1697 - val_accuracy: 0.9608
Epoch 21/200
Epoch 21: val_accuracy did not improve from 0.96078
37/37 [==============================] - 0s 4ms/step - loss: 0.2490 - accuracy: 0.9273 - val_loss: 0.1577 - val_accuracy: 0.9608
Epoch 22/200
Epoch 22: val_accuracy did not improve from 0.96078
37/37 [==============================] - 0s 4ms/step - loss: 0.2412 - accuracy: 0.9291 - val_loss: 0.1498 - val_accuracy: 0.9608
Epoch 23/200
Epoch 23: val_accuracy improved from 0.96078 to 0.97059, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.2174 - accuracy: 0.9412 - val_loss: 0.1459 - val_accuracy: 0.9706
Epoch 24/200
Epoch 24: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.2382 - accuracy: 0.9377 - val_loss: 0.1350 - val_accuracy: 0.9608
Epoch 25/200
Epoch 25: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.2022 - accuracy: 0.9429 - val_loss: 0.1345 - val_accuracy: 0.9706
Epoch 26/200
Epoch 26: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.2280 - accuracy: 0.9446 - val_loss: 0.1223 - val_accuracy: 0.9706
Epoch 27/200
Epoch 27: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.1873 - accuracy: 0.9446 - val_loss: 0.1235 - val_accuracy: 0.9706
Epoch 28/200
Epoch 28: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.1767 - accuracy: 0.9498 - val_loss: 0.1164 - val_accuracy: 0.9706
Epoch 29/200
Epoch 29: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.1881 - accuracy: 0.9481 - val_loss: 0.1163 - val_accuracy: 0.9706
Epoch 30/200
Epoch 30: val_accuracy did not improve from 0.97059
37/37 [==============================] - 0s 4ms/step - loss: 0.1832 - accuracy: 0.9464 - val_loss: 0.1151 - val_accuracy: 0.9706
Epoch 31/200
Epoch 31: val_accuracy improved from 0.97059 to 0.98039, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.1848 - accuracy: 0.9464 - val_loss: 0.1037 - val_accuracy: 0.9804
Epoch 32/200
Epoch 32: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1811 - accuracy: 0.9533 - val_loss: 0.1052 - val_accuracy: 0.9804
Epoch 33/200
Epoch 33: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1698 - accuracy: 0.9550 - val_loss: 0.0994 - val_accuracy: 0.9804
Epoch 34/200
Epoch 34: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1698 - accuracy: 0.9550 - val_loss: 0.0914 - val_accuracy: 0.9804
Epoch 35/200
Epoch 35: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1693 - accuracy: 0.9671 - val_loss: 0.0919 - val_accuracy: 0.9804
Epoch 36/200
Epoch 36: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1638 - accuracy: 0.9516 - val_loss: 0.0914 - val_accuracy: 0.9804
Epoch 37/200
Epoch 37: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1458 - accuracy: 0.9619 - val_loss: 0.0886 - val_accuracy: 0.9804
Epoch 38/200
Epoch 38: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1421 - accuracy: 0.9619 - val_loss: 0.0831 - val_accuracy: 0.9804
Epoch 39/200
Epoch 39: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1406 - accuracy: 0.9619 - val_loss: 0.0802 - val_accuracy: 0.9804
Epoch 40/200
Epoch 40: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1649 - accuracy: 0.9602 - val_loss: 0.0802 - val_accuracy: 0.9804
Epoch 41/200
Epoch 41: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1327 - accuracy: 0.9533 - val_loss: 0.0789 - val_accuracy: 0.9804
Epoch 42/200
Epoch 42: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1319 - accuracy: 0.9689 - val_loss: 0.0754 - val_accuracy: 0.9804
Epoch 43/200
Epoch 43: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1316 - accuracy: 0.9654 - val_loss: 0.0701 - val_accuracy: 0.9804
Epoch 44/200
Epoch 44: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1151 - accuracy: 0.9689 - val_loss: 0.0699 - val_accuracy: 0.9804
Epoch 45/200
Epoch 45: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1234 - accuracy: 0.9533 - val_loss: 0.0700 - val_accuracy: 0.9804
Epoch 46/200
Epoch 46: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1178 - accuracy: 0.9654 - val_loss: 0.0672 - val_accuracy: 0.9804
Epoch 47/200
Epoch 47: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1091 - accuracy: 0.9619 - val_loss: 0.0663 - val_accuracy: 0.9804
Epoch 48/200
Epoch 48: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1154 - accuracy: 0.9637 - val_loss: 0.0649 - val_accuracy: 0.9804
Epoch 49/200
Epoch 49: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1225 - accuracy: 0.9567 - val_loss: 0.0658 - val_accuracy: 0.9804
Epoch 50/200
Epoch 50: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.1131 - accuracy: 0.9654 - val_loss: 0.0639 - val_accuracy: 0.9804
Epoch 51/200
Epoch 51: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.0867 - accuracy: 0.9810 - val_loss: 0.0618 - val_accuracy: 0.9804
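The log above shows two patterns. The file weights.best.hdf5 is written only when val_accuracy exceeds the previous best, and the last epoch shown (51) falls exactly 20 epochs after the last improvement (epoch 31). The plain-Python sketch below replays that bookkeeping on the val_accuracy values copied from the log. It is a simplified illustration, not the Keras implementation: the function name `simulate_callbacks` is hypothetical, and the patience of 20 is an assumption inferred from the log rather than a setting stated in this output.

```python
# val_accuracy for epochs 1-51, copied from the training log above.
VAL_ACCURACY = [
    0.5196, 0.5490, 0.7255, 0.7843, 0.8529, 0.7549, 0.8333, 0.8725, 0.8824, 0.9412,
    0.9412, 0.9510, 0.9510, 0.9412, 0.9510, 0.9510, 0.9510, 0.9608, 0.9510, 0.9608,
    0.9608, 0.9608, 0.9706, 0.9608, 0.9706, 0.9706, 0.9706, 0.9706, 0.9706, 0.9706,
] + [0.9804] * 21  # epochs 31-51 all report 0.9804


def simulate_callbacks(val_accuracy, patience):
    """Replay save-best-only checkpointing and patience-based early stopping.

    Returns (saved_epochs, stopped_epoch). stopped_epoch is None when the
    patience limit is never reached within the given values.
    """
    best = float("-inf")   # matches "improved from -inf" at epoch 1
    wait = 0               # consecutive epochs without improvement
    saved_epochs = []
    for epoch, value in enumerate(val_accuracy, start=1):
        if value > best:   # strict comparison: a tie is not an improvement
            best = value
            wait = 0
            saved_epochs.append(epoch)
        else:
            wait += 1
            if wait >= patience:
                return saved_epochs, epoch
    return saved_epochs, None


# patience=20 is an assumed value, chosen because it reproduces the log.
saved, stopped = simulate_callbacks(VAL_ACCURACY, patience=20)
print("checkpoint saved at epochs:", saved)
print("training stopped at epoch:", stopped)
```

With these inputs the saved epochs match the "improved" lines in the log, and the simulated stop lands on epoch 51. Because a tie does not count as an improvement, the checkpoint holds the weights from epoch 31, not those from the final epoch.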