Maintained by Arm ML Tooling

This document provides an overview of experimental APIs for combining various techniques to optimize machine learning models for deployment.

Overview

Collaborative optimization is an overarching process that combines various techniques to produce a model that, at deployment, shows the best balance of target characteristics such as inference speed, model size, and accuracy.

The idea of collaborative optimization is to build on the individual techniques by applying them one after another, so that their optimization effects accumulate. Various combinations of the following optimizations are possible:

- Weight pruning

- Weight clustering

- Quantization

The problem that arises when chaining these techniques is that applying one typically destroys the results of the preceding technique, which spoils the overall benefit of applying all of them together. For example, clustering does not preserve the sparsity introduced by the pruning API. To solve this problem, we introduce the following experimental collaborative optimization techniques:

- Sparsity preserving clustering

- Sparsity preserving quantization aware training (PQAT)

- Cluster preserving quantization aware training (CQAT)

- Sparsity and cluster preserving quantization aware training (PCQAT)
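To make the sparsity problem concrete, here is a minimal sketch in plain Python, not the library's implementation. It assumes a toy 1-D k-means with linearly spaced initial centroids, and the helper names (`kmeans_1d`, `cluster_naive`, `cluster_preserving_sparsity`) and the weight values are invented for illustration. Naive clustering pulls the pruned zeros onto a non-zero centroid, while the sparsity preserving variant clusters only the non-zero weights and leaves the zeros untouched.

```python
def kmeans_1d(values, k, iters=20):
    """Plain 1-D k-means with linearly spaced initial centroids (needs k >= 2)."""
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            buckets[nearest].append(v)
        # Move each centroid to the mean of its bucket; empty buckets stay put.
        centroids = [sum(b) / len(b) if b else c
                     for b, c in zip(buckets, centroids)]
    return centroids


def snap(value, centroids):
    """Replace a weight with its nearest centroid."""
    return min(centroids, key=lambda c: abs(value - c))


def cluster_naive(weights, k):
    """Cluster all weights, zeros included."""
    centroids = kmeans_1d(weights, k)
    return [snap(w, centroids) for w in weights]


def cluster_preserving_sparsity(weights, k):
    """Cluster only the non-zero weights and keep pruned zeros at zero."""
    nonzero = [w for w in weights if w != 0.0]
    centroids = kmeans_1d(nonzero, k)
    return [0.0 if w == 0.0 else snap(w, centroids) for w in weights]


def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Made-up kernel after pruning: 40% of the weights are zero.
pruned = [0.0, 0.0, 0.0, 0.0, 0.12, 0.15, -0.4, -0.45, 0.8, 0.9]
naive = cluster_naive(pruned, k=2)
preserved = cluster_preserving_sparsity(pruned, k=2)

print("sparsity after pruning:           ", sparsity(pruned))
print("sparsity after naive clustering:  ", sparsity(naive))
print("sparsity after preserving variant:", sparsity(preserved))
```

In this simplification the zero value sits alongside the `k` learned centroids; how the real API counts the zero centroid is not stated here.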

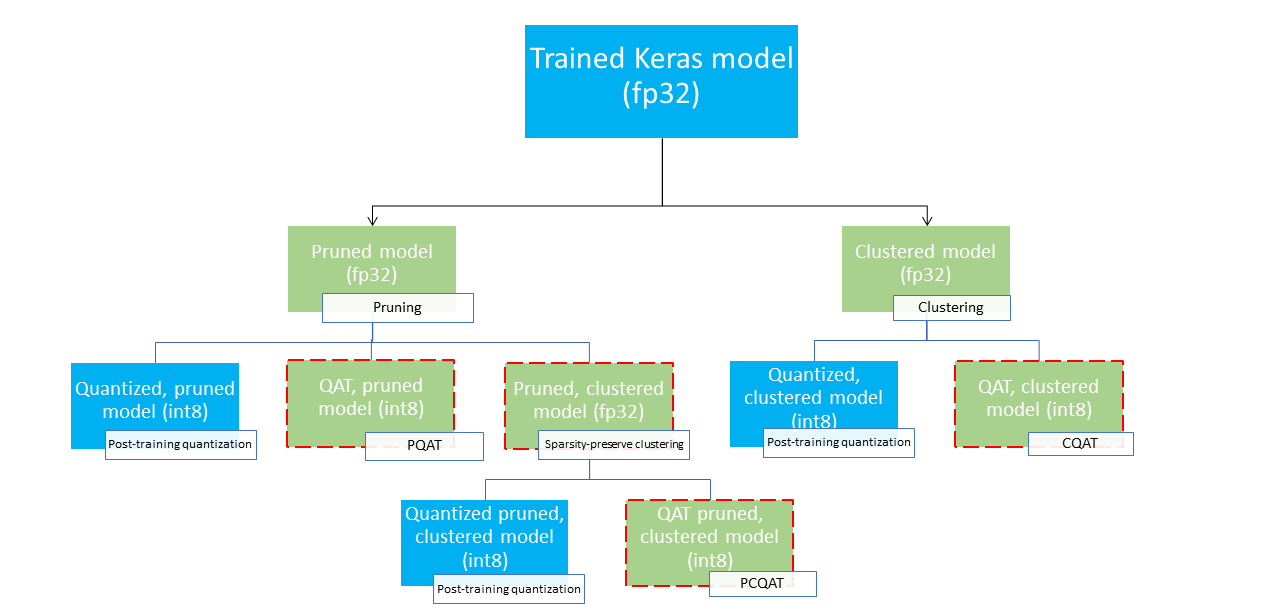

These techniques provide several deployment paths that can be used to compress a machine learning model and to take advantage of hardware acceleration at inference time. The diagram above shows several deployment paths that can be explored in search of a model with the desired deployment characteristics. The leaf nodes are deployment-ready models, meaning they are partially or fully quantized and in tflite format. A green fill indicates steps that require retraining or fine-tuning, and a dashed red border highlights the collaborative optimization steps. The technique used to obtain the model at a given node is indicated in the corresponding label.

The direct, quantization-only deployment path (post-training quantization or QAT) is omitted from the figure above.

The idea is to reach the fully optimized model at the third level of the deployment tree above. However, any of the other levels of optimization may prove satisfactory and achieve the required trade-off between inference latency and accuracy, in which case no further optimization is needed. The recommended training process is to go through the levels of the deployment tree that apply to the target deployment scenario one at a time and check whether the model meets the inference latency requirements. If it does not, use the corresponding collaborative optimization technique to compress the model further, and repeat until the model is fully optimized (pruned, clustered, and quantized), if needed.
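The iterative process described above can be sketched as a simple loop. This is only an illustration under stated assumptions: the model is a stand-in dictionary, the latency figures are made up, and `walk_deployment_tree` and the step functions are hypothetical names rather than part of any library. In practice each check would involve converting the model to a deployable format and measuring it on the target hardware.

```python
def walk_deployment_tree(model, levels, meets_requirements):
    """Apply optimization levels in order, stopping once the model is good enough.

    levels: list of (name, function) pairs, one per level of the deployment tree.
    meets_requirements: callable that evaluates the deployable model.
    """
    applied = []
    for name, optimize in levels:
        if meets_requirements(model):
            break  # No further optimization is needed.
        model = optimize(model)
        applied.append(name)
    return model, applied


# Stand-in model and made-up latencies, for illustration only.
baseline = {"latency_ms": 10.0}
levels = [
    ("pruning", lambda m: {**m, "latency_ms": 8.0}),
    ("sparsity_preserving_clustering", lambda m: {**m, "latency_ms": 6.0}),
    ("PCQAT", lambda m: {**m, "latency_ms": 3.0}),
]

final_model, applied_steps = walk_deployment_tree(
    baseline, levels, meets_requirements=lambda m: m["latency_ms"] <= 7.0
)
print(applied_steps, final_model)
```

With these toy numbers the loop stops after the second level, because the latency target is already met and the third step is never applied.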

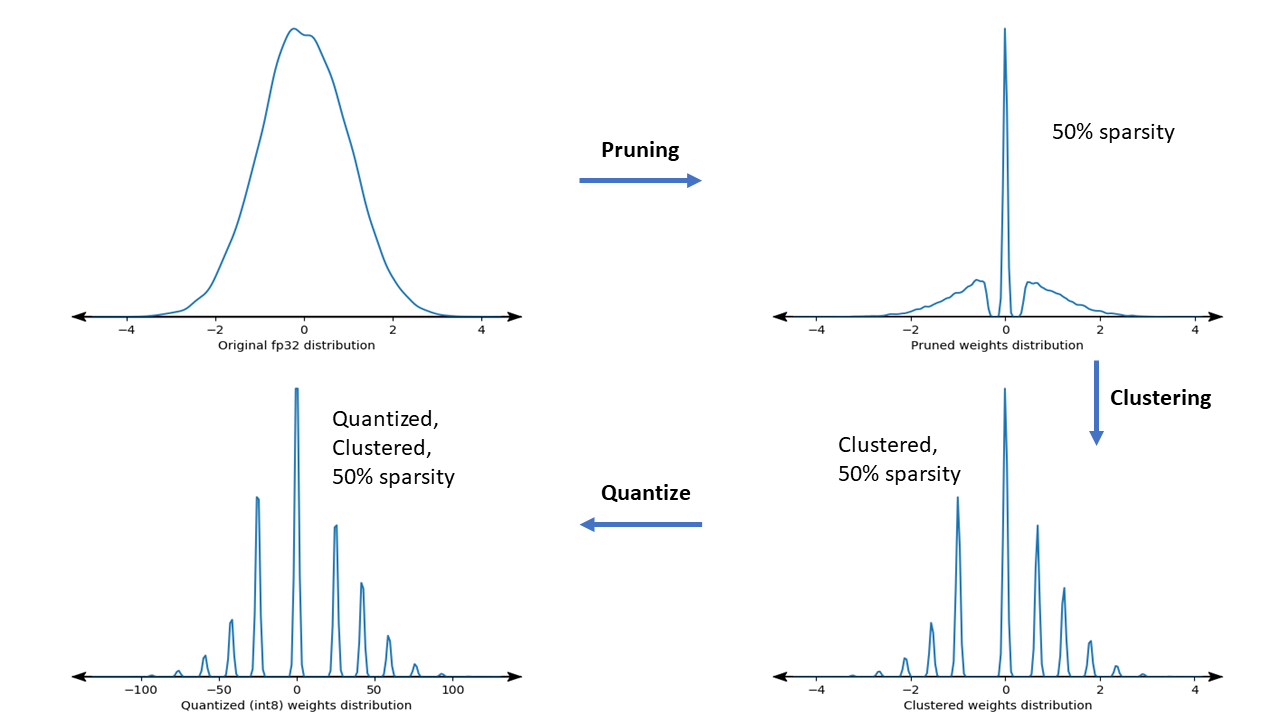

The figure above shows density plots of a sample weight kernel as it goes through the collaborative optimization pipeline.

The result is a quantized deployment model with a reduced number of unique values as well as a significant number of sparse weights, depending on the target sparsity specified at training time. Beyond the significant model compression advantages, specific hardware support can take advantage of these sparse, clustered models to significantly reduce inference latency.
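The two properties mentioned above, few unique values and many zeros, are easy to measure. The sketch below assumes a made-up sparse, clustered kernel and a simple symmetric int8 quantizer written for illustration (`quantize_int8_symmetric` and `summarize` are hypothetical helpers). It only shows that a static quantization step can keep both properties; the preserving QAT techniques exist because weights move during fine-tuning, which this sketch does not model.

```python
def quantize_int8_symmetric(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    scale = max(abs(w) for w in weights) / 127
    return [round(w / scale) for w in weights], scale


def summarize(values):
    """Count unique values and the fraction of exact zeros."""
    return {
        "unique_values": len(set(values)),
        "sparsity": sum(1 for v in values if v == 0) / len(values),
    }


# Made-up kernel that is already pruned (40% zeros) and clustered (2 centroids).
kernel = [0.0, 0.0, 0.0, 0.0, -0.145, -0.145, -0.145, -0.145, 0.85, 0.85]

quantized, scale = quantize_int8_symmetric(kernel)
print("float kernel:    ", summarize(kernel))
print("quantized kernel:", summarize(quantized))
```

Zero maps to zero under a symmetric scheme, and equal float values map to equal integers, so neither the sparsity nor the number of unique values gets worse in this toy case.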

Results

Below are some accuracy and compression results we obtained when experimenting with the PQAT and CQAT collaborative optimization paths.

Sparsity preserving quantization aware training (PQAT)

| Model | Items | Baseline | Pruned Model (50% sparsity) | QAT Model | PQAT Model |
|---|---|---|---|---|---|
| DS-CNN-L | FP32 Top-1 accuracy | 95.23% | 94.80% | (Fake INT8) 94.721% | (Fake INT8) 94.128% |
| | INT8 full integer quantization | 94.48% | 93.80% | 94.72% | 94.13% |
| | Compression | 528,128 → 434,879 (17.66%) | 528,128 → 334,154 (36.73%) | 512,224 → 403,261 (21.27%) | 512,032 → 303,997 (40.63%) |
| Mobilenet_v1-224 | FP32 Top-1 accuracy | 70.99% | 70.11% | (Fake INT8) 70.67% | (Fake INT8) 70.29% |
| | INT8 full integer quantization | 69.37% | 67.82% | 70.67% | 70.29% |
| | Compression | 4,665,520 → 3,880,331 (16.83%) | 4,665,520 → 2,939,734 (37.00%) | 4,569,416 → 3,808,781 (16.65%) | 4,569,416 → 2,869,600 (37.20%) |
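The percentages in the Compression rows appear to be the relative size reduction, (before − after) / before. The short check below reproduces the DS-CNN-L row under that assumption. The table does not state the unit of the two numbers or how the compressed size was produced, so the function is only an arithmetic illustration.

```python
def compression_percent(before, after):
    """Relative size reduction in percent."""
    return 100 * (before - after) / before


# DS-CNN-L figures copied from the Compression row of the PQAT table.
ds_cnn_l = {
    "Baseline": (528_128, 434_879),
    "Pruned (50% sparsity)": (528_128, 334_154),
    "QAT": (512_224, 403_261),
    "PQAT": (512_032, 303_997),
}

for name, (before, after) in ds_cnn_l.items():
    print(f"{name}: {compression_percent(before, after):.2f}%")
```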

Cluster preserving quantization aware training (CQAT)

| Model | Items | Baseline | Clustered Model | QAT Model | CQAT Model |
|---|---|---|---|---|---|
| Mobilenet_v1 on CIFAR-10 | FP32 Top-1 accuracy | 94.88% | 94.48% | (Fake INT8) 94.80% | (Fake INT8) 94.60% |
| | INT8 full integer quantization | 94.65% | 94.41% | 94.77% | 94.52% |
| | Size | 3.00 MB | 2.00 MB | 2.84 MB | 1.94 MB |
| Mobilenet_v1 on ImageNet | FP32 Top-1 accuracy | 71.07% | 65.30% | (Fake INT8) 70.39% | (Fake INT8) 65.35% |
| | INT8 full integer quantization | 69.34% | 60.60% | 70.35% | 65.42% |
| | Compression | 4,665,568 → 3,886,277 (16.7%) | 4,665,568 → 3,035,752 (34.9%) | 4,569,416 → 3,804,871 (16.7%) | 4,569,472 → 2,912,655 (36.25%) |

CQAT and PCQAT results for models clustered per channel

The results below were obtained with the per-channel clustering technique. They show that if the convolutional layers of a model are clustered per channel, the model accuracy is higher. If your model has many convolutional layers, we recommend clustering per channel. The compression ratio remains the same, but the model accuracy will be higher. In our experiments, the model optimization pipeline was 'clustered -> cluster preserving QAT -> post-training quantization, int8'.

| Model | Clustered -> CQAT, int8 quantized | Clustered per channel -> CQAT, int8 quantized |
|---|---|---|
| DS-CNN-L | 95.949% | 96.44% |
| MobileNet-V2 | 71.538% | 72.638% |
| MobileNet-V2 (pruned) | 71.45% | 71.901% |
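One intuition for why per-channel clustering can help accuracy is that channels with very different weight ranges no longer have to share centroids. The sketch below is a toy illustration in plain Python, not the library's algorithm: it assumes a layer represented as a list of channels, a simple 1-D k-means with linearly spaced initial centroids, and made-up weights. It compares reconstruction error only and says nothing about compression ratio.

```python
def kmeans_1d(values, k, iters=20):
    """Plain 1-D k-means with linearly spaced initial centroids (needs k >= 2)."""
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            buckets[nearest].append(v)
        # Move each centroid to the mean of its bucket; empty buckets stay put.
        centroids = [sum(b) / len(b) if b else c
                     for b, c in zip(buckets, centroids)]
    return centroids


def snap_all(values, centroids):
    """Replace every weight with its nearest centroid."""
    return [min(centroids, key=lambda c: abs(v - c)) for v in values]


def squared_error(original, clustered):
    return sum((a - b) ** 2 for a, b in zip(original, clustered))


# Made-up layer with two channels whose weights live on different scales.
layer = [
    [0.01, 0.02, 0.03, 0.04],
    [1.0, 1.1, 3.0, 3.1],
]
k = 2

# Per-layer clustering: one set of centroids shared by all channels.
flat = [w for channel in layer for w in channel]
shared_centroids = kmeans_1d(flat, k)
per_layer_error = sum(
    squared_error(channel, snap_all(channel, shared_centroids))
    for channel in layer
)

# Per-channel clustering: each channel gets its own centroids.
per_channel_error = sum(
    squared_error(channel, snap_all(channel, kmeans_1d(channel, k)))
    for channel in layer
)

print("per-layer squared error:  ", per_layer_error)
print("per-channel squared error:", per_channel_error)
```

With these toy weights the shared centroids are dominated by the large-valued channel, so the small-valued channel is reconstructed poorly, while per-channel centroids fit both.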

Examples

For end-to-end examples of the collaborative optimization techniques described here, please refer to the CQAT, PQAT, sparsity preserving clustering, and PCQAT example notebooks.