
Noise is present in modern-day quantum computers. Qubits are susceptible to interference from the surrounding environment, imperfect fabrication, two-level systems (TLS), and sometimes even gamma rays. Until large-scale error correction is reached, today's algorithms must remain functional in the presence of noise. This makes testing algorithms under noise an important step in validating that quantum algorithms and models will work on the quantum computers of today.

In this tutorial, you will explore the basics of noisy circuit simulation in TensorFlow Quantum (TFQ) via the high-level tfq.layers API.

Setup

Install TensorFlow and TensorFlow Quantum:

```bash
# In Colab, you will be asked to restart the session after this finishes.
pip install tensorflow==2.16.2 tensorflow-quantum==0.7.5
```

```python
# Update package resources to account for version changes.
import importlib, pkg_resources
importlib.reload(pkg_resources)
```


Configure the use of Keras 2:

```python
# Keras 2 must be selected before importing TensorFlow or TensorFlow Quantum:
import os
os.environ["TF_USE_LEGACY_KERAS"] = "1"
```

Install TensorFlow Docs so that we can use the plotting functions:

```bash
pip install -q git+https://github.com/tensorflow/docs
```

Now import TensorFlow, TensorFlow Quantum, and the other modules you need:

```python
import random
import cirq
import sympy
import tensorflow_quantum as tfq
import tensorflow as tf
import numpy as np

# Plotting
import matplotlib.pyplot as plt
import tensorflow_docs as tfdocs
import tensorflow_docs.plots
```


1. Understanding quantum noise

1.1 Basic circuit noise

Noise on a quantum computer affects the bitstring samples you can measure from it. An intuitive way to start thinking about this is that a noisy quantum computer will "insert", "delete", or "replace" gates in random places, as in the diagram below:

Building on this intuition, once noise is involved you are no longer working with a single pure state \(|\psi \rangle\). Instead, you are dealing with an ensemble of all possible noisy realizations of your desired circuit: \(\rho = \sum_j p_j |\psi_j \rangle \langle \psi_j |\), where \(p_j\) gives the probability that the system is in \(|\psi_j \rangle\).

Revisiting the above picture, if we knew beforehand that 90% of the time our system executed perfectly, or errored 10% of the time with just this one mode of failure, then our ensemble would be:

\(\rho = 0.9 |\psi_\text{desired} \rangle \langle \psi_\text{desired}| + 0.1 |\psi_\text{noisy} \rangle \langle \psi_\text{noisy}| \)

If there were more than one way for the circuit to error, the ensemble \(\rho\) would contain more than two terms, one for each noisy realization that could occur. \(\rho\) is referred to as the density matrix describing your noisy system.
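To make the ensemble picture concrete, the NumPy sketch below builds \(\rho\) for the 90%/10% example. It assumes, purely for illustration, that \(|\psi_\text{desired}\rangle = |11\rangle\) and that the single failure mode leaves the system in \(|\psi_\text{noisy}\rangle = |10\rangle\). The helpers `ket` and `density_matrix` are hypothetical names. The diagonal of \(\rho\) gives the probability of sampling each bitstring. A purity \(\mathrm{Tr}(\rho^2)\) below 1 signals a mixed state.

```python
import numpy as np

def ket(bits):
    """Computational-basis state vector for a bitstring such as '11'."""
    vec = np.zeros(2 ** len(bits), dtype=complex)
    vec[int(bits, 2)] = 1.0  # leftmost character is the most significant bit
    return vec

def density_matrix(ensemble):
    """Build rho = sum_j p_j |psi_j><psi_j| from (probability, state) pairs."""
    dim = len(ensemble[0][1])
    rho = np.zeros((dim, dim), dtype=complex)
    for p, psi in ensemble:
        rho += p * np.outer(psi, psi.conj())
    return rho

# Hypothetical ensemble: 90% desired |11>, 10% noisy |10>.
rho = density_matrix([(0.9, ket('11')), (0.1, ket('10'))])

print(np.real(np.diag(rho)))         # sampling probabilities for 00, 01, 10, 11
print(np.real(np.trace(rho)))        # a valid density matrix has unit trace
print(np.real(np.trace(rho @ rho)))  # purity: 1 for a pure state, < 1 when mixed
```

Here the purity is \(0.9^2 + 0.1^2 = 0.82\). It would only reach 1 if a single term carried all the probability.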

1.2 Using channels to model circuit noise

Unfortunately, in practice it's nearly impossible to know all the ways your circuit might error and their exact probabilities. A simplifying assumption you can make is that after each operation in your circuit there is some kind of channel that roughly captures how that operation might error. You can quickly create a circuit with some noise:

```python
def x_circuit(qubits):
    """Produces an X wall circuit on `qubits`."""
    return cirq.Circuit(cirq.X.on_each(*qubits))


def make_noisy(circuit, p):
    """Add a depolarization channel to all qubits in `circuit` before measurement."""
    return circuit + cirq.Circuit(
        cirq.depolarize(p).on_each(*circuit.all_qubits()))


my_qubits = cirq.GridQubit.rect(1, 2)
my_circuit = x_circuit(my_qubits)
my_noisy_circuit = make_noisy(my_circuit, 0.5)
```

```python
my_circuit
```

```python
my_noisy_circuit
```

You can examine the noiseless density matrix \(\rho\) with:

```python
rho = cirq.final_density_matrix(my_circuit)
np.round(rho, 3)
```

```
array([[0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],
       [0.+0.j, 0.+0.j, 0.+0.j, 1.+0.j]], dtype=complex64)
```

And the noisy density matrix \(\rho\) with:

```python
rho = cirq.final_density_matrix(my_noisy_circuit)
np.round(rho, 3)
```

```
array([[0.111+0.j, 0. +0.j, 0. +0.j, 0. +0.j],
       [0. +0.j, 0.222+0.j, 0. +0.j, 0. +0.j],
       [0. +0.j, 0. +0.j, 0.222+0.j, 0. +0.j],
       [0. +0.j, 0. +0.j, 0. +0.j, 0.444+0.j]], dtype=complex64)
```
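You can check the noisy diagonal above by hand. cirq.depolarize(p) applies X, Y, or Z, each with probability \(p/3\), and does nothing with probability \(1-p\). Starting from \(|1\rangle\), X and Y flip the qubit to \(|0\rangle\), while Z only adds a phase. So each qubit independently reads 0 with probability \(2p/3\).

The pure-Python sketch below multiplies these per-qubit probabilities. The function name is hypothetical. For \(p = 0.5\) it gives 1/9, 2/9, 2/9, and 4/9, which agree with the rounded matrix.

```python
from itertools import product

def depolarized_ones_probs(n_qubits, p):
    """Bitstring probabilities after depolarize(p) acts on each qubit of |1...1>.

    X and Y (total probability 2p/3) flip |1> to |0>; Z and the identity
    leave the measured bit at 1. Each qubit is affected independently.
    """
    flip = 2 * p / 3
    probs = {}
    for bits in product('01', repeat=n_qubits):
        prob = 1.0
        for b in bits:
            prob *= flip if b == '0' else 1 - flip
        probs[''.join(bits)] = prob
    return probs

for bitstring, prob in depolarized_ones_probs(2, 0.5).items():
    print(bitstring, round(prob, 3))
```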

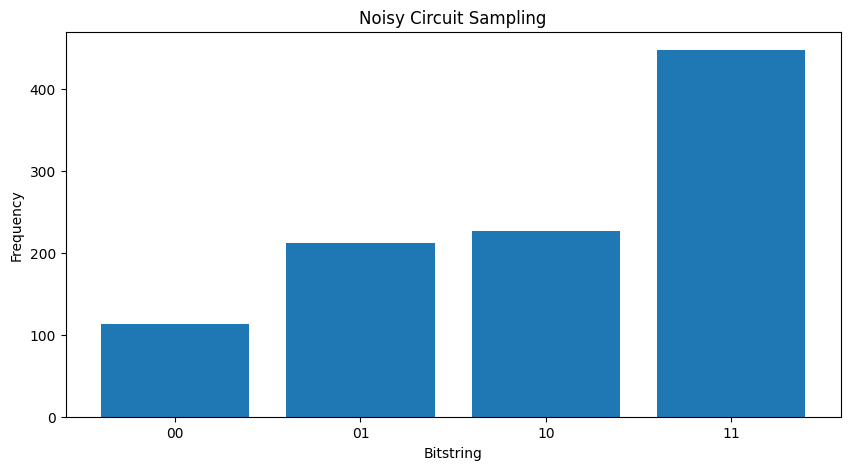

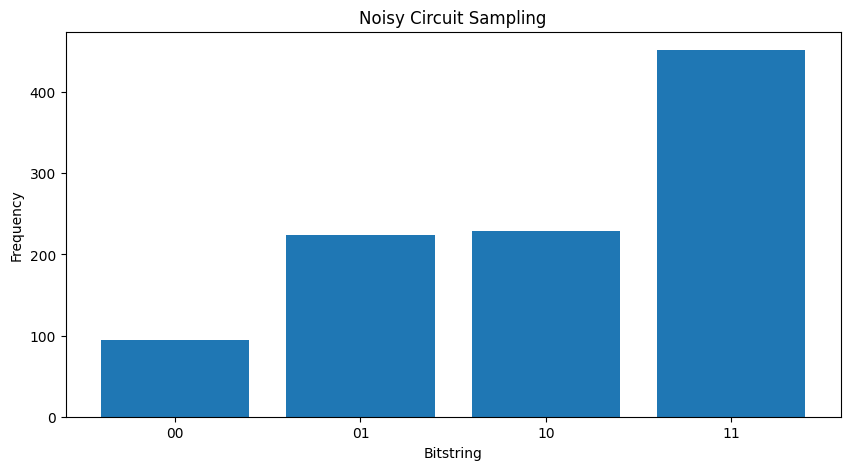

Comparing the two \(\rho\)'s, you can see that the noise has changed the diagonal entries of the density matrix, which are the probabilities of measuring each bitstring. In the noiseless case you would always expect to sample the \(|11\rangle\) state. In the noisy state there is now also a nonzero probability of sampling \(|00\rangle\), \(|01\rangle\), or \(|10\rangle\):

```python
"""Sample from my_noisy_circuit."""
def plot_samples(circuit):
    # all_qubits() returns a set, so sort it to get a deterministic bit order.
    qubits = sorted(circuit.all_qubits())
    samples = cirq.sample(circuit + cirq.measure(*qubits, key='bits'),
                          repetitions=1000)
    # Each shot in the 'bits' column is packed into an integer,
    # with the first measured qubit as the most significant bit.
    freqs, _ = np.histogram(
        samples.data['bits'],
        bins=[i + 0.01 for i in range(-1, 2**len(qubits))])
    plt.figure(figsize=(10, 5))
    plt.title('Noisy Circuit Sampling')
    plt.xlabel('Bitstring')
    plt.ylabel('Frequency')
    plt.bar([i for i in range(2**len(qubits))],
            freqs,
            tick_label=['00', '01', '10', '11'])


plot_samples(my_noisy_circuit)
```

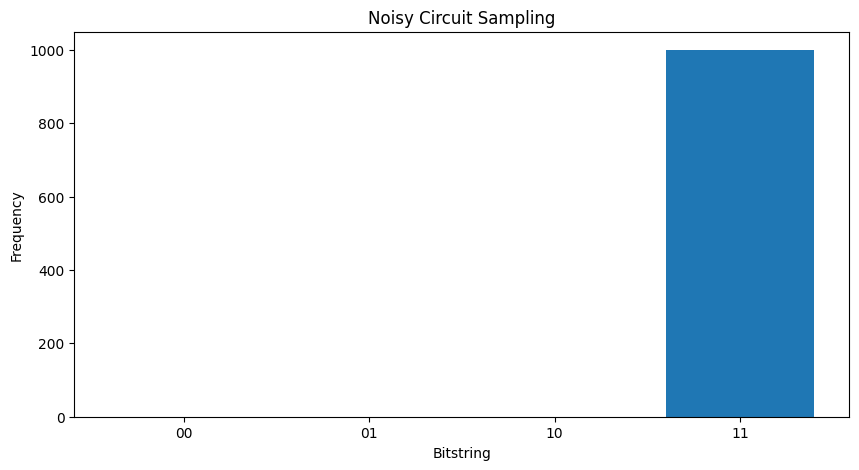

Without any noise you will always get \(|11\rangle\):

```python
"""Sample from my_circuit."""
plot_samples(my_circuit)
```

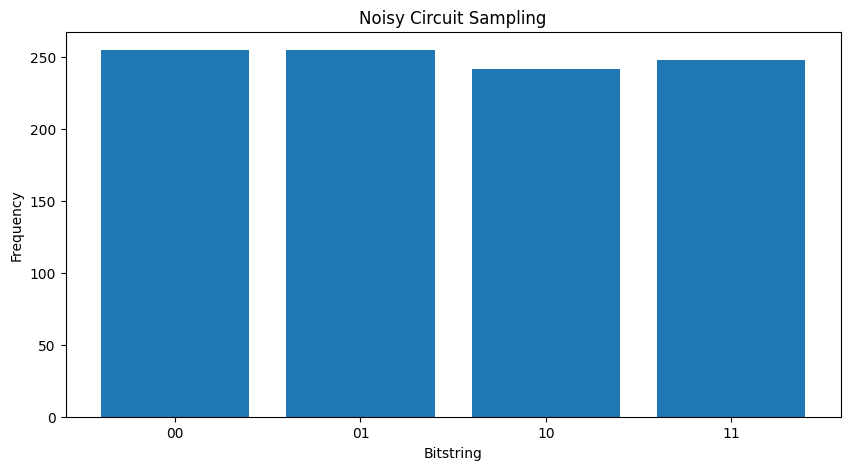

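As you increase the noise further, it becomes harder and harder to distinguish the desired behavior (sampling \(|11\rangle\)) from the noise. At \(p = 0.75\), cirq.depolarize maps every single-qubit state to the maximally mixed state, so all four bitstrings become equally likely: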

```python
my_really_noisy_circuit = make_noisy(my_circuit, 0.75)
plot_samples(my_really_noisy_circuit)
```
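Why is \(p = 0.75\) the breaking point? Write the channel as \(\rho \to (1-p)\rho + \frac{p}{3}(X\rho X + Y\rho Y + Z\rho Z)\). For any single-qubit \(\rho\), the identity \(\frac{1}{4}(\rho + X\rho X + Y\rho Y + Z\rho Z) = \mathrm{Tr}(\rho)\, I/2\) holds. At \(p = 3/4\) the channel is exactly this average, so every input collapses to \(I/2\).

The NumPy sketch below applies the Pauli-mixture form of the channel to \(|1\rangle\langle 1|\) and to a random pure state. `depolarize_rho` is a hand-written helper, not a Cirq API.

```python
import numpy as np

I = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def depolarize_rho(rho, p):
    """Single-qubit depolarizing channel: X, Y or Z each with probability p/3."""
    return (1 - p) * rho + (p / 3) * sum(P @ rho @ P.conj().T for P in (X, Y, Z))

one = np.array([[0, 0], [0, 1]], dtype=complex)  # |1><1|

# A random pure state, to show the p = 0.75 result does not depend on the input.
rng = np.random.default_rng(0)
psi = rng.normal(size=2) + 1j * rng.normal(size=2)
psi /= np.linalg.norm(psi)
random_state = np.outer(psi, psi.conj())

for p in (0.5, 0.75):
    print(p, np.round(depolarize_rho(one, p).real, 3).tolist())
print(np.allclose(depolarize_rho(random_state, 0.75), I / 2))
```

At \(p = 0.5\) the populations are 1/3 and 2/3. At \(p = 0.75\) both become 1/2, whatever state went in.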

2. Basic noise in TFQ

With this understanding of how noise can impact circuit execution, you can explore how noise works in TFQ. TensorFlow Quantum uses Monte Carlo (trajectory-based) simulation as an alternative to density matrix simulation. A density matrix for \(n\) qubits has \(4^n\) entries, compared with \(2^n\) for a state vector. This memory cost limits traditional full density matrix simulation to roughly 20 qubits or fewer. Trajectory simulation trades this memory cost for additional computation time. The backend='noisy' option is available for tfq.layers.Sample, tfq.layers.SampledExpectation, and tfq.layers.Expectation. For Expectation, it also adds a required repetitions parameter.
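Here is the idea behind trajectory simulation, sketched in NumPy. Each trajectory evolves an ordinary pure state, and every noise channel is replaced by one randomly chosen outcome: with probability \(p\) a uniformly random Pauli, otherwise nothing. Averaging an observable over many trajectories converges to the density-matrix value.

This illustrates the technique under those assumptions; it is not TFQ's implementation. The sketch estimates \(\langle Z \rangle\) for one qubit prepared in \(|1\rangle\) and then depolarized with \(p = 0.5\). The exact value is \(-1 + 4p/3 = -1/3\).

```python
import numpy as np

PAULIS = [
    np.array([[0, 1], [1, 0]], dtype=complex),     # X
    np.array([[0, -1j], [1j, 0]], dtype=complex),  # Y
    np.array([[1, 0], [0, -1]], dtype=complex),    # Z
]
Z = PAULIS[2]

def trajectory_expectation(p, n_trajectories, seed=0):
    """Monte Carlo estimate of <Z> after depolarize(p) acts on |1>."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(n_trajectories):
        psi = np.array([0, 1], dtype=complex)    # start each trajectory in |1>
        if rng.random() < p:                     # an error occurs ...
            psi = PAULIS[rng.integers(3)] @ psi  # ... so apply X, Y or Z uniformly
        total += np.real(psi.conj() @ Z @ psi)   # exact <Z> for this pure state
    return total / n_trajectories

estimate = trajectory_expectation(p=0.5, n_trajectories=20000)
print(estimate, "vs exact", -1 + 4 * 0.5 / 3)
```

Only a 2-entry state vector is stored per trajectory. The price is that the estimate carries statistical error, which shrinks like \(1/\sqrt{\text{trajectories}}\).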

2.1 Noisy sampling in TFQ

To recreate the above plots using TFQ and trajectory simulation, you can use tfq.layers.Sample:

```python
"""Draw bitstring samples from `my_noisy_circuit`"""
bitstrings = tfq.layers.Sample(backend='noisy')(my_noisy_circuit,
                                                repetitions=1000)

# Pack each shot's bits into one integer, weighting the first qubit as the
# most significant bit so that a tick label such as '01' reads (q0, q1).
numeric_values = np.einsum('ijk,k->ij',
                           bitstrings.to_tensor().numpy(), [2, 1])[0]

freqs, _ = np.histogram(numeric_values,
                        bins=[i + 0.01 for i in range(-1, 2**len(my_qubits))])
plt.figure(figsize=(10, 5))
plt.title('Noisy Circuit Sampling')
plt.xlabel('Bitstring')
plt.ylabel('Frequency')
plt.bar([i for i in range(2**len(my_qubits))],
        freqs,
        tick_label=['00', '01', '10', '11'])
```

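The einsum call collapses the qubit axis of the sample tensor (circuits × repetitions × qubits) into one integer per shot. It does this by taking a dot product with a weight vector. The order of the weights decides which qubit becomes the most significant bit:

- [2, 1] treats the first qubit as the leftmost bit.
- [1, 2] treats it as the rightmost bit.

The toy array below is made-up data, used only to show the two conventions side by side.

```python
import numpy as np

# Hypothetical samples: 1 circuit, 3 shots, 2 qubits (first qubit listed first).
toy_bitstrings = np.array([[[0, 1], [1, 0], [1, 1]]])

big_endian = np.einsum('ijk,k->ij', toy_bitstrings, [2, 1])[0]
little_endian = np.einsum('ijk,k->ij', toy_bitstrings, [1, 2])[0]

print(big_endian.tolist())     # first qubit is the most significant bit
print(little_endian.tolist())  # first qubit is the least significant bit
```

For this particular circuit both conventions give the same histogram, because \(|01\rangle\) and \(|10\rangle\) are equally likely. The difference matters for asymmetric distributions.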

2.2 Noisy sample based expectation

To compute noisy sample-based expectation values, you can use tfq.layers.SampledExpectation:

```python
some_observables = [
    cirq.X(my_qubits[0]),
    cirq.Z(my_qubits[0]),
    3.0 * cirq.Y(my_qubits[1]) + 1
]
some_observables
```

```
[cirq.X(cirq.GridQubit(0, 0)),
 cirq.Z(cirq.GridQubit(0, 0)),
 cirq.PauliSum(cirq.LinearDict({frozenset({(cirq.GridQubit(0, 1), cirq.Y)}): (3+0j), frozenset(): (1+0j)}))]
```

Compute the noiseless expectation estimates via sampling from the circuit:

```python
noiseless_sampled_expectation = tfq.layers.SampledExpectation(
    backend='noiseless')(my_circuit,
                         operators=some_observables,
                         repetitions=10000)
noiseless_sampled_expectation.numpy()
```

```
array([[ 0.0102, -1. , 0.9688]], dtype=float32)
```
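The sampled estimates differ from the exact values for \(|11\rangle\) (0, -1, and 1) because of shot noise. For an observable \(O\) estimated from \(N\) repetitions, the standard error is roughly \(\sqrt{\mathrm{Var}(O)/N}\), where \(\mathrm{Var}(O) = \langle O^2\rangle - \langle O\rangle^2\).

The short calculation below applies this to the three observables, assuming the ideal state \(|11\rangle\). It suggests deviations of about 0.01 for X, exactly 0 for Z (every shot returns -1), and about 0.03 for 3.0 * Y + 1. The deviations seen above have the same order of magnitude.

```python
import math

def standard_error(mean, mean_of_square, repetitions):
    """Approximate standard error of a sample-mean estimate of <O>."""
    variance = mean_of_square - mean ** 2
    return math.sqrt(variance / repetitions)

N = 10000
# Exact moments for the state |11>; every Pauli squares to the identity.
moments = {
    'X(q0)':    (0.0, 1.0),   # <X> = 0, <X^2> = 1
    'Z(q0)':    (-1.0, 1.0),  # <Z> = -1, <Z^2> = 1
    '3Y(q1)+1': (1.0, 10.0),  # (3Y+1)^2 = 10 + 6Y, so <(3Y+1)^2> = 10
}
for name, (mean, mean_sq) in moments.items():
    print(name, round(standard_error(mean, mean_sq, N), 4))
```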

Compare those with the noisy versions:

```python
noisy_sampled_expectation = tfq.layers.SampledExpectation(backend='noisy')(
    [my_noisy_circuit, my_really_noisy_circuit],
    operators=some_observables,
    repetitions=10000)
noisy_sampled_expectation.numpy()
```


```
array([[ 2.0000013e-04, -3.2679999e-01, 9.9640000e-01],
       [ 4.0000019e-04, 8.3999997e-03, 1.0228001e+00]], dtype=float32)
```

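You can see that the noise has mostly affected the \(\langle Z \rangle\) estimate on the first qubit. It moves from -1 to about -0.33 for my_noisy_circuit and concentrates toward 0 for my_really_noisy_circuit. The X and 3.0 * Y + 1 estimates barely change. Depolarizing noise leaves the off-diagonal entries of \(\rho\) at zero, so their values (0 and 1) match the noiseless ones.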
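You can predict these noisy values from the density matrix, because for a mixed state the expectation value is \(\langle O \rangle = \mathrm{Tr}(\rho O)\). In this circuit each qubit ends up in \(\mathrm{diag}(2p/3,\ 1 - 2p/3)\). So \(\langle Z \rangle\) on the first qubit is \(4p/3 - 1\), which is -1/3 for \(p = 0.5\) and 0 for \(p = 0.75\).

The NumPy sketch below checks this. It assumes the first qubit is the left Kronecker factor, mirroring Cirq's big-endian ordering.

```python
import numpy as np

I = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def noisy_rho(p):
    """Two-qubit state after X on each qubit followed by depolarize(p) on each."""
    flip = 2 * p / 3
    single = np.diag([flip, 1 - flip]).astype(complex)
    return np.kron(single, single)

observables = {
    'X(q0)': np.kron(X, I),
    'Z(q0)': np.kron(Z, I),
    '3Y(q1)+1': 3 * np.kron(I, Y) + np.eye(4),
}
for p in (0.5, 0.75):
    rho_p = noisy_rho(p)
    values = {name: round(float(np.trace(rho_p @ op).real), 4)
              for name, op in observables.items()}
    print(p, values)
```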

2.3 Noisy analytic expectation calculation

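Noisy analytic expectation calculations look nearly identical to the sampled ones above. With backend='noisy', however, the result is still an average over repetitions noisy trajectories. The noisy values are therefore estimates as well (for example, -0.3324 rather than exactly -1/3):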

```python
noiseless_analytic_expectation = tfq.layers.Expectation(backend='noiseless')(
    my_circuit, operators=some_observables)
noiseless_analytic_expectation.numpy()
```

```
array([[ 1.9106853e-15, -1.0000000e+00, 1.0000002e+00]], dtype=float32)
```

```python
noisy_analytic_expectation = tfq.layers.Expectation(backend='noisy')(
    [my_noisy_circuit, my_really_noisy_circuit],
    operators=some_observables,
    repetitions=10000)
noisy_analytic_expectation.numpy()
```

```
array([[ 1.9106846e-15, -3.3239999e-01, 1.0000000e+00],
       [ 1.9106857e-15, -1.6000003e-02, 1.0000000e+00]], dtype=float32)
```

3. Hybrid models and quantum data noise

Now that you have implemented some noisy circuit simulations in TFQ, you can experiment with how noise affects quantum and hybrid quantum-classical models by comparing their noisy and noiseless performance. A good first check of whether a model or algorithm is robust to noise is to test it under a circuit-wide depolarizing model, which looks something like this:

Here, each time slice of the circuit (sometimes referred to as a moment) is followed by a depolarizing channel on every qubit. The depolarizing channel applies X, Y, or Z, each with probability \(p/3\), so some error occurs with total probability \(p\). With probability \(1-p\) it applies nothing, leaving the original operation unchanged.
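Why does even a small \(p\) matter in deep circuits? For a single qubit, one application of depolarize(p) shrinks the Bloch vector (the \(\langle X\rangle, \langle Y\rangle, \langle Z\rangle\) components) by the factor \(1 - 4p/3\). The channel commutes with single-qubit unitaries, so \(d\) noisy moments shrink it by \((1 - 4p/3)^d\).

The pure-Python sketch below evaluates this factor. It ignores entangling gates, which can spread errors between qubits. Treat it as an idealized single-qubit estimate, not a prediction for the models in this tutorial.

```python
def bloch_shrink_factor(p, depth):
    """Factor by which `depth` rounds of depolarize(p) scale one qubit's Bloch vector."""
    return (1 - 4 * p / 3) ** depth

for p in (0.001, 0.01, 0.5, 0.75):
    factors = [round(bloch_shrink_factor(p, d), 4) for d in (1, 10, 50)]
    print(f"p={p}: depth 1, 10, 50 ->", factors)
```

With \(p = 0.5\) and depth 1, the factor is 1/3, which matches the \(\langle Z \rangle \approx -0.33\) seen earlier. With \(p = 0.75\) it is already 0 after a single moment.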

3.1 Data

For this example you can use some prepared circuits in the tfq.datasets module as training data:

```python
qubits = cirq.GridQubit.rect(1, 8)
circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')
circuits[0]
```

```
Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/quantum/spin_systems/XXZ_chain.zip
184449737/184449737 [==============================] - 1s 0us/step
```

A small helper function generates the data for both the noisy and noiseless cases:

```python
def get_data(qubits, depolarize_p=0.):
    """Return quantum data circuits and labels in `tf.Tensor` form."""
    circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')
    if depolarize_p >= 1e-5:
        # Add a depolarizing channel on every qubit after each moment.
        circuits = [
            circuit.with_noise(cirq.depolarize(depolarize_p))
            for circuit in circuits
        ]
    # Shuffle circuits and labels together so each pair stays aligned.
    tmp = list(zip(circuits, labels))
    random.shuffle(tmp)
    circuits_tensor = tfq.convert_to_tensor([x[0] for x in tmp])
    labels_tensor = tf.convert_to_tensor([x[1] for x in tmp])

    return circuits_tensor, labels_tensor
```
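The shuffle in get_data matters because Keras's validation_split does not shuffle: it holds out the last fraction of the samples as given. The training logs later in this tutorial show 4 batches of 16 (64 training examples) and validation accuracies in multiples of 1/12. That is consistent with 76 examples split as \(\lfloor 76 \times 0.85 \rfloor = 64\) training and 12 validation examples.

The pure-Python sketch below mimics that split. The data is placeholder strings with deliberately sorted labels, not the real dataset. It checks that shuffling the zipped pairs keeps each input with its label.

```python
import math
import random

def shuffle_and_split(inputs, labels, validation_split, seed=None):
    """Shuffle (input, label) pairs together, then hold out the last fraction."""
    pairs = list(zip(inputs, labels))
    random.Random(seed).shuffle(pairs)
    split_at = int(math.floor(len(pairs) * (1.0 - validation_split)))
    return pairs[:split_at], pairs[split_at:]

# Placeholder data: 76 stand-in "circuits" with sorted labels.
placeholder_inputs = [f"circuit_{i}" for i in range(76)]
placeholder_labels = [0] * 38 + [1] * 38

train, val = shuffle_and_split(placeholder_inputs, placeholder_labels,
                               validation_split=0.15, seed=1)
print(len(train), len(val))
```

Without the shuffle, all 12 held-out examples in this placeholder data would carry label 1.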

3.2 Define a model circuit

Now that you have quantum data in the form of circuits, you need a circuit to model this data. As with the data, you can write a helper function that generates this circuit, optionally with noise:

```python
def modelling_circuit(qubits, depth, depolarize_p=0.):
    """A simple classifier circuit."""
    dim = len(qubits)
    ret = cirq.Circuit(cirq.H.on_each(*qubits))

    for i in range(depth):
        # Entangle layer.
        ret += cirq.Circuit(
            cirq.CX(q1, q2) for (q1, q2) in zip(qubits[::2], qubits[1::2]))
        ret += cirq.Circuit(
            cirq.CX(q1, q2) for (q1, q2) in zip(qubits[1::2], qubits[2::2]))

        # Learnable rotation layer.
        # i_params = sympy.symbols(f'layer-{i}-0:{dim}')
        param = sympy.Symbol(f'layer-{i}')
        single_qb = cirq.X
        if i % 2 == 1:
            single_qb = cirq.Y
        ret += cirq.Circuit(single_qb(q)**param for q in qubits)

    if depolarize_p >= 1e-5:
        ret = ret.with_noise(cirq.depolarize(depolarize_p))

    return ret, [op(q) for q in qubits for op in [cirq.X, cirq.Y, cirq.Z]]
```

```python
modelling_circuit(qubits, 3)[0]
```
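The two entangling layers in modelling_circuit use list slicing to build a brickwork pattern:

- zip(qubits[::2], qubits[1::2]) pairs (0, 1), (2, 3), and so on.
- zip(qubits[1::2], qubits[2::2]) pairs (1, 2), (3, 4), and so on.

The pure-Python sketch below prints these pairs for eight qubits, using integers as stand-ins for the cirq.GridQubit objects. No qubit appears twice within a layer. Also note that the last qubit is never coupled back to the first.

```python
qubit_indices = list(range(8))  # stand-ins for cirq.GridQubit.rect(1, 8)

even_pairs = list(zip(qubit_indices[::2], qubit_indices[1::2]))
odd_pairs = list(zip(qubit_indices[1::2], qubit_indices[2::2]))

print(even_pairs)  # first CX layer
print(odd_pairs)   # second CX layer
```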

3.3 Model building and training

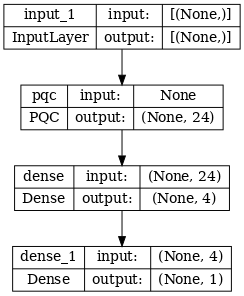

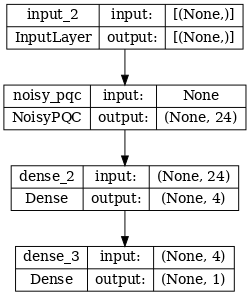

With your data and model circuit built, the final helper function you need assembles either a noisy or a noiseless hybrid quantum tf.keras.Model:

```python
def build_keras_model(qubits, depolarize_p=0.):
    """Prepare a noisy or noiseless hybrid quantum-classical Keras model."""
    spin_input = tf.keras.Input(shape=(), dtype=tf.dtypes.string)

    circuit_and_readout = modelling_circuit(qubits, 4, depolarize_p)
    if depolarize_p >= 1e-5:
        # Noisy circuits need the trajectory-based NoisyPQC layer;
        # repetitions sets how many noisy trajectories are averaged.
        quantum_model = tfq.layers.NoisyPQC(*circuit_and_readout,
                                            sample_based=False,
                                            repetitions=10)(spin_input)
    else:
        quantum_model = tfq.layers.PQC(*circuit_and_readout)(spin_input)

    intermediate = tf.keras.layers.Dense(4, activation='sigmoid')(quantum_model)
    # A single unnormalized logit per example.
    post_process = tf.keras.layers.Dense(1)(intermediate)

    return tf.keras.Model(inputs=[spin_input], outputs=[post_process])
```
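A quick count of trainable parameters helps when reading the model plot. The sketch below rests on three assumptions, consistent with how PQC and NoisyPQC are used here:

- one trainable weight per sympy symbol in the model circuit (four symbols, layer-0 to layer-3, for depth 4);
- 3 × 8 = 24 readout expectation values;
- standard Dense layers with biases.

These numbers are derived by hand, not read from Keras output.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

n_qubits, depth = 8, 4
quantum_params = depth     # one shared symbol per layer: layer-0 ... layer-3
readouts = 3 * n_qubits    # X, Y and Z expectation values on every qubit
classical_params = dense_params(readouts, 4) + dense_params(4, 1)

print(quantum_params, classical_params, quantum_params + classical_params)
```

Under these assumptions, the quantum circuit contributes only 4 of the 109 trainable parameters. Most of the flexibility sits in the small classical head.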

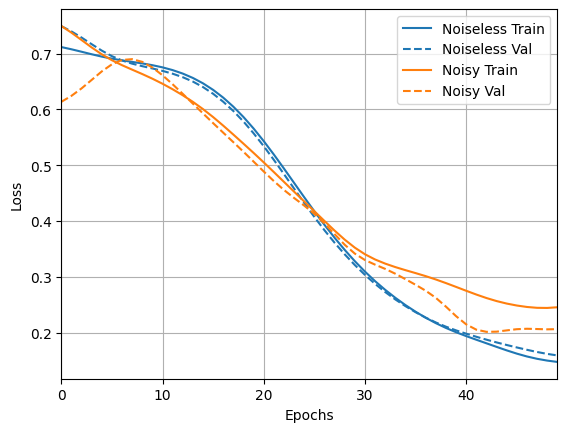

4. Compare performance

4.1 Noiseless baseline

With your data generation and model building code in place, you can now compare model performance in the noiseless and noisy settings. First, run a reference noiseless training:

```python
training_histories = dict()
depolarize_p = 0.
n_epochs = 50
phase_classifier = build_keras_model(qubits, depolarize_p)

phase_classifier.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),
    loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
    metrics=['accuracy'])


# Show the keras plot of the model
tf.keras.utils.plot_model(phase_classifier, show_shapes=True, dpi=70)
```

```python
noiseless_data, noiseless_labels = get_data(qubits, depolarize_p)

training_histories['noiseless'] = phase_classifier.fit(x=noiseless_data,
                                                       y=noiseless_labels,
                                                       batch_size=16,
                                                       epochs=n_epochs,
                                                       validation_split=0.15,
                                                       verbose=1)
```

```
Epoch 1/50 4/4 [==============================] - 10s 205ms/step - loss: 0.6914 - accuracy: 0.4688 - val_loss: 0.6856 - val_accuracy: 0.5000
Epoch 2/50 4/4 [==============================] - 0s 64ms/step - loss: 0.6741 - accuracy: 0.4688 - val_loss: 0.6790 - val_accuracy: 0.5000
Epoch 3/50 4/4 [==============================] - 0s 59ms/step - loss: 0.6633 - accuracy: 0.4688 - val_loss: 0.6686 - val_accuracy: 0.5000
Epoch 4/50 4/4 [==============================] - 0s 57ms/step - loss: 0.6523 - accuracy: 0.4688 - val_loss: 0.6540 - val_accuracy: 0.5000
Epoch 5/50 4/4 [==============================] - 0s 59ms/step - loss: 0.6397 - accuracy: 0.4688 - val_loss: 0.6417 - val_accuracy: 0.5000
Epoch 6/50 4/4 [==============================] - 0s 56ms/step - loss: 0.6302 - accuracy: 0.4688 - val_loss: 0.6278 - val_accuracy: 0.5000
Epoch 7/50 4/4 [==============================] - 0s 57ms/step - loss: 0.6141 - accuracy: 0.4688 - val_loss: 0.6177 - val_accuracy: 0.5000
Epoch 8/50 4/4 [==============================] - 0s 56ms/step - loss: 0.5982 - accuracy: 0.4688 - val_loss: 0.6057 - val_accuracy: 0.5000
Epoch 9/50 4/4 [==============================] - 0s 55ms/step - loss: 0.5845 - accuracy: 0.5156 - val_loss: 0.5919 - val_accuracy: 0.5000
Epoch 10/50 4/4 [==============================] - 0s 56ms/step - loss: 0.5682 - accuracy: 0.5938 - val_loss: 0.5788 - val_accuracy: 0.6667
Epoch 11/50 4/4 [==============================] - 0s 55ms/step - loss: 0.5505 - accuracy: 0.6250 - val_loss: 0.5631 - val_accuracy: 0.7500
Epoch 12/50 4/4 [==============================] - 0s 56ms/step - loss: 0.5334 - accuracy: 0.6562 - val_loss: 0.5462 - val_accuracy: 0.7500
Epoch 13/50 4/4 [==============================] - 0s 54ms/step - loss: 0.5174 - accuracy: 0.6562 - val_loss: 0.5283 - val_accuracy: 0.7500
Epoch 14/50 4/4 [==============================] - 0s 56ms/step - loss: 0.4979 - accuracy: 0.6719 - val_loss: 0.5118 - val_accuracy: 0.7500
Epoch 15/50 4/4 [==============================] - 0s 57ms/step - loss: 0.4789 - accuracy: 0.7500 - val_loss: 0.4951 - val_accuracy: 0.7500
Epoch 16/50 4/4 [==============================] - 0s 55ms/step - loss: 0.4596 - accuracy: 0.7812 - val_loss: 0.4809 - val_accuracy: 0.8333
Epoch 17/50 4/4 [==============================] - 0s 54ms/step - loss: 0.4417 - accuracy: 0.8125 - val_loss: 0.4636 - val_accuracy: 0.8333
Epoch 18/50 4/4 [==============================] - 0s 56ms/step - loss: 0.4224 - accuracy: 0.8281 - val_loss: 0.4480 - val_accuracy: 0.9167
Epoch 19/50 4/4 [==============================] - 0s 55ms/step - loss: 0.4053 - accuracy: 0.8281 - val_loss: 0.4287 - val_accuracy: 0.9167
Epoch 20/50 4/4 [==============================] - 0s 55ms/step - loss: 0.3862 - accuracy: 0.8281 - val_loss: 0.4126 - val_accuracy: 0.9167
Epoch 21/50 4/4 [==============================] - 0s 55ms/step - loss: 0.3690 - accuracy: 0.8594 - val_loss: 0.3986 - val_accuracy: 0.9167
Epoch 22/50 4/4 [==============================] - 0s 55ms/step - loss: 0.3529 - accuracy: 0.8594 - val_loss: 0.3848 - val_accuracy: 0.9167
Epoch 23/50 4/4 [==============================] - 0s 55ms/step - loss: 0.3364 - accuracy: 0.8750 - val_loss: 0.3675 - val_accuracy: 0.9167
Epoch 24/50 4/4 [==============================] - 0s 56ms/step - loss: 0.3209 - accuracy: 0.8906 - val_loss: 0.3536 - val_accuracy: 0.9167
Epoch 25/50 4/4 [==============================] - 0s 55ms/step - loss: 0.3088 - accuracy: 0.8750 - val_loss: 0.3379 - val_accuracy: 0.9167
Epoch 26/50 4/4 [==============================] - 0s 54ms/step - loss: 0.2936 - accuracy: 0.8906 - val_loss: 0.3292 - val_accuracy: 0.9167
Epoch 27/50 4/4 [==============================] - 0s 54ms/step - loss: 0.2810 - accuracy: 0.9219 - val_loss: 0.3204 - val_accuracy: 0.9167
Epoch 28/50 4/4 [==============================] - 0s 53ms/step - loss: 0.2701 - accuracy: 0.9219 - val_loss: 0.3055 - val_accuracy: 0.9167
Epoch 29/50 4/4 [==============================] - 0s 54ms/step - loss: 0.2585 - accuracy: 0.9219 - val_loss: 0.2995 - val_accuracy: 0.9167
Epoch 30/50 4/4 [==============================] - 0s 55ms/step - loss: 0.2473 - accuracy: 0.9219 - val_loss: 0.2864 - val_accuracy: 0.9167
Epoch 31/50 4/4 [==============================] - 0s 54ms/step - loss: 0.2369 - accuracy: 0.9219 - val_loss: 0.2771 - val_accuracy: 0.9167
Epoch 32/50 4/4 [==============================] - 0s 53ms/step - loss: 0.2293 - accuracy: 0.9375 - val_loss: 0.2728 - val_accuracy: 0.9167
Epoch 33/50 4/4 [==============================] - 0s 54ms/step - loss: 0.2198 - accuracy: 0.9375 - val_loss: 0.2604 - val_accuracy: 0.9167
Epoch 34/50 4/4 [==============================] - 0s 56ms/step - loss: 0.2121 - accuracy: 0.9375 - val_loss: 0.2508 - val_accuracy: 0.9167
Epoch 35/50 4/4 [==============================] - 0s 53ms/step - loss: 0.2046 - accuracy: 0.9375 - val_loss: 0.2461 - val_accuracy: 0.9167
Epoch 36/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1976 - accuracy: 0.9375 - val_loss: 0.2408 - val_accuracy: 0.9167
Epoch 37/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1909 - accuracy: 0.9531 - val_loss: 0.2384 - val_accuracy: 1.0000
Epoch 38/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1868 - accuracy: 0.9531 - val_loss: 0.2271 - val_accuracy: 0.9167
Epoch 39/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1796 - accuracy: 0.9531 - val_loss: 0.2229 - val_accuracy: 0.9167
Epoch 40/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1744 - accuracy: 0.9531 - val_loss: 0.2178 - val_accuracy: 0.9167
Epoch 41/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1704 - accuracy: 0.9531 - val_loss: 0.2204 - val_accuracy: 1.0000
Epoch 42/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1646 - accuracy: 0.9531 - val_loss: 0.2143 - val_accuracy: 1.0000
Epoch 43/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1601 - accuracy: 0.9531 - val_loss: 0.2055 - val_accuracy: 1.0000
Epoch 44/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1572 - accuracy: 0.9531 - val_loss: 0.1962 - val_accuracy: 0.9167
Epoch 45/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1522 - accuracy: 0.9531 - val_loss: 0.1964 - val_accuracy: 1.0000
Epoch 46/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1480 - accuracy: 0.9531 - val_loss: 0.1933 - val_accuracy: 1.0000
Epoch 47/50 4/4 [==============================] - 0s 54ms/step - loss: 0.1451 - accuracy: 0.9531 - val_loss: 0.1930 - val_accuracy: 1.0000
Epoch 48/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1416 - accuracy: 0.9531 - val_loss: 0.1876 - val_accuracy: 1.0000
Epoch 49/50 4/4 [==============================] - 0s 56ms/step - loss: 0.1380 - accuracy: 0.9531 - val_loss: 0.1849 - val_accuracy: 1.0000
Epoch 50/50 4/4 [==============================] - 0s 53ms/step - loss: 0.1349 - accuracy: 0.9531 - val_loss: 0.1813 - val_accuracy: 1.0000
```
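The first-epoch loss of about 0.69 is what binary cross-entropy gives for logits near zero. With from_logits=True, the loss for a logit \(z\) and label \(y\) is \(-[y \log\sigma(z) + (1-y)\log(1-\sigma(z))]\). At \(z = 0\) this equals \(\ln 2 \approx 0.693\) for either label.

The pure-Python sketch below uses the standard numerically stable rearrangement of that formula. It illustrates the math; it is not Keras's implementation.

```python
import math

def bce_from_logits(z, y):
    """Binary cross-entropy for a single logit z and label y in {0, 1}."""
    # Stable form of -[y log sigmoid(z) + (1 - y) log(1 - sigmoid(z))].
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))

print(bce_from_logits(0.0, 0), bce_from_logits(0.0, 1), math.log(2))
print(bce_from_logits(3.0, 1), bce_from_logits(-3.0, 1))  # confident right vs. confident wrong
```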

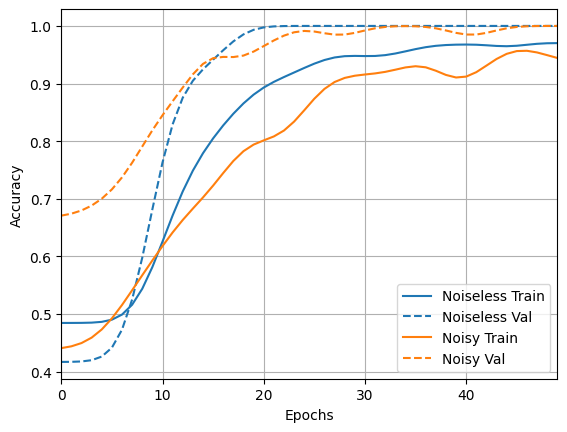

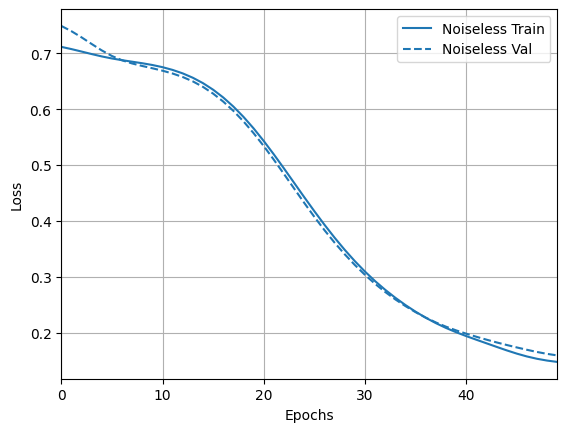

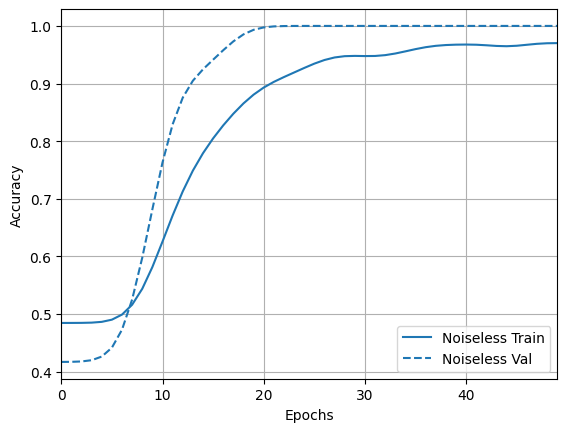

Then plot the loss and accuracy:

```python
loss_plotter = tfdocs.plots.HistoryPlotter(metric='loss', smoothing_std=10)
loss_plotter.plot(training_histories)
```

```python
acc_plotter = tfdocs.plots.HistoryPlotter(metric='accuracy', smoothing_std=10)
acc_plotter.plot(training_histories)
```

4.2 Noisy comparison

Now you can build a new model with a noisy structure and compare it to the one above. The code is nearly identical:

```python
depolarize_p = 0.001
n_epochs = 50
noisy_phase_classifier = build_keras_model(qubits, depolarize_p)

noisy_phase_classifier.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),
    loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
    metrics=['accuracy'])


# Show the keras plot of the model
tf.keras.utils.plot_model(noisy_phase_classifier, show_shapes=True, dpi=70)
```

```python
noisy_data, noisy_labels = get_data(qubits, depolarize_p)

training_histories['noisy'] = noisy_phase_classifier.fit(x=noisy_data,
                                                         y=noisy_labels,
                                                         batch_size=16,
                                                         epochs=n_epochs,
                                                         validation_split=0.15,
                                                         verbose=1)
```

```
Epoch 1/50 4/4 [==============================] - 9s 1s/step - loss: 0.8062 - accuracy: 0.4844 - val_loss: 0.5759 - val_accuracy: 0.7500
Epoch 2/50 4/4 [==============================] - 5s 1s/step - loss: 0.7489 - accuracy: 0.4688 - val_loss: 0.6061 - val_accuracy: 0.2500
Epoch 3/50 4/4 [==============================] - 5s 1s/step - loss: 0.7115 - accuracy: 0.5156 - val_loss: 0.6352 - val_accuracy: 0.2500
Epoch 4/50 4/4 [==============================] - 5s 1s/step - loss: 0.6949 - accuracy: 0.5156 - val_loss: 0.6574 - val_accuracy: 0.2500
Epoch 5/50 4/4 [==============================] - 5s 1s/step - loss: 0.6853 - accuracy: 0.5156 - val_loss: 0.6854 - val_accuracy: 0.2500
Epoch 6/50 4/4 [==============================] - 5s 1s/step - loss: 0.6857 - accuracy: 0.5156 - val_loss: 0.6989 - val_accuracy: 0.2500
Epoch 7/50 4/4 [==============================] - 5s 1s/step - loss: 0.6826 - accuracy: 0.5156 - val_loss: 0.7074 - val_accuracy: 0.2500
Epoch 8/50 4/4 [==============================] - 5s 1s/step - loss: 0.6798 - accuracy: 0.5156 - val_loss: 0.7047 - val_accuracy: 0.2500
Epoch 9/50 4/4 [==============================] - 5s 1s/step - loss: 0.6779 - accuracy: 0.5156 - val_loss: 0.6983 - val_accuracy: 0.2500
Epoch 10/50 4/4 [==============================] - 5s 1s/step - loss: 0.6756 - accuracy: 0.5156 - val_loss: 0.6910 - val_accuracy: 0.2500
Epoch 11/50 4/4 [==============================] - 5s 1s/step - loss: 0.6713 - accuracy: 0.5156 - val_loss: 0.6819 - val_accuracy: 0.2500
Epoch 12/50 4/4 [==============================] - 5s 1s/step - loss: 0.6681 - accuracy: 0.5156 - val_loss: 0.6699 - val_accuracy: 0.2500
Epoch 13/50 4/4 [==============================] - 5s 1s/step - loss: 0.6627 - accuracy: 0.5156 - val_loss: 0.6642 - val_accuracy: 0.2500
Epoch 14/50 4/4 [==============================] - 5s 1s/step - loss: 0.6595 - accuracy: 0.5156 - val_loss: 0.6530 - val_accuracy: 0.2500
Epoch 15/50 4/4 [==============================] - 5s 1s/step - loss: 0.6529 - accuracy: 0.5156 - val_loss: 0.6327 - val_accuracy: 0.2500
Epoch 16/50 4/4 [==============================] - 5s 1s/step - loss: 0.6491 - accuracy: 0.5156 - val_loss: 0.6364 - val_accuracy: 0.2500
Epoch 17/50 4/4 [==============================] - 5s 1s/step - loss: 0.6387 - accuracy: 0.5156 - val_loss: 0.6207 - val_accuracy: 0.2500
Epoch 18/50 4/4 [==============================] - 5s 1s/step - loss: 0.6265 - accuracy: 0.5156 - val_loss: 0.6145 - val_accuracy: 0.2500
Epoch 19/50 4/4 [==============================] - 5s 1s/step - loss: 0.6127 - accuracy: 0.5156 - val_loss: 0.6033 - val_accuracy: 0.2500
Epoch 20/50 4/4 [==============================] - 5s 1s/step - loss: 0.6095 - accuracy: 0.5781 - val_loss: 0.5895 - val_accuracy: 0.2500
Epoch 21/50 4/4 [==============================] - 5s 1s/step - loss: 0.5961 - accuracy: 0.6094 - val_loss: 0.5640 - val_accuracy: 0.3333
Epoch 22/50 4/4 [==============================] - 5s 1s/step - loss: 0.5761 - accuracy: 0.6719 - val_loss: 0.5476 - val_accuracy: 0.4167
Epoch 23/50 4/4 [==============================] - 5s 1s/step - loss: 0.5695 - accuracy: 0.7188 - val_loss: 0.5597 - val_accuracy: 0.5000
Epoch 24/50 4/4 [==============================] - 5s 1s/step - loss: 0.5520 - accuracy: 0.7188 - val_loss: 0.5116 - val_accuracy: 0.5000
Epoch 25/50 4/4 [==============================] - 5s 1s/step - loss: 0.5334 - accuracy: 0.7812 - val_loss: 0.5153 - val_accuracy: 0.5000
Epoch 26/50 4/4 [==============================] - 5s 1s/step - loss: 0.5225 - accuracy: 0.8281 - val_loss: 0.5033 - val_accuracy: 0.5000
Epoch 27/50 4/4 [==============================] - 5s 1s/step - loss: 0.4895 - accuracy: 0.7969 - val_loss: 0.4786 - val_accuracy: 0.6667
Epoch 28/50 4/4 [==============================] - 5s 1s/step - loss: 0.4765 - accuracy: 0.8438 - val_loss: 0.4846 - val_accuracy: 0.5833
Epoch 29/50 4/4 [==============================] - 5s 1s/step - loss: 0.4611 - accuracy: 0.8438 - val_loss: 0.4341 - val_accuracy: 0.6667
Epoch 30/50 4/4 [==============================] - 5s 1s/step - loss: 0.4298 - accuracy: 0.8750 - val_loss: 0.4166 - val_accuracy: 0.7500
Epoch 31/50 4/4 [==============================] - 5s 1s/step - loss: 0.4294 - accuracy: 0.8906 - val_loss: 0.3992 - val_accuracy: 0.8333
Epoch 32/50 4/4 [==============================] - 5s 1s/step - loss: 0.3954 - accuracy: 0.8906 - val_loss: 0.3749 - val_accuracy: 0.8333
Epoch 33/50 4/4 [==============================] - 5s 1s/step - loss: 0.4137 - accuracy: 0.8750 - val_loss: 0.3589 - val_accuracy: 0.8333
Epoch 34/50 4/4 [==============================] - 5s 1s/step - loss: 0.3441 - accuracy: 0.9062 - val_loss: 0.3871 - val_accuracy: 0.8333
Epoch 35/50 4/4 [==============================] - 5s 1s/step - loss: 0.3402 - accuracy: 0.8906 - val_loss: 0.3976 - val_accuracy: 0.6667
Epoch 36/50 4/4 [==============================] - 5s 1s/step - loss: 0.3345 - accuracy: 0.9062 - val_loss: 0.3098 - val_accuracy: 0.9167
Epoch 37/50 4/4 [==============================] - 5s 1s/step - loss: 0.3337 - accuracy: 0.9062 - val_loss: 0.3280 - val_accuracy: 0.8333
Epoch 38/50 4/4 [==============================] - 5s 1s/step - loss: 0.3224 - accuracy: 0.9062 - val_loss: 0.2959 - val_accuracy: 0.9167
Epoch 39/50 4/4 [==============================] - 5s 1s/step - loss: 0.2935 - accuracy: 0.9062 - val_loss: 0.3134 - val_accuracy: 0.8333
Epoch 40/50 4/4 [==============================] - 5s 1s/step - loss: 0.2934 - accuracy: 0.9219 - val_loss: 0.3006 - val_accuracy: 0.8333
Epoch 41/50 4/4 [==============================] - 5s 1s/step - loss: 0.3100 - accuracy: 0.8594 - val_loss: 0.2844 - val_accuracy: 0.8333
Epoch 42/50 4/4 [==============================] - 5s 1s/step - loss: 0.2790 - accuracy: 0.8906 - val_loss: 0.2652 - val_accuracy: 0.9167
Epoch 43/50 4/4 [==============================] - 5s 1s/step - loss: 0.2583 - accuracy: 0.9062 - val_loss: 0.2932 - val_accuracy: 0.8333
Epoch 44/50 4/4 [==============================] - 5s 1s/step - loss: 0.2900 - accuracy: 0.9375 - val_loss: 0.2828 - val_accuracy: 0.7500
Epoch 45/50 4/4 [==============================] - 5s 1s/step - loss: 0.2814 - accuracy: 0.8906 - val_loss: 0.2181 - val_accuracy: 1.0000
Epoch 46/50 4/4 [==============================] - 5s 1s/step - loss: 0.2814 - accuracy: 0.8906 - val_loss: 0.1943 - val_accuracy: 0.9167
Epoch 47/50 4/4 [==============================] - 5s 1s/step - loss: 0.2322 - accuracy: 0.8906 - val_loss: 0.1787 - val_accuracy: 1.0000
Epoch 48/50 4/4 [==============================] - 5s 1s/step - loss: 0.2491 - accuracy: 0.9375 - val_loss: 0.1424 - val_accuracy: 1.0000
Epoch 49/50 4/4 [==============================] - 5s 1s/step - loss: 0.2259 - accuracy: 0.9219 - val_loss: 0.2408 - val_accuracy: 0.9167
Epoch 50/50 4/4 [==============================] - 5s 1s/step - loss: 0.2384 - accuracy: 0.8750 - val_loss: 0.1851 - val_accuracy: 1.0000
```

```python
loss_plotter.plot(training_histories)
```

```python
acc_plotter.plot(training_histories)
```