
Introduction

Decision Forests (DF) are a large family of machine learning algorithms for supervised classification, regression and ranking. As the name suggests, DFs use decision trees as a building block. Today, the two most popular DF training algorithms are Random Forests and Gradient Boosted Decision Trees. Both are ensemble techniques that combine multiple decision trees, but they differ in how they build and combine them.
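To make the difference concrete, here is a minimal pure-Python sketch. It is a toy illustration and not how TF-DF implements either algorithm: the helper names (`fit_stump`, `train_bagging`, `train_boosting`), the one-split "stump" trees and the synthetic data are all assumptions made for this example. The Random-Forest-style ensemble trains its trees independently on bootstrap samples and averages them, while the boosting-style ensemble trains its trees one after another, each on the residual errors of the trees before it.

```python
import random


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree: one threshold and one mean per side."""
    global_mean = sum(ys) / len(ys)
    # Fallback (no valid split): always predict the global mean.
    best = (float("inf"), float("inf"), global_mean, global_mean)
    for threshold in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < threshold]
        right = [y for x, y in zip(xs, ys) if x >= threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((y - left_mean) ** 2 for y in left) +
               sum((y - right_mean) ** 2 for y in right))
        if sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best

    def predict(x):
        return left_mean if x < threshold else right_mean

    return predict


def train_bagging(xs, ys, num_trees, rng):
    """Random-Forest style: independent stumps on bootstrap samples, averaged."""
    stumps = []
    for _ in range(num_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]
        stumps.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(stump(x) for stump in stumps) / len(stumps)


def train_boosting(xs, ys, num_trees, learning_rate=0.5):
    """Boosting style: each new stump is fitted to the current residuals."""
    stumps = []

    def predict(x):
        return sum(learning_rate * stump(x) for stump in stumps)

    for _ in range(num_trees):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [x * x for x in xs]  # Synthetic regression target.
bagged = train_bagging(xs, ys, num_trees=20, rng=rng)
boosted = train_boosting(xs, ys, num_trees=20)
print("bagging  training MSE:", mse(bagged, xs, ys))
print("boosting training MSE:", mse(boosted, xs, ys))
```

In the bagging variant the trees could be trained in any order, or in parallel. In the boosting variant the order matters, because every tree depends on the predictions of its predecessors.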

TensorFlow Decision Forests (TF-DF) is a library for the training, evaluation, interpretation and inference of Decision Forest models.

In this tutorial, you will learn how to:

- Train a multi-class classification Random Forest on a dataset containing numerical, categorical and missing features.

- Evaluate the model on a test dataset.

- Prepare the model for TensorFlow Serving.

- Examine the overall structure of the model and the importance of each feature.

- Re-train the model with a different learning algorithm (Gradient Boosted Decision Trees).

- Use a different set of input features.

- Change the hyperparameters of the model.

- Preprocess the features.

- Train a model for regression.

- Train a model for ranking.

Detailed documentation is available in the user manual. The example directory contains other end-to-end examples.

Installing TensorFlow Decision Forests

Install TF-DF by running the following cell.

pip install tensorflow_decision_forests

Install Wurlitzer to display the detailed training logs. This is only needed in Colab.

pip install wurlitzer

Importing libraries

import tensorflow_decision_forests as tfdf

import os
import numpy as np
import pandas as pd
import tensorflow as tf
import math

try:
    from wurlitzer import sys_pipes
except ImportError:
    # Fall back to the Colab log-capture helper when wurlitzer is not installed.
    from colabtools.googlelog import CaptureLog as sys_pipes

from IPython.core.magic import register_line_magic
from IPython.display import Javascript, display

WARNING:root:Failure to load the custom c++ tensorflow ops. This error is likely caused the version of TensorFlow and TensorFlow Decision Forests are not compatible.

WARNING:root:TF Parameter Server distributed training not available.

The hidden code cell limits the output height in Colab.

# Some of the model training logs can cover the full
# screen if not compressed to a smaller viewport.
# This magic allows setting a max height for a cell.
@register_line_magic
def set_cell_height(size):
    display(
        Javascript("google.colab.output.setIframeHeight(0, true, {maxHeight: " +
                   str(size) + "})"))

# Check the version of TensorFlow Decision Forests

print("Found TensorFlow Decision Forests v" + tfdf.__version__)

Found TensorFlow Decision Forests v0.2.1

Training a Random Forest model

In this section, we train, evaluate, analyse and export a multi-class classification Random Forest trained on the Palmer's Penguins dataset.

Load the dataset and convert it into a tf.data.Dataset

This dataset is very small (344 examples) and stored as a .csv-like file. Therefore, use Pandas to load it.

Let's assemble the dataset into a csv file (i.e. add the header), and load it:

# Download the dataset

!wget -q https://storage.googleapis.com/download.tensorflow.org/data/palmer_penguins/penguins.csv -O /tmp/penguins.csv

# Load a dataset into a Pandas Dataframe.

dataset_df = pd.read_csv("/tmp/penguins.csv")

# Display the first 3 examples.

dataset_df.head(3)

The dataset contains a mix of numerical (e.g. bill_depth_mm), categorical (e.g. island) and missing features. Unlike NN-based models, TF-DF supports all these feature types natively, so there is no need for preprocessing such as one-hot encoding, normalization or an extra is_present feature.

Labels are a bit different: Keras metrics expect integers. The label (species) is stored as a string, so let's convert it into an integer.

# Encode the categorical label into an integer.

#

# Details:

# This stage is necessary if your classification label is represented as a

# string. Note: Keras expected classification labels to be integers.

# Name of the label column.

label = "species"

classes = dataset_df[label].unique().tolist()

print(f"Label classes: {classes}")

dataset_df[label] = dataset_df[label].map(classes.index)

Label classes: ['Adelie', 'Gentoo', 'Chinstrap']
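Because the label is now an integer, the order of `classes` is what links a model output back to a species name. The following minimal sketch shows the round trip in plain Python; the `encode` and `decode` helpers are hypothetical names introduced for this illustration, and the class order is copied from the output above.

```python
# Class names in the order printed above; position i is the integer label i.
classes = ["Adelie", "Gentoo", "Chinstrap"]


def encode(name):
    """Map a class name to its integer label (same rule as classes.index)."""
    return classes.index(name)


def decode(label):
    """Map an integer label back to its class name."""
    return classes[label]


encoded = [encode(name) for name in ["Gentoo", "Adelie", "Chinstrap", "Gentoo"]]
print(encoded)
print([decode(label) for label in encoded])
```

Keeping the `classes` list around is therefore useful later, when integer predictions need to be reported as species names.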

Next, split the dataset into training and testing:

# Split the dataset into a training and a testing dataset.
def split_dataset(dataset, test_ratio=0.30):
    """Splits a panda dataframe in two."""
    test_indices = np.random.rand(len(dataset)) < test_ratio
    return dataset[~test_indices], dataset[test_indices]

train_ds_pd, test_ds_pd = split_dataset(dataset_df)
print("{} examples in training, {} examples for testing.".format(
    len(train_ds_pd), len(test_ds_pd)))

252 examples in training, 92 examples for testing.
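Each row is assigned to the test set independently with probability `test_ratio`, so the split sizes are only approximately 70/30 (here 92 of 344 rows, about 27%, ended up in the test set) and they change from run to run. The sketch below reproduces the same idea with the standard library and a fixed seed so that the split is repeatable; `split_rows` and its `seed` parameter are hypothetical names for this illustration, and the integer list merely stands in for the rows of the table.

```python
import random


def split_rows(rows, test_ratio=0.30, seed=1234):
    """Randomly assign each row to the test set with probability test_ratio."""
    rng = random.Random(seed)
    train, test = [], []
    for row in rows:
        (test if rng.random() < test_ratio else train).append(row)
    return train, test


rows = list(range(344))  # Stand-in for the 344 rows of the penguins table.
train_rows, test_rows = split_rows(rows)
print(len(train_rows), "training rows,", len(test_rows), "test rows")
```

With NumPy, seeding the random generator before drawing the mask would give the same kind of repeatability.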

And finally, convert the pandas dataframe (pd.DataFrame) into TensorFlow datasets (tf.data.Dataset):

train_ds = tfdf.keras.pd_dataframe_to_tf_dataset(train_ds_pd, label=label)

test_ds = tfdf.keras.pd_dataframe_to_tf_dataset(test_ds_pd, label=label)

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/tensorflow_decision_forests/keras/core.py:1612: FutureWarning: In a future version of pandas all arguments of DataFrame.drop except for the argument 'labels' will be keyword-only features_dataframe = dataframe.drop(label, 1)

Note: pd_dataframe_to_tf_dataset could have converted the label to an integer for you.

And, if you want to create the tf.data.Dataset yourself, there are a couple of things to remember:

- The learning algorithms work with a one-epoch dataset and without shuffling.

- The batch size does not impact the training algorithm, but a small value might slow down reading the dataset.

Train the model

%set_cell_height 300

# Specify the model.
model_1 = tfdf.keras.RandomForestModel()

# Optionally, add evaluation metrics.
model_1.compile(metrics=["accuracy"])

# Train the model.
# "sys_pipes" is optional. It enables the display of the training logs.
with sys_pipes():
    model_1.fit(x=train_ds)

<IPython.core.display.Javascript object>

1/4 [======>.......................] - ETA: 12s

[INFO kernel.cc:736] Start Yggdrasil model training

[INFO kernel.cc:737] Collect training examples

[INFO kernel.cc:392] Number of batches: 4

[INFO kernel.cc:393] Number of examples: 252

[INFO kernel.cc:759] Dataset:

Number of records: 252

Number of columns: 8

Number of columns by type:

NUMERICAL: 5 (62.5%)

CATEGORICAL: 3 (37.5%)

Columns:

NUMERICAL: 5 (62.5%)

0: "bill_depth_mm" NUMERICAL num-nas:2 (0.793651%) mean:17.1936 min:13.2 max:21.5 sd:1.96763

1: "bill_length_mm" NUMERICAL num-nas:2 (0.793651%) mean:44.1884 min:33.1 max:59.6 sd:5.36528

2: "body_mass_g" NUMERICAL num-nas:2 (0.793651%) mean:4221 min:2700 max:6300 sd:811.125

3: "flipper_length_mm" NUMERICAL num-nas:2 (0.793651%) mean:201.264 min:172 max:231 sd:14.0793

6: "year" NUMERICAL mean:2008.05 min:2007 max:2009 sd:0.817297

CATEGORICAL: 3 (37.5%)

4: "island" CATEGORICAL has-dict vocab-size:4 zero-ood-items most-frequent:"Biscoe" 126 (50%)

5: "sex" CATEGORICAL num-nas:7 (2.77778%) has-dict vocab-size:3 zero-ood-items most-frequent:"male" 124 (50.6122%)

7: "__LABEL" CATEGORICAL integerized vocab-size:4 no-ood-item

Terminology:

nas: Number of non-available (i.e. missing) values.

ood: Out of dictionary.

manually-defined: Attribute which type is manually defined by the user i.e. the type was not automatically inferred.

tokenized: The attribute value is obtained through tokenization.

has-dict: The attribute is attached to a string dictionary e.g. a categorical attribute stored as a string.

vocab-size: Number of unique values.

[INFO kernel.cc:762] Configure learner

[INFO kernel.cc:787] Training config:

learner: "RANDOM_FOREST"

features: "bill_depth_mm"

features: "bill_length_mm"

features: "body_mass_g"

features: "flipper_length_mm"

features: "island"

features: "sex"

features: "year"

label: "__LABEL"

task: CLASSIFICATION

[yggdrasil_decision_forests.model.random_forest.proto.random_forest_config] {

num_trees: 300

decision_tree {

max_depth: 16

min_examples: 5

in_split_min_examples_check: true

missing_value_policy: GLOBAL_IMPUTATION

allow_na_conditions: false

categorical_set_greedy_forward {

sampling: 0.1

max_num_items: -1

min_item_frequency: 1

}

growing_strategy_local {

}

categorical {

cart {

}

}

num_candidate_attributes_ratio: -1

axis_aligned_split {

}

internal {

sorting_strategy: PRESORTED

}

}

winner_take_all_inference: true

compute_oob_performances: true

compute_oob_variable_importances: false

adapt_bootstrap_size_ratio_for_maximum_training_duration: false

}

[INFO kernel.cc:790] Deployment config:

num_threads: 6

[INFO kernel.cc:817] Train model

[INFO random_forest.cc:315] Training random forest on 252 example(s) and 7 feature(s).

[INFO random_forest.cc:628] Training of tree 1/300 (tree index:0) done accuracy:0.922222 logloss:2.8034

[INFO random_forest.cc:628] Training of tree 11/300 (tree index:10) done accuracy:0.960159 logloss:0.355553

[INFO random_forest.cc:628] Training of tree 21/300 (tree index:17) done accuracy:0.960317 logloss:0.360011

[INFO random_forest.cc:628] Training of tree 31/300 (tree index:32) done accuracy:0.968254 logloss:0.355906

[INFO random_forest.cc:628] Training of tree 41/300 (tree index:41) done accuracy:0.972222 logloss:0.354263

[INFO random_forest.cc:628] Training of tree 51/300 (tree index:51) done accuracy:0.980159 logloss:0.355675

[INFO random_forest.cc:628] Training of tree 61/300 (tree index:60) done accuracy:0.97619 logloss:0.354058

[INFO random_forest.cc:628] Training of tree 71/300 (tree index:70) done accuracy:0.972222 logloss:0.355711

[INFO random_forest.cc:628] Training of tree 81/300 (tree index:82) done accuracy:0.980159 logloss:0.356747

[INFO random_forest.cc:628] Training of tree 91/300 (tree index:90) done accuracy:0.97619 logloss:0.225018

[INFO random_forest.cc:628] Training of tree 101/300 (tree index:100) done accuracy:0.972222 logloss:0.221976

[INFO random_forest.cc:628] Training of tree 111/300 (tree index:109) done accuracy:0.972222 logloss:0.223525

[INFO random_forest.cc:628] Training of tree 121/300 (tree index:117) done accuracy:0.972222 logloss:0.095911

[INFO random_forest.cc:628] Training of tree 131/300 (tree index:127) done accuracy:0.968254 logloss:0.0970941

[INFO random_forest.cc:628] Training of tree 141/300 (tree index:140) done accuracy:0.972222 logloss:0.0962378

[INFO random_forest.cc:628] Training of tree 151/300 (tree index:151) done accuracy:0.972222 logloss:0.0952778

[INFO random_forest.cc:628] Training of tree 161/300 (tree index:161) done accuracy:0.97619 logloss:0.0953929

[INFO random_forest.cc:628] Training of tree 171/300 (tree index:172) done accuracy:0.972222 logloss:0.0966406

[INFO random_forest.cc:628] Training of tree 181/300 (tree index:180) done accuracy:0.97619 logloss:0.096802

[INFO random_forest.cc:628] Training of tree 191/300 (tree index:189) done accuracy:0.972222 logloss:0.0952902

[INFO random_forest.cc:628] Training of tree 201/300 (tree index:200) done accuracy:0.972222 logloss:0.0926996

[INFO random_forest.cc:628] Training of tree 211/300 (tree index:210) done accuracy:0.97619 logloss:0.0923645

[INFO random_forest.cc:628] Training of tree 221/300 (tree index:221) done accuracy:0.97619 logloss:0.0928984

[INFO random_forest.cc:628] Training of tree 231/300 (tree index:230) done accuracy:0.97619 logloss:0.0938896

[INFO random_forest.cc:628] Training of tree 241/300 (tree index:240) done accuracy:0.972222 logloss:0.0947512

[INFO random_forest.cc:628] Training of tree 251/300 (tree index:250) done accuracy:0.972222 logloss:0.0952597

[INFO random_forest.cc:628] Training of tree 261/300 (tree index:260) done accuracy:0.972222 logloss:0.0948972

[INFO random_forest.cc:628] Training of tree 271/300 (tree index:270) done accuracy:0.968254 logloss:0.096022

[INFO random_forest.cc:628] Training of tree 281/300 (tree index:280) done accuracy:0.968254 logloss:0.0950604

[INFO random_forest.cc:628] Training of tree 291/300 (tree index:290) done accuracy:0.972222 logloss:0.0962781

[INFO random_forest.cc:628] Training of tree 300/300 (tree index:298) done accuracy:0.972222 logloss:0.0967387

[INFO random_forest.cc:696] Final OOB metrics: accuracy:0.972222 logloss:0.0967387

[INFO kernel.cc:828] Export model in log directory: /tmp/tmpdqbqx3ck

[INFO kernel.cc:836] Save model in resources

[INFO kernel.cc:988] Loading model from path

[INFO decision_forest.cc:590] Model loaded with 300 root(s), 4558 node(s), and 7 input feature(s).

[INFO abstract_model.cc:993] Engine "RandomForestGeneric" built

[INFO kernel.cc:848] Use fast generic engine

4/4 [==============================] - 4s 19ms/step

Remarks

- No input features are specified. Therefore, all the columns except the label are used as input features. The features used by the model are shown in the training logs and in model.summary().

- DFs natively consume numerical, categorical and categorical-set features as well as missing values. Numerical features do not need to be normalized. Categorical string values do not need to be encoded in a dictionary.

- No training hyper-parameters are specified. Therefore, the default hyper-parameters are used. Default hyper-parameters provide reasonable results in most situations.

- Calling compile on the model before fit is optional. Compile can be used to provide extra evaluation metrics.

- Training algorithms do not need a validation dataset. If a validation dataset is provided, it is only used to show metrics.

Evaluate the model

Let's evaluate our model on the test dataset.

evaluation = model_1.evaluate(test_ds, return_dict=True)
print()

for name, value in evaluation.items():
    print(f"{name}: {value:.4f}")

2/2 [==============================] - 0s 4ms/step - loss: 0.0000e+00 - accuracy: 1.0000

loss: 0.0000

accuracy: 1.0000

Remark: The test accuracy (1.0000) is close to the out-of-bag accuracy (0.9722) shown in the training logs.

See the Model Self Evaluation section below for more evaluation methods.

Prepare this model for TensorFlow Serving

Export the model to the SavedModel format for later re-use, e.g. with TensorFlow Serving.

model_1.save("/tmp/my_saved_model")

2021-11-08 12:10:07.057561: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

INFO:tensorflow:Assets written to: /tmp/my_saved_model/assets

INFO:tensorflow:Assets written to: /tmp/my_saved_model/assets

Plot the model

Plotting a decision tree and following the first branches helps in learning about decision forests. In some cases, plotting a model can even be used for debugging.

Because of the difference in the way they are trained, some models are more interesting to plot than others. Because of the noise injected during training and the depth of the trees, plotting a Random Forest is less informative than plotting a CART or the first tree of a Gradient Boosted Tree.

Nevertheless, let's plot the first tree of our Random Forest model:

tfdf.model_plotter.plot_model_in_colab(model_1, tree_idx=0, max_depth=3)

The root node on the left contains the first condition (bill_depth_mm >= 16.55), the number of examples (240) and the label distribution (the red-blue-green bar).

Examples for which bill_depth_mm >= 16.55 evaluates to true are branched to the green path. The other ones are branched to the red path.

The deeper the node, the purer it becomes, i.e. the label distribution is biased toward a subset of classes.
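The routing and the notion of purity can be sketched in a few lines of plain Python. The threshold comes from the plot described above, but the measurements and the helper names (`route`, `label_distribution`) are made up for illustration, and missing values are ignored for simplicity.

```python
from collections import Counter


def route(example, feature="bill_depth_mm", threshold=16.55):
    """Return True (green branch) when the node condition holds."""
    return example[feature] >= threshold


def label_distribution(examples):
    """Fraction of examples per label, i.e. what the colored bar displays."""
    counts = Counter(e["species"] for e in examples)
    return {label: n / len(examples) for label, n in counts.items()}


# Made-up measurements, for illustration only.
examples = [
    {"bill_depth_mm": 18.7, "species": "Adelie"},
    {"bill_depth_mm": 17.4, "species": "Adelie"},
    {"bill_depth_mm": 19.5, "species": "Chinstrap"},
    {"bill_depth_mm": 14.3, "species": "Gentoo"},
    {"bill_depth_mm": 15.0, "species": "Gentoo"},
]
green = [e for e in examples if route(e)]
red = [e for e in examples if not route(e)]
print("root :", label_distribution(examples))
print("green:", label_distribution(green))
print("red  :", label_distribution(red))
```

In this toy data the red branch contains a single species, so its distribution is purer than the one at the root.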

Model structure and feature importance

The overall structure of the model is shown with .summary(). You will see:

- Type: The learning algorithm used to train the model (Random Forest in our case).

- Task: The problem solved by the model (Classification in our case).

- Input Features: The input features of the model.

- Variable Importance: Different measures of the importance of each feature for the model.

- Out-of-bag evaluation: The out-of-bag evaluation of the model. This is a cheap and efficient alternative to cross-validation.

- Number of {trees, nodes} and other metrics: Statistics about the structure of the decision forest.

Remark: The summary's content depends on the learning algorithm (e.g. out-of-bag evaluation is only available for Random Forest) and on the hyper-parameters (e.g. the mean-decrease-in-accuracy variable importance can be disabled in the hyper-parameters).

%set_cell_height 300

model_1.summary()

<IPython.core.display.Javascript object>

Model: "random_forest_model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

=================================================================

Total params: 1

Trainable params: 0

Non-trainable params: 1

_________________________________________________________________

Type: "RANDOM_FOREST"

Task: CLASSIFICATION

Label: "__LABEL"

Input Features (7):

bill_depth_mm

bill_length_mm

body_mass_g

flipper_length_mm

island

sex

year

No weights

Variable Importance: MEAN_MIN_DEPTH:

1. "__LABEL" 3.318694 ################

2. "year" 3.297927 ###############

3. "sex" 3.267547 ###############

4. "body_mass_g" 2.658307 ##########

5. "bill_depth_mm" 2.213272 #######

6. "island" 2.153127 #######

7. "bill_length_mm" 1.515876 ##

8. "flipper_length_mm" 1.217305

Variable Importance: NUM_AS_ROOT:

1. "flipper_length_mm" 161.000000 ################

2. "bill_length_mm" 62.000000 #####

3. "bill_depth_mm" 57.000000 #####

4. "body_mass_g" 12.000000

5. "island" 8.000000

Variable Importance: NUM_NODES:

1. "bill_length_mm" 682.000000 ################

2. "bill_depth_mm" 399.000000 #########

3. "flipper_length_mm" 383.000000 ########

4. "body_mass_g" 315.000000 #######

5. "island" 298.000000 ######

6. "sex" 34.000000

7. "year" 18.000000

Variable Importance: SUM_SCORE:

1. "flipper_length_mm" 26046.340791 ################

2. "bill_length_mm" 24253.203630 ##############

3. "bill_depth_mm" 11054.011817 ######

4. "island" 10713.713617 ######

5. "body_mass_g" 4117.938353 ##

6. "sex" 290.820204

7. "year" 39.211544

Winner take all: true

Out-of-bag evaluation: accuracy:0.972222 logloss:0.0967387

Number of trees: 300

Total number of nodes: 4558

Number of nodes by tree:

Count: 300 Average: 15.1933 StdDev: 3.2623

Min: 9 Max: 29 Ignored: 0

----------------------------------------------

[ 9, 10) 6 2.00% 2.00% #

[ 10, 11) 0 0.00% 2.00%

[ 11, 12) 38 12.67% 14.67% #####

[ 12, 13) 0 0.00% 14.67%

[ 13, 14) 71 23.67% 38.33% #########

[ 14, 15) 0 0.00% 38.33%

[ 15, 16) 83 27.67% 66.00% ##########

[ 16, 17) 0 0.00% 66.00%

[ 17, 18) 52 17.33% 83.33% ######

[ 18, 19) 0 0.00% 83.33%

[ 19, 20) 27 9.00% 92.33% ###

[ 20, 21) 0 0.00% 92.33%

[ 21, 22) 12 4.00% 96.33% #

[ 22, 23) 0 0.00% 96.33%

[ 23, 24) 6 2.00% 98.33% #

[ 24, 25) 0 0.00% 98.33%

[ 25, 26) 3 1.00% 99.33%

[ 26, 27) 0 0.00% 99.33%

[ 27, 28) 1 0.33% 99.67%

[ 28, 29] 1 0.33% 100.00%

Depth by leafs:

Count: 2429 Average: 3.39234 StdDev: 1.08569

Min: 1 Max: 7 Ignored: 0

----------------------------------------------

[ 1, 2) 26 1.07% 1.07%

[ 2, 3) 557 22.93% 24.00% #######

[ 3, 4) 716 29.48% 53.48% #########

[ 4, 5) 767 31.58% 85.06% ##########

[ 5, 6) 300 12.35% 97.41% ####

[ 6, 7) 57 2.35% 99.75% #

[ 7, 7] 6 0.25% 100.00%

Number of training obs by leaf:

Count: 2429 Average: 31.1239 StdDev: 32.4208

Min: 5 Max: 115 Ignored: 0

----------------------------------------------

[ 5, 10) 1193 49.11% 49.11% ##########

[ 10, 16) 137 5.64% 54.76% #

[ 16, 21) 70 2.88% 57.64% #

[ 21, 27) 69 2.84% 60.48% #

[ 27, 32) 72 2.96% 63.44% #

[ 32, 38) 86 3.54% 66.98% #

[ 38, 43) 67 2.76% 69.74% #

[ 43, 49) 79 3.25% 72.99% #

[ 49, 54) 54 2.22% 75.22%

[ 54, 60) 43 1.77% 76.99%

[ 60, 66) 43 1.77% 78.76%

[ 66, 71) 39 1.61% 80.36%

[ 71, 77) 62 2.55% 82.91% #

[ 77, 82) 63 2.59% 85.51% #

[ 82, 88) 102 4.20% 89.71% #

[ 88, 93) 95 3.91% 93.62% #

[ 93, 99) 99 4.08% 97.69% #

[ 99, 104) 37 1.52% 99.22%

[ 104, 110) 16 0.66% 99.88%

[ 110, 115] 3 0.12% 100.00%

Attribute in nodes:

682 : bill_length_mm [NUMERICAL]

399 : bill_depth_mm [NUMERICAL]

383 : flipper_length_mm [NUMERICAL]

315 : body_mass_g [NUMERICAL]

298 : island [CATEGORICAL]

34 : sex [CATEGORICAL]

18 : year [NUMERICAL]

Attribute in nodes with depth <= 0:

161 : flipper_length_mm [NUMERICAL]

62 : bill_length_mm [NUMERICAL]

57 : bill_depth_mm [NUMERICAL]

12 : body_mass_g [NUMERICAL]

8 : island [CATEGORICAL]

Attribute in nodes with depth <= 1:

236 : flipper_length_mm [NUMERICAL]

224 : bill_length_mm [NUMERICAL]

175 : bill_depth_mm [NUMERICAL]

169 : island [CATEGORICAL]

70 : body_mass_g [NUMERICAL]

Attribute in nodes with depth <= 2:

401 : bill_length_mm [NUMERICAL]

319 : flipper_length_mm [NUMERICAL]

290 : bill_depth_mm [NUMERICAL]

261 : island [CATEGORICAL]

174 : body_mass_g [NUMERICAL]

14 : sex [CATEGORICAL]

6 : year [NUMERICAL]

Attribute in nodes with depth <= 3:

593 : bill_length_mm [NUMERICAL]

371 : bill_depth_mm [NUMERICAL]

365 : flipper_length_mm [NUMERICAL]

290 : island [CATEGORICAL]

273 : body_mass_g [NUMERICAL]

30 : sex [CATEGORICAL]

9 : year [NUMERICAL]

Attribute in nodes with depth <= 5:

681 : bill_length_mm [NUMERICAL]

399 : bill_depth_mm [NUMERICAL]

383 : flipper_length_mm [NUMERICAL]

314 : body_mass_g [NUMERICAL]

298 : island [CATEGORICAL]

33 : sex [CATEGORICAL]

18 : year [NUMERICAL]

Condition type in nodes:

1797 : HigherCondition

332 : ContainsBitmapCondition

Condition type in nodes with depth <= 0:

292 : HigherCondition

8 : ContainsBitmapCondition

Condition type in nodes with depth <= 1:

705 : HigherCondition

169 : ContainsBitmapCondition

Condition type in nodes with depth <= 2:

1190 : HigherCondition

275 : ContainsBitmapCondition

Condition type in nodes with depth <= 3:

1611 : HigherCondition

320 : ContainsBitmapCondition

Condition type in nodes with depth <= 5:

1795 : HigherCondition

331 : ContainsBitmapCondition

Node format: NOT_SET

Training OOB:

trees: 1, Out-of-bag evaluation: accuracy:0.922222 logloss:2.8034

trees: 11, Out-of-bag evaluation: accuracy:0.960159 logloss:0.355553

trees: 21, Out-of-bag evaluation: accuracy:0.960317 logloss:0.360011

trees: 31, Out-of-bag evaluation: accuracy:0.968254 logloss:0.355906

trees: 41, Out-of-bag evaluation: accuracy:0.972222 logloss:0.354263

trees: 51, Out-of-bag evaluation: accuracy:0.980159 logloss:0.355675

trees: 61, Out-of-bag evaluation: accuracy:0.97619 logloss:0.354058

trees: 71, Out-of-bag evaluation: accuracy:0.972222 logloss:0.355711

trees: 81, Out-of-bag evaluation: accuracy:0.980159 logloss:0.356747

trees: 91, Out-of-bag evaluation: accuracy:0.97619 logloss:0.225018

trees: 101, Out-of-bag evaluation: accuracy:0.972222 logloss:0.221976

trees: 111, Out-of-bag evaluation: accuracy:0.972222 logloss:0.223525

trees: 121, Out-of-bag evaluation: accuracy:0.972222 logloss:0.095911

trees: 131, Out-of-bag evaluation: accuracy:0.968254 logloss:0.0970941

trees: 141, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0962378

trees: 151, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0952778

trees: 161, Out-of-bag evaluation: accuracy:0.97619 logloss:0.0953929

trees: 171, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0966406

trees: 181, Out-of-bag evaluation: accuracy:0.97619 logloss:0.096802

trees: 191, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0952902

trees: 201, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0926996

trees: 211, Out-of-bag evaluation: accuracy:0.97619 logloss:0.0923645

trees: 221, Out-of-bag evaluation: accuracy:0.97619 logloss:0.0928984

trees: 231, Out-of-bag evaluation: accuracy:0.97619 logloss:0.0938896

trees: 241, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0947512

trees: 251, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0952597

trees: 261, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0948972

trees: 271, Out-of-bag evaluation: accuracy:0.968254 logloss:0.096022

trees: 281, Out-of-bag evaluation: accuracy:0.968254 logloss:0.0950604

trees: 291, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0962781

trees: 300, Out-of-bag evaluation: accuracy:0.972222 logloss:0.0967387

All the information in the summary is also available programmatically through the model inspector:

# The input features

model_1.make_inspector().features()

["bill_depth_mm" (1; #0), "bill_length_mm" (1; #1), "body_mass_g" (1; #2), "flipper_length_mm" (1; #3), "island" (4; #4), "sex" (4; #5), "year" (1; #6)]

# The feature importances

model_1.make_inspector().variable_importances()

{'NUM_NODES': [("bill_length_mm" (1; #1), 682.0),

("bill_depth_mm" (1; #0), 399.0),

("flipper_length_mm" (1; #3), 383.0),

("body_mass_g" (1; #2), 315.0),

("island" (4; #4), 298.0),

("sex" (4; #5), 34.0),

("year" (1; #6), 18.0)],

'SUM_SCORE': [("flipper_length_mm" (1; #3), 26046.34079089854),

("bill_length_mm" (1; #1), 24253.20363048464),

("bill_depth_mm" (1; #0), 11054.011817359366),

("island" (4; #4), 10713.713617041707),

("body_mass_g" (1; #2), 4117.938353393227),

("sex" (4; #5), 290.82020355574787),

("year" (1; #6), 39.21154398471117)],

'NUM_AS_ROOT': [("flipper_length_mm" (1; #3), 161.0),

("bill_length_mm" (1; #1), 62.0),

("bill_depth_mm" (1; #0), 57.0),

("body_mass_g" (1; #2), 12.0),

("island" (4; #4), 8.0)],

'MEAN_MIN_DEPTH': [("__LABEL" (4; #7), 3.318693759943752),

("year" (1; #6), 3.2979265641765556),

("sex" (4; #5), 3.2675474155474094),

("body_mass_g" (1; #2), 2.6583072575572553),

("bill_depth_mm" (1; #0), 2.213271913271913),

("island" (4; #4), 2.153126937876938),

("bill_length_mm" (1; #1), 1.5158758371258376),

("flipper_length_mm" (1; #3), 1.2173052873052872)]}

The content of the summary and the inspector depends on the learning algorithm (tfdf.keras.RandomForestModel in this case) and its hyper-parameters (e.g. compute_oob_variable_importances=True triggers the computation of out-of-bag variable importances for the Random Forest learner).
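Two of the importances listed above are plain counts: NUM_AS_ROOT matches the "Attribute in nodes with depth <= 0" table of the summary, and NUM_NODES matches the "Attribute in nodes" table. The sketch below computes both on a tiny hand-written forest. The nested-tuple tree format is a hypothetical structure chosen for this example, not TF-DF's internal representation.

```python
from collections import Counter

# Each toy tree is a nested tuple (feature, negative_child, positive_child);
# None marks a leaf. This is a hypothetical structure, not TF-DF's own format.
forest = [
    ("flipper_length_mm", ("bill_length_mm", None, None), ("island", None, None)),
    ("flipper_length_mm", None, ("bill_depth_mm", None, None)),
    ("bill_length_mm", ("flipper_length_mm", None, None), None),
]


def count_nodes(node, counter):
    """Count how many non-leaf nodes test each feature."""
    if node is None:
        return
    feature, negative, positive = node
    counter[feature] += 1
    count_nodes(negative, counter)
    count_nodes(positive, counter)


# NUM_AS_ROOT: number of trees whose root condition uses the feature.
num_as_root = Counter(tree[0] for tree in forest)

# NUM_NODES: number of nodes, at any depth, whose condition uses the feature.
num_nodes = Counter()
for tree in forest:
    count_nodes(tree, num_nodes)

print("NUM_AS_ROOT:", num_as_root.most_common())
print("NUM_NODES:  ", num_nodes.most_common())
```

A feature that never appears as a root is simply absent from NUM_AS_ROOT, which is why that list is shorter than the others in the output above.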

Model Self Evaluation

During training, TF-DF models can evaluate themselves even if no validation dataset is provided to the fit() method. The exact logic depends on the model. For example, Random Forest uses out-of-bag evaluation while Gradient Boosted Trees use an internal train-validation split.

The model self evaluation is available with the inspector's evaluation():

model_1.make_inspector().evaluation()

Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09673874925762888, rmse=None, ndcg=None, aucs=None)
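Out-of-bag evaluation works because each tree never sees part of the training data. The sketch below assumes the classic Random Forest setup, in which every tree is trained on a bootstrap sample drawn with replacement and of the same size as the training set; this is an assumption about the configuration, and `out_of_bag_fraction` is a hypothetical helper. Under that assumption roughly 37% of the examples are left out of each tree and can serve as its private test set.

```python
import random


def out_of_bag_fraction(num_examples, rng):
    """Draw one bootstrap sample and return the share of examples left out."""
    in_bag = {rng.randrange(num_examples) for _ in range(num_examples)}
    return 1 - len(in_bag) / num_examples


rng = random.Random(0)
num_examples = 252  # Size of the training set reported in the logs above.
fractions = [out_of_bag_fraction(num_examples, rng) for _ in range(300)]
mean_fraction = sum(fractions) / len(fractions)
print(f"mean out-of-bag share over 300 trees: {mean_fraction:.3f}")
```

This is consistent with the training logs in this tutorial, where the evaluation after the first tree covers 90 of the 252 training examples (about 36%), and all 252 examples are covered once a few more trees have been trained.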

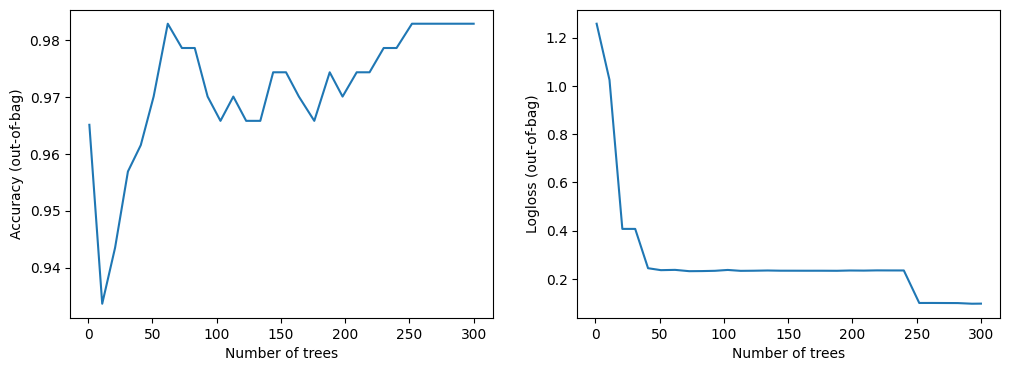

Plotting the training logs

The training logs show the quality of the model (e.g. accuracy evaluated on the out-of-bag or validation dataset) according to the number of trees in the model. These logs are helpful for studying the balance between model size and model quality.

The logs are available in multiple ways:

- Displayed during training if fit() is wrapped in with sys_pipes(): (see example above).

- At the end of the model summary, i.e. model.summary() (see example above).

- Programmatically, using the model inspector, i.e. model.make_inspector().training_logs().

- Using TensorBoard.

Let's try the model inspector first:

%set_cell_height 150

model_1.make_inspector().training_logs()

<IPython.core.display.Javascript object> [TrainLog(num_trees=1, evaluation=Evaluation(num_examples=90, accuracy=0.9222222222222223, loss=2.8033951229519314, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=11, evaluation=Evaluation(num_examples=251, accuracy=0.9601593625498008, loss=0.35555349201320174, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=21, evaluation=Evaluation(num_examples=252, accuracy=0.9603174603174603, loss=0.36001140491238665, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=31, evaluation=Evaluation(num_examples=252, accuracy=0.9682539682539683, loss=0.35590612713897984, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=41, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.3542631175664682, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=51, evaluation=Evaluation(num_examples=252, accuracy=0.9801587301587301, loss=0.3556750144602524, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=61, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.35405768100763596, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=71, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.3557109447003948, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=81, evaluation=Evaluation(num_examples=252, accuracy=0.9801587301587301, loss=0.3567472372411026, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=91, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.22501842999121263, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=101, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.22197619985256875, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=111, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.22352461745252922, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=121, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.0959110420552038, 
rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=131, evaluation=Evaluation(num_examples=252, accuracy=0.9682539682539683, loss=0.09709411316240828, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=141, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09623779574896962, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=151, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.0952777798871495, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=161, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.09539292345473928, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=171, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.0966405748567056, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=181, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.09680202871280176, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=191, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09529015259994637, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=201, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09269960071625453, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=211, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.09236453164605395, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=221, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.09289838398791968, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=231, evaluation=Evaluation(num_examples=252, accuracy=0.9761904761904762, loss=0.09388963293491139, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=241, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09475124760028271, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=251, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09525974302197851, 
rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=261, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09489722432391275, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=271, evaluation=Evaluation(num_examples=252, accuracy=0.9682539682539683, loss=0.09602198886152889, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=281, evaluation=Evaluation(num_examples=252, accuracy=0.9682539682539683, loss=0.09506043538613806, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=291, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09627806474750358, rmse=None, ndcg=None, aucs=None)), TrainLog(num_trees=300, evaluation=Evaluation(num_examples=252, accuracy=0.9722222222222222, loss=0.09673874925762888, rmse=None, ndcg=None, aucs=None))]

Let's plot it:

import matplotlib.pyplot as plt

logs = model_1.make_inspector().training_logs()

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot([log.num_trees for log in logs], [log.evaluation.accuracy for log in logs])

plt.xlabel("Number of trees")

plt.ylabel("Accuracy (out-of-bag)")

plt.subplot(1, 2, 2)

plt.plot([log.num_trees for log in logs], [log.evaluation.loss for log in logs])

plt.xlabel("Number of trees")

plt.ylabel("Logloss (out-of-bag)")

plt.show()

This dataset is small. You can see the model converging almost immediately.

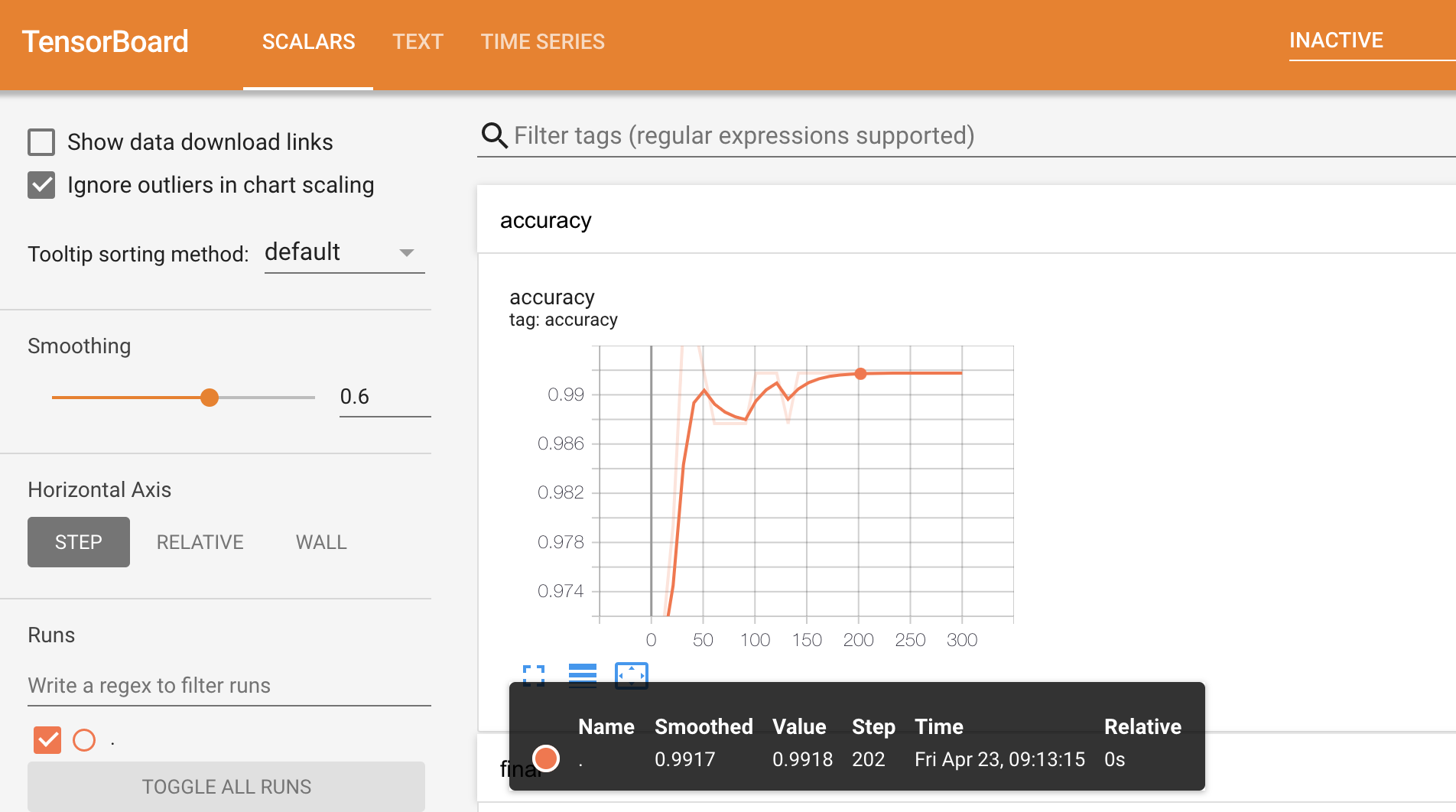
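The same training logs can also be searched programmatically, for example to find the number of trees at which the out-of-bag loss is lowest. The following is a minimal sketch, not part of the original tutorial: `Evaluation` and `TrainLog` are stand-in namedtuples that mimic the records printed above, `lowest_loss_entry` is a hypothetical helper, and the list holds only a few entries copied from the log output rather than the full log.

```python
from collections import namedtuple

# Stand-ins for the TrainLog / Evaluation records shown in the log output.
# (Assumption: only the fields used here are reproduced.)
Evaluation = namedtuple("Evaluation", ["num_examples", "accuracy", "loss"])
TrainLog = namedtuple("TrainLog", ["num_trees", "evaluation"])

# A few entries copied from the printed training logs (not the full list).
logs = [
    TrainLog(131, Evaluation(252, 0.9682539682539683, 0.09709411316240828)),
    TrainLog(161, Evaluation(252, 0.9761904761904762, 0.09539292345473928)),
    TrainLog(211, Evaluation(252, 0.9761904761904762, 0.09236453164605395)),
    TrainLog(251, Evaluation(252, 0.9722222222222222, 0.09525974302197851)),
    TrainLog(300, Evaluation(252, 0.9722222222222222, 0.09673874925762888)),
]


def lowest_loss_entry(train_logs):
    """Return the log entry with the smallest evaluation loss."""
    return min(train_logs, key=lambda log: log.evaluation.loss)


best = lowest_loss_entry(logs)
print(f"Lowest loss with {best.num_trees} trees: {best.evaluation.loss:.5f}")
```

With a trained model, the same helper should work on `model_1.make_inspector().training_logs()`, since those entries expose `num_trees` and `evaluation.loss` as used in the plotting code above.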

Let's use TensorBoard:

# This cell starts TensorBoard, which can be slow.

# Load the TensorBoard notebook extension

%load_ext tensorboard

# Google internal version

# %load_ext google3.learning.brain.tensorboard.notebook.extension

# Clear existing results (if any)

!rm -fr "/tmp/tensorboard_logs"

# Export the meta-data to tensorboard.

model_1.make_inspector().export_to_tensorboard("/tmp/tensorboard_logs")

# docs_infra: no_execute

# Start a tensorboard instance.

%tensorboard --logdir "/tmp/tensorboard_logs"

Re-train the model with a different learning algorithm

The learning algorithm is defined by the model class. For example, tfdf.keras.RandomForestModel() trains a Random Forest, while tfdf.keras.GradientBoostedTreesModel() trains a Gradient Boosted Decision Trees model.

The learning algorithms are listed by calling tfdf.keras.get_all_models() or in the learner list.

tfdf.keras.get_all_models()

[tensorflow_decision_forests.keras.RandomForestModel, tensorflow_decision_forests.keras.GradientBoostedTreesModel, tensorflow_decision_forests.keras.CartModel, tensorflow_decision_forests.keras.DistributedGradientBoostedTreesModel]
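Because the returned list contains the model classes themselves, a learner can be picked by name at run time, for example from a configuration value. The sketch below is an illustration only: `find_model_class` is a hypothetical helper, not a TF-DF function, and the three empty classes are stand-ins so that the snippet runs without TF-DF installed.

```python
def find_model_class(name, model_classes):
    """Return the class in `model_classes` whose __name__ equals `name`."""
    by_name = {cls.__name__: cls for cls in model_classes}
    if name not in by_name:
        raise ValueError(
            f"Unknown learner {name!r}; available: {sorted(by_name)}")
    return by_name[name]


# Stand-in classes (assumption: they only mimic the names listed above).
class RandomForestModel:
    pass


class GradientBoostedTreesModel:
    pass


class CartModel:
    pass


candidates = [RandomForestModel, GradientBoostedTreesModel, CartModel]
model_class = find_model_class("GradientBoostedTreesModel", candidates)
print(model_class.__name__)

# With TF-DF installed, the helper could be used the same way:
#   model_class = find_model_class(
#       "GradientBoostedTreesModel", tfdf.keras.get_all_models())
#   model = model_class()
```

Looking classes up by `__name__` keeps the choice of learner in one string, which can be convenient when comparing several algorithms on the same dataset.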

Descriptions of the learning algorithms and their hyper-parameters are also available in the API reference and in the built-in help:

# help works anywhere.

help(tfdf.keras.RandomForestModel)

# ? only works in ipython or notebooks, it usually opens on a separate panel.

tfdf.keras.RandomForestModel?

Help on class RandomForestModel in module tensorflow_decision_forests.keras:

class RandomForestModel(tensorflow_decision_forests.keras.wrappers.RandomForestModel)

| RandomForestModel(*args, **kwargs)

|

| Random Forest learning algorithm.

|

| A Random Forest (https://www.stat.berkeley.edu/~breiman/randomforest2001.pdf)

| is a collection of deep CART decision trees trained independently and without

| pruning. Each tree is trained on a random subset of the original training

| dataset (sampled with replacement).

|

| The algorithm is unique in that it is robust to overfitting, even in extreme

| cases e.g. when there is more features than training examples.

|

| It is probably the most well-known of the Decision Forest training

| algorithms.

|

| Usage example:

|

| ```python

| import tensorflow_decision_forests as tfdf

| import pandas as pd

|

| dataset = pd.read_csv("project/dataset.csv")

| tf_dataset = tfdf.keras.pd_dataframe_to_tf_dataset(dataset, label="my_label")

|

| model = tfdf.keras.RandomForestModel()

| model.fit(tf_dataset)

|

| print(model.summary())

| ```

|

| Attributes:

| task: Task to solve (e.g. Task.CLASSIFICATION, Task.REGRESSION,

| Task.RANKING).

| features: Specify the list and semantic of the input features of the model.

| If not specified, all the available features will be used. If specified

| and if `exclude_non_specified_features=True`, only the features in

| `features` will be used by the model. If "preprocessing" is used,

| `features` corresponds to the output of the preprocessing. In this case,

| it is recommended for the preprocessing to return a dictionary of tensors.

| exclude_non_specified_features: If true, only use the features specified in

| `features`.

| preprocessing: Functional keras model or @tf.function to apply on the input

| feature before the model to train. This preprocessing model can consume

| and return tensors, list of tensors or dictionary of tensors. If

| specified, the model only "sees" the output of the preprocessing (and not

| the raw input). Can be used to prepare the features or to stack multiple

| models on top of each other. Unlike preprocessing done in the tf.dataset,

| the operation in "preprocessing" are serialized with the model.

| postprocessing: Like "preprocessing" but applied on the model output.

| ranking_group: Only for `task=Task.RANKING`. Name of a tf.string feature that

| identifies queries in a query/document ranking task. The ranking group

| is not added automatically for the set of features if

| `exclude_non_specified_features=false`.

| temp_directory: Temporary directory used to store the model Assets after the

| training, and possibly as a work directory during the training. This

| temporary directory is necessary for the model to be exported after

| training e.g. `model.save(path)`. If not specified, `temp_directory` is

| set to a temporary directory using `tempfile.TemporaryDirectory`. This

| directory is deleted when the model python object is garbage-collected.

| verbose: If true, displays information about the training.

| hyperparameter_template: Override the default value of the hyper-parameters.

| If None (default) the default parameters of the library are used. If set,

| `default_hyperparameter_template` refers to one of the following

| preconfigured hyper-parameter sets. Those sets outperforms the default

| hyper-parameters (either generally or in specific scenarios).

| You can omit the version (e.g. remove "@v5") to use the last version of

| the template. In this case, the hyper-parameter can change in between

| releases (not recommended for training in production).

| - better_default@v1: A configuration that is generally better than the

| default parameters without being more expensive. The parameters are:

| winner_take_all=True.

| - benchmark_rank1@v1: Top ranking hyper-parameters on our benchmark

| slightly modified to run in reasonable time. The parameters are:

| winner_take_all=True, categorical_algorithm="RANDOM",

| split_axis="SPARSE_OBLIQUE", sparse_oblique_normalization="MIN_MAX",

| sparse_oblique_num_projections_exponent=1.0.

|

| advanced_arguments: Advanced control of the model that most users won't need

| to use. See `AdvancedArguments` for details.

| num_threads: Number of threads used to train the model. Different learning

| algorithms use multi-threading differently and with different degree of

| efficiency. If specified, `num_threads` field of the

| `advanced_arguments.yggdrasil_deployment_config` has priority.

| name: The name of the model.

| max_vocab_count: Default maximum size of the vocabulary for CATEGORICAL and

| CATEGORICAL_SET features stored as strings. If more unique values exist,

| only the most frequent values are kept, and the remaining values are

| considered as out-of-vocabulary. The value `max_vocab_count` defined in a

| `FeatureUsage` (if any) takes precedence.

| adapt_bootstrap_size_ratio_for_maximum_training_duration: Control how the

| maximum training duration (if set) is applied. If false, the training

| stop when the time is used. If true, adapts the size of the sampled

| dataset used to train each tree such that `num_trees` will train within

| `maximum_training_duration`. Has no effect if there is no maximum

| training duration specified. Default: False.

| allow_na_conditions: If true, the tree training evaluates conditions of the

| type `X is NA` i.e. `X is missing`. Default: False.

| categorical_algorithm: How to learn splits on categorical attributes.

| - `CART`: CART algorithm. Find categorical splits of the form "value \\in

| mask". The solution is exact for binary classification, regression and

| ranking. It is approximated for multi-class classification. This is a

| good first algorithm to use. In case of overfitting (very small

| dataset, large dictionary), the "random" algorithm is a good

| alternative.

| - `ONE_HOT`: One-hot encoding. Find the optimal categorical split of the

| form "attribute == param". This method is similar (but more efficient)

| than converting converting each possible categorical value into a

| boolean feature. This method is available for comparison purpose and

| generally performs worse than other alternatives.

| - `RANDOM`: Best splits among a set of random candidate. Find the a

| categorical split of the form "value \\in mask" using a random search.

| This solution can be seen as an approximation of the CART algorithm.

| This method is a strong alternative to CART. This algorithm is inspired

| from section "5.1 Categorical Variables" of "Random Forest", 2001.

| Default: "CART".

| categorical_set_split_greedy_sampling: For categorical set splits e.g.

| texts. Probability for a categorical value to be a candidate for the

| positive set. The sampling is applied once per node (i.e. not at every

| step of the greedy optimization). Default: 0.1.

| categorical_set_split_max_num_items: For categorical set splits e.g. texts.

| Maximum number of items (prior to the sampling). If more items are

| available, the least frequent items are ignored. Changing this value is

| similar to change the "max_vocab_count" before loading the dataset, with

| the following exception: With `max_vocab_count`, all the remaining items

| are grouped in a special Out-of-vocabulary item. With `max_num_items`,

| this is not the case. Default: -1.

| categorical_set_split_min_item_frequency: For categorical set splits e.g.

| texts. Minimum number of occurrences of an item to be considered.

| Default: 1.

| compute_oob_performances: If true, compute the Out-of-bag evaluation (then

| available in the summary and model inspector). This evaluation is a cheap

| alternative to cross-validation evaluation. Default: True.

| compute_oob_variable_importances: If true, compute the Out-of-bag feature

| importance (then available in the summary and model inspector). Note that

| the OOB feature importance can be expensive to compute. Default: False.

| growing_strategy: How to grow the tree.

| - `LOCAL`: Each node is split independently of the other nodes. In other

| words, as long as a node satisfy the splits "constraints (e.g. maximum

| depth, minimum number of observations), the node will be split. This is

| the "classical" way to grow decision trees.

| - `BEST_FIRST_GLOBAL`: The node with the best loss reduction among all

| the nodes of the tree is selected for splitting. This method is also

| called "best first" or "leaf-wise growth". See "Best-first decision

| tree learning", Shi and "Additive logistic regression : A statistical

| view of boosting", Friedman for more details. Default: "LOCAL".

| in_split_min_examples_check: Whether to check the `min_examples` constraint

| in the split search (i.e. splits leading to one child having less than

| `min_examples` examples are considered invalid) or before the split

| search (i.e. a node can be derived only if it contains more than

| `min_examples` examples). If false, there can be nodes with less than

| `min_examples` training examples. Default: True.

| max_depth: Maximum depth of the tree. `max_depth=1` means that all trees

| will be roots. Negative values are ignored. Default: 16.

| max_num_nodes: Maximum number of nodes in the tree. Set to -1 to disable

| this limit. Only available for `growing_strategy=BEST_FIRST_GLOBAL`.

| Default: None.

| maximum_model_size_in_memory_in_bytes: Limit the size of the model when

| stored in ram. Different algorithms can enforce this limit differently.

| Note that when models are compiled into an inference, the size of the

| inference engine is generally much smaller than the original model.

| Default: -1.0.

| maximum_training_duration_seconds: Maximum training duration of the model

| expressed in seconds. Each learning algorithm is free to use this

| parameter at it sees fit. Enabling maximum training duration makes the

| model training non-deterministic. Default: -1.0.

| min_examples: Minimum number of examples in a node. Default: 5.

| missing_value_policy: Method used to handle missing attribute values.

| - `GLOBAL_IMPUTATION`: Missing attribute values are imputed, with the

| mean (in case of numerical attribute) or the most-frequent-item (in

| case of categorical attribute) computed on the entire dataset (i.e. the

| information contained in the data spec).

| - `LOCAL_IMPUTATION`: Missing attribute values are imputed with the mean

| (numerical attribute) or most-frequent-item (in the case of categorical

| attribute) evaluated on the training examples in the current node.

| - `RANDOM_LOCAL_IMPUTATION`: Missing attribute values are imputed from

| randomly sampled values from the training examples in the current node.

| This method was proposed by Clinic et al. in "Random Survival Forests"

| (https://projecteuclid.org/download/pdfview_1/euclid.aoas/1223908043).

| Default: "GLOBAL_IMPUTATION".

| num_candidate_attributes: Number of unique valid attributes tested for each

| node. An attribute is valid if it has at least a valid split. If

| `num_candidate_attributes=0`, the value is set to the classical default

| value for Random Forest: `sqrt(number of input attributes)` in case of

| classification and `number_of_input_attributes / 3` in case of

| regression. If `num_candidate_attributes=-1`, all the attributes are

| tested. Default: 0.

| num_candidate_attributes_ratio: Ratio of attributes tested at each node. If

| set, it is equivalent to `num_candidate_attributes =

| number_of_input_features x num_candidate_attributes_ratio`. The possible

| values are between ]0, and 1] as well as -1. If not set or equal to -1,

| the `num_candidate_attributes` is used. Default: -1.0.

| num_trees: Number of individual decision trees. Increasing the number of

| trees can increase the quality of the model at the expense of size,

| training speed, and inference latency. Default: 300.

| sorting_strategy: How are sorted the numerical features in order to find

| the splits

| - PRESORT: The features are pre-sorted at the start of the training. This

| solution is faster but consumes much more memory than IN_NODE.

| - IN_NODE: The features are sorted just before being used in the node.

| This solution is slow but consumes little amount of memory.

| . Default: "PRESORT".

| sparse_oblique_normalization: For sparse oblique splits i.e.

| `split_axis=SPARSE_OBLIQUE`. Normalization applied on the features,

| before applying the sparse oblique projections.

| - `NONE`: No normalization.

| - `STANDARD_DEVIATION`: Normalize the feature by the estimated standard

| deviation on the entire train dataset. Also known as Z-Score

| normalization.

| - `MIN_MAX`: Normalize the feature by the range (i.e. max-min) estimated

| on the entire train dataset. Default: None.

| sparse_oblique_num_projections_exponent: For sparse oblique splits i.e.

| `split_axis=SPARSE_OBLIQUE`. Controls of the number of random projections

| to test at each node as `num_features^num_projections_exponent`. Default:

| None.

| sparse_oblique_projection_density_factor: For sparse oblique splits i.e.

| `split_axis=SPARSE_OBLIQUE`. Controls of the number of random projections

| to test at each node as `num_features^num_projections_exponent`. Default:

| None.

| split_axis: What structure of split to consider for numerical features.

| - `AXIS_ALIGNED`: Axis aligned splits (i.e. one condition at a time).

| This is the "classical" way to train a tree. Default value.

| - `SPARSE_OBLIQUE`: Sparse oblique splits (i.e. splits one a small number

| of features) from "Sparse Projection Oblique Random Forests", Tomita et

| al., 2020. Default: "AXIS_ALIGNED".

| winner_take_all: Control how classification trees vote. If true, each tree

| votes for one class. If false, each tree vote for a distribution of

| classes. winner_take_all_inference=false is often preferable. Default:

| True.

|

| Method resolution order:

| RandomForestModel

| tensorflow_decision_forests.keras.wrappers.RandomForestModel

| tensorflow_decision_forests.keras.core.CoreModel

| keras.engine.training.Model

| keras.engine.base_layer.Layer

| tensorflow.python.module.module.Module

| tensorflow.python.training.tracking.tracking.AutoTrackable

| tensorflow.python.training.tracking.base.Trackable

| keras.utils.version_utils.LayerVersionSelector

| keras.utils.version_utils.ModelVersionSelector

| builtins.object

|

| Methods inherited from tensorflow_decision_forests.keras.wrappers.RandomForestModel:

|

| __init__ = wrapper(*args, **kargs)

|

| ----------------------------------------------------------------------

| Static methods inherited from tensorflow_decision_forests.keras.wrappers.RandomForestModel:

|

| capabilities() -> yggdrasil_decision_forests.learner.abstract_learner_pb2.LearnerCapabilities

| Lists the capabilities of the learning algorithm.

|

| predefined_hyperparameters() -> List[tensorflow_decision_forests.keras.core.HyperParameterTemplate]

| Returns a better than default set of hyper-parameters.

|

| They can be used directly with the `hyperparameter_template` argument of the

| model constructor.

|

| These hyper-parameters outperforms the default hyper-parameters (either

| generally or in specific scenarios). Like default hyper-parameters, existing

| pre-defined hyper-parameters cannot change.

|

| ----------------------------------------------------------------------

| Methods inherited from tensorflow_decision_forests.keras.core.CoreModel:

|

| call(self, inputs, training=False)

| Inference of the model.

|

| This method is used for prediction and evaluation of a trained model.

|

| Args:

| inputs: Input tensors.

| training: Is the model being trained. Always False.

|

| Returns:

| Model predictions.

|

| compile(self, metrics=None)

| Configure the model for training.

|

| Unlike for most Keras model, calling "compile" is optional before calling

| "fit".

|

| Args:

| metrics: Metrics to report during training.

|

| Raises:

| ValueError: Invalid arguments.

|

| evaluate(self, *args, **kwargs)

| Returns the loss value & metrics values for the model.

|

| See details on `keras.Model.evaluate`.

|

| Args:

| *args: Passed to `keras.Model.evaluate`.

| **kwargs: Passed to `keras.Model.evaluate`. Scalar test loss (if the

| model has a single output and no metrics) or list of scalars (if the

| model has multiple outputs and/or metrics). See details in

| `keras.Model.evaluate`.

|

| fit(self, x=None, y=None, callbacks=None, **kwargs) -> keras.callbacks.History

| Trains the model.

|

| The following dataset formats are supported:

|

| 1. "x" is a tf.data.Dataset containing a tuple "(features, labels)".

| "features" can be a dictionary a tensor, a list of tensors or a

| dictionary of tensors (recommended). "labels" is a tensor.

|

| 2. "x" is a tensor, list of tensors or dictionary of tensors containing

| the input features. "y" is a tensor.

|

| 3. "x" is a numpy-array, list of numpy-arrays or dictionary of

| numpy-arrays containing the input features. "y" is a numpy-array.

|

| Unlike classical neural networks, the learning algorithm requires to scan

| the training dataset exactly once. Therefore, the dataset should not be

| repeated. The algorithm also does not benefit from shuffling the dataset.

|

| Input features generally do not need to be normalized (numerical) or indexed

| (categorical features stored as string). Also, missing values are well

| supported (i.e. not need to replace missing values).

|

| Pandas Dataframe can be prepared with "dataframe_to_tf_dataset":

| dataset = pandas.Dataframe(...)

| model.fit(pd_dataframe_to_tf_dataset(dataset, label="my_label"))

|

| Some of the learning algorithm will support distributed training with the

| ParameterServerStrategy e.g.:

|

| with tf.distribute.experimental.ParameterServerStrategy(...).scope():

| model = DistributedGradientBoostedTreesModel()

| model.fit(...)

|

| Args:

| x: Training dataset (See details above for the supported formats).

| y: Label of the training dataset. Only used if "x" does not contains the

| labels.

| callbacks: Callbacks triggered during the training.

| **kwargs: Arguments passed to the core keras model's fit.

|

| Returns:

| A `History` object. Its `History.history` attribute is not yet

| implemented for decision forests algorithms, and will return empty.

| All other fields are filled as usual for `Keras.Mode.fit()`.

|

| fit_on_dataset_path(self, train_path: str, label_key: str, weight_key: Union[str, NoneType] = None, ranking_key: Union[str, NoneType] = None, valid_path: Union[str, NoneType] = None, dataset_format: Union[str, NoneType] = 'csv')

| Trains the model on a dataset stored on disk.

|

| This solution is generally more efficient and easier that loading the

| dataset with a tf.Dataset both for local and distributed training.

|

| Usage example:

|

| # Local training

| model = model = keras.GradientBoostedTreesModel()

| model.fit_on_dataset_path(

| train_path="/path/to/dataset.csv",

| label_key="label",

| dataset_format="csv")

| model.save("/model/path")

|

| # Distributed training

| with tf.distribute.experimental.ParameterServerStrategy(...).scope():

| model = model = keras.DistributedGradientBoostedTreesModel()

| model.fit_on_dataset_path(

| train_path="/path/to/dataset@10",

| label_key="label",

| dataset_format="tfrecord+tfe")

| model.save("/model/path")

|

| Args:

| train_path: Path to the training dataset. Support comma separated files,

| shard and glob notation.

| label_key: Name of the label column.

| weight_key: Name of the weighing column.

| ranking_key: Name of the ranking column.

| valid_path: Path to the validation dataset. If not provided, or if the

| learning algorithm does not support/need a validation dataset,

| `valid_path` is ignored.

| dataset_format: Format of the dataset. Should be one of the registered

| dataset format (see

| https://github.com/google/yggdrasil-decision-forests/blob/main/documentation/user_manual#dataset-path-and-format

| for more details). The format "csv" always available but it is

| generally only suited for small datasets.

|

| Returns:

| A `History` object. Its `History.history` attribute is not yet

| implemented for decision forests algorithms, and will return empty.

| All other fields are filled as usual for `Keras.Mode.fit()`.

|

| make_inspector(self) -> tensorflow_decision_forests.component.inspector.inspector.AbstractInspector

| Creates an inspector to access the internal model structure.

|

| Usage example:

|

| ```python

| inspector = model.make_inspector()

| print(inspector.num_trees())

| print(inspector.variable_importances())

| ```

|

| Returns:

| A model inspector.

|

| make_predict_function(self)

| Prediction of the model (!= evaluation).

|

| make_test_function(self)

| Predictions for evaluation.

|

| save(self, filepath: str, overwrite: Union[bool, NoneType] = True, **kwargs)

| Saves the model as a TensorFlow SavedModel.

|

| The exported SavedModel contains a standalone Yggdrasil Decision Forests

| model in the "assets" sub-directory. The Yggdrasil model can be used

| directly using the Yggdrasil API. However, this model does not contain the

| "preprocessing" layer (if any).

|

| Args:

| filepath: Path to the output model.

| overwrite: If true, override an already existing model. If false, raise an

| error if a model already exist.

| **kwargs: Arguments passed to the core keras model's save.

|

| summary(self, line_length=None, positions=None, print_fn=None)

| Shows information about the model.

|

| train_step(self, data)

| Collects training examples.

|

| yggdrasil_model_path_tensor(self) -> Union[tensorflow.python.framework.ops.Tensor, NoneType]

| Gets the path to yggdrasil model, if available.

|

| The effective path can be obtained with:

|

| ```python

| yggdrasil_model_path_tensor().numpy().decode("utf-8")

| ```

|

| Returns:

| Path to the Yggdrasil model.

|

| ----------------------------------------------------------------------

| Methods inherited from keras.engine.training.Model:

|

| __copy__(self)

|

| __deepcopy__(self, memo)

|

| __reduce__(self)

| Helper for pickle.

|

| __setattr__(self, name, value)

| Support self.foo = trackable syntax.

|

| build(self, input_shape)

| Builds the model based on input shapes received.

|

| This is to be used for subclassed models, which do not know at instantiation

| time what their inputs look like.

|

| This method only exists for users who want to call `model.build()` in a

| standalone way (as a substitute for calling the model on real data to

| build it). It will never be called by the framework (and thus it will

| never throw unexpected errors in an unrelated workflow).

|

| Args:

| input_shape: Single tuple, `TensorShape` instance, or list/dict of shapes,

| where shapes are tuples, integers, or `TensorShape` instances.

|

| Raises:

| ValueError:

| 1. In case of invalid user-provided data (not of type tuple,

| list, `TensorShape`, or dict).

| 2. If the model requires call arguments that are agnostic

| to the input shapes (positional or keyword arg in call signature).

| 3. If not all layers were properly built.

| 4. If float type inputs are not supported within the layers.

|

| In each of these cases, the user should build their model by calling it

| on real tensor data.

|

| evaluate_generator(self, generator, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False, verbose=0)

| Evaluates the model on a data generator.

|

| DEPRECATED:

| `Model.evaluate` now supports generators, so there is no longer any need

| to use this endpoint.

|

| fit_generator(self, generator, steps_per_epoch=None, epochs=1, verbose=1, callbacks=None, validation_data=None, validation_steps=None, validation_freq=1, class_weight=None, max_queue_size=10, workers=1, use_multiprocessing=False, shuffle=True, initial_epoch=0)

| Fits the model on data yielded batch-by-batch by a Python generator.

|

| DEPRECATED:

| `Model.fit` now supports generators, so there is no longer any need to use

| this endpoint.

|

| get_config(self)

| Returns the config of the layer.

|

| A layer config is a Python dictionary (serializable)

| containing the configuration of a layer.

| The same layer can be reinstantiated later

| (without its trained weights) from this configuration.

|

| The config of a layer does not include connectivity

| information, nor the layer class name. These are handled

| by `Network` (one layer of abstraction above).

|

| Note that `get_config()` does not guarantee to return a fresh copy of dict

| every time it is called. The callers should make a copy of the returned dict

| if they want to modify it.

|

| Returns:

| Python dictionary.

|

| get_layer(self, name=None, index=None)

| Retrieves a layer based on either its name (unique) or index.

|

| If `name` and `index` are both provided, `index` will take precedence.

| Indices are based on order of horizontal graph traversal (bottom-up).

|

| Args:

| name: String, name of layer.

| index: Integer, index of layer.

|

| Returns:

| A layer instance.

|

| get_weights(self)

| Retrieves the weights of the model.

|

| Returns:

| A flat list of Numpy arrays.

|

| load_weights(self, filepath, by_name=False, skip_mismatch=False, options=None)

| Loads all layer weights, either from a TensorFlow or an HDF5 weight file.

|

| If `by_name` is False weights are loaded based on the network's

| topology. This means the architecture should be the same as when the weights

| were saved. Note that layers that don't have weights are not taken into

| account in the topological ordering, so adding or removing layers is fine as

| long as they don't have weights.

|

| If `by_name` is True, weights are loaded into layers only if they share the

| same name. This is useful for fine-tuning or transfer-learning models where

| some of the layers have changed.

|

| Only topological loading (`by_name=False`) is supported when loading weights

| from the TensorFlow format. Note that topological loading differs slightly

| between TensorFlow and HDF5 formats for user-defined classes inheriting from

| `tf.keras.Model`: HDF5 loads based on a flattened list of weights, while the

| TensorFlow format loads based on the object-local names of attributes to

| which layers are assigned in the `Model`'s constructor.

|

| Args:

| filepath: String, path to the weights file to load. For weight files in

| TensorFlow format, this is the file prefix (the same as was passed

| to `save_weights`). This can also be a path to a SavedModel

| saved from `model.save`.

| by_name: Boolean, whether to load weights by name or by topological

| order. Only topological loading is supported for weight files in

| TensorFlow format.

| skip_mismatch: Boolean, whether to skip loading of layers where there is

| a mismatch in the number of weights, or a mismatch in the shape of

| the weight (only valid when `by_name=True`).

| options: Optional `tf.train.CheckpointOptions` object that specifies

| options for loading weights.

|

| Returns:

| When loading a weight file in TensorFlow format, returns the same status

| object as `tf.train.Checkpoint.restore`. When graph building, restore

| ops are run automatically as soon as the network is built (on first call

| for user-defined classes inheriting from `Model`, immediately if it is

| already built).

|

| When loading weights in HDF5 format, returns `None`.

|

| Raises:

| ImportError: If `h5py` is not available and the weight file is in HDF5

| format.

| ValueError: If `skip_mismatch` is set to `True` when `by_name` is

| `False`.

|

| make_train_function(self, force=False)

| Creates a function that executes one step of training.

|

| This method can be overridden to support custom training logic.

| This method is called by `Model.fit` and `Model.train_on_batch`.

|

| Typically, this method directly controls `tf.function` and

| `tf.distribute.Strategy` settings, and delegates the actual training

| logic to `Model.train_step`.

|

| This function is cached the first time `Model.fit` or

| `Model.train_on_batch` is called. The cache is cleared whenever

| `Model.compile` is called. You can skip the cache and generate again the

| function with `force=True`.

|

| Args:

| force: Whether to regenerate the train function and skip the cached

| function if available.

|

| Returns:

| Function. The function created by this method should accept a

| `tf.data.Iterator`, and return a `dict` containing values that will

| be passed to `tf.keras.Callbacks.on_train_batch_end`, such as

| `{'loss': 0.2, 'accuracy': 0.7}`.

|

| predict(self, x, batch_size=None, verbose=0, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False)

| Generates output predictions for the input samples.

|

| Computation is done in batches. This method is designed for performance in

| large scale inputs. For small amount of inputs that fit in one batch,

| directly using `__call__()` is recommended for faster execution, e.g.,

| `model(x)`, or `model(x, training=False)` if you have layers such as

| `tf.keras.layers.BatchNormalization` that behaves differently during

| inference. Also, note the fact that test loss is not affected by

| regularization layers like noise and dropout.

|

| Args:

| x: Input samples. It could be:

| - A Numpy array (or array-like), or a list of arrays

| (in case the model has multiple inputs).

| - A TensorFlow tensor, or a list of tensors

| (in case the model has multiple inputs).

| - A `tf.data` dataset.

| - A generator or `keras.utils.Sequence` instance.

| A more detailed description of unpacking behavior for iterator types

| (Dataset, generator, Sequence) is given in the `Unpacking behavior

| for iterator-like inputs` section of `Model.fit`.

| batch_size: Integer or `None`.

| Number of samples per batch.

| If unspecified, `batch_size` will default to 32.

| Do not specify the `batch_size` if your data is in the

| form of a dataset, generator, or `keras.utils.Sequence` instance

| (since they generate batches).

| verbose: Verbosity mode, 0 or 1.

| steps: Total number of steps (batches of samples)

| before declaring the prediction round finished.

| Ignored with the default value of `None`. If x is a `tf.data`

| dataset and `steps` is None, `predict()` will

| run until the input dataset is exhausted.

| callbacks: List of `keras.callbacks.Callback` instances.

| List of callbacks to apply during prediction.

| See [callbacks](/api_docs/python/tf/keras/callbacks).

| max_queue_size: Integer. Used for generator or `keras.utils.Sequence`

| input only. Maximum size for the generator queue.

| If unspecified, `max_queue_size` will default to 10.

| workers: Integer. Used for generator or `keras.utils.Sequence` input

| only. Maximum number of processes to spin up when using

| process-based threading. If unspecified, `workers` will default

| to 1.

| use_multiprocessing: Boolean. Used for generator or

| `keras.utils.Sequence` input only. If `True`, use process-based

| threading. If unspecified, `use_multiprocessing` will default to

| `False`. Note that because this implementation relies on

| multiprocessing, you should not pass non-picklable arguments to

| the generator as they can't be passed easily to child processes.

|

| See the discussion of `Unpacking behavior for iterator-like inputs` for

| `Model.fit`. Note that `Model.predict` uses the same interpretation rules as

| `Model.fit` and `Model.evaluate`, so inputs must be unambiguous for all

| three methods.

|

| Returns:

| Numpy array(s) of predictions.

|

| Raises:

| RuntimeError: If `model.predict` is wrapped in a `tf.function`.

| ValueError: In case of mismatch between the provided

| input data and the model's expectations,

| or in case a stateful model receives a number of samples

| that is not a multiple of the batch size.

|
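As a rough illustration of the batching described for `predict` above, the following pure-Python sketch splits in-memory samples into batches using the documented default of 32 and stops early when `steps` is given. The helper `iter_prediction_batches` is a hypothetical name. It does not call Keras and only mirrors the arithmetic of `batch_size` and `steps`.

```python
def iter_prediction_batches(samples, batch_size=None, steps=None):
    """Yield consecutive batches, mimicking the documented defaults."""
    if batch_size is None:
        batch_size = 32  # Documented default when unspecified.
    produced = 0
    for start in range(0, len(samples), batch_size):
        if steps is not None and produced >= steps:
            break  # Stop once `steps` batches have been produced.
        yield samples[start:start + batch_size]
        produced += 1


samples = list(range(70))
batches = list(iter_prediction_batches(samples))
batch_sizes = [len(b) for b in batches]
limited = list(iter_prediction_batches(samples, batch_size=10, steps=2))
```

With 70 samples and the default batch size, the last batch is smaller than the others. With `steps=2`, only the first two batches are produced.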

| predict_generator(self, generator, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False, verbose=0)

| Generates predictions for the input samples from a data generator.

|

| DEPRECATED:

| `Model.predict` now supports generators, so there is no longer any need

| to use this endpoint.

|

| predict_on_batch(self, x)

| Returns predictions for a single batch of samples.

|

| Args:

| x: Input data. It could be:

| - A Numpy array (or array-like), or a list of arrays (in case the

| model has multiple inputs).

| - A TensorFlow tensor, or a list of tensors (in case the model has

| multiple inputs).

|

| Returns:

| Numpy array(s) of predictions.

|

| Raises:

| RuntimeError: If `model.predict_on_batch` is wrapped in a `tf.function`.

|

| predict_step(self, data)

| The logic for one inference step.

|

| This method can be overridden to support custom inference logic.

| This method is called by `Model.make_predict_function`.

|

| This method should contain the mathematical logic for one step of inference.

| This typically includes the forward pass.

|

| Configuration details for *how* this logic is run (e.g. `tf.function` and

| `tf.distribute.Strategy` settings), should be left to

| `Model.make_predict_function`, which can also be overridden.

|

| Args:

| data: A nested structure of `Tensor`s.

|

| Returns:

| The result of one inference step, typically the output of calling the

| `Model` on data.

|

| reset_metrics(self)

| Resets the state of all the metrics in the model.

|

| Examples:

|

| >>> inputs = tf.keras.layers.Input(shape=(3,))

| >>> outputs = tf.keras.layers.Dense(2)(inputs)

| >>> model = tf.keras.models.Model(inputs=inputs, outputs=outputs)

| >>> model.compile(optimizer="Adam", loss="mse", metrics=["mae"])

|

| >>> x = np.random.random((2, 3))

| >>> y = np.random.randint(0, 2, (2, 2))

| >>> _ = model.fit(x, y, verbose=0)

| >>> assert all(float(m.result()) for m in model.metrics)

|

| >>> model.reset_metrics()

| >>> assert all(float(m.result()) == 0 for m in model.metrics)

|

| reset_states(self)

|

| save_spec(self, dynamic_batch=True)

| Returns the `tf.TensorSpec` of call inputs as a tuple `(args, kwargs)`.

|

| This value is automatically defined after calling the model for the first

| time. Afterwards, you can use it when exporting the model for serving:

|

| ```python

| model = tf.keras.Model(...)

|

| @tf.function

| def serve(*args, **kwargs):

|     outputs = model(*args, **kwargs)

|     # Apply postprocessing steps, or add additional outputs.

|     ...

|     return outputs

|

| # arg_specs is `[tf.TensorSpec(...), ...]`. kwarg_specs, in this example, is

| # an empty dict since functional models do not use keyword arguments.

| arg_specs, kwarg_specs = model.save_spec()

|

| model.save(path, signatures={

|     'serving_default': serve.get_concrete_function(*arg_specs, **kwarg_specs)

| })

| ```

|

| Args:

| dynamic_batch: Whether to set the batch sizes of all the returned

| `tf.TensorSpec` to `None`. (Note that when defining functional or

| Sequential models with `tf.keras.Input([...], batch_size=X)`, the

| batch size will always be preserved). Defaults to `True`.

| Returns:

| If the model inputs are defined, returns a tuple `(args, kwargs)`. All

| elements in `args` and `kwargs` are `tf.TensorSpec`.

| If the model inputs are not defined, returns `None`.

| The model inputs are automatically set when calling the model,

| `model.fit`, `model.evaluate` or `model.predict`.

|

| save_weights(self, filepath, overwrite=True, save_format=None, options=None)

| Saves all layer weights.

|

| Either saves in HDF5 or in TensorFlow format based on the `save_format`

| argument.

|
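The `filepath` and `save_format` argument descriptions for `save_weights` imply a simple selection rule, sketched below in plain Python. The helper `infer_weights_format` is a hypothetical name and not part of Keras. The text does not say what happens when an explicit `save_format` conflicts with the file suffix, so the sketch simply returns the explicit value (an assumption).

```python
import os


def infer_weights_format(filepath, save_format=None):
    """Return 'h5' or 'tf' following the rule documented for `save_weights`."""
    if save_format is not None:
        # Assumption: an explicit, valid format is returned as given.
        if save_format not in ("tf", "h5"):
            raise ValueError(f"Unknown save_format: {save_format!r}")
        return save_format
    # With no explicit format, the file suffix decides.
    suffix = os.path.splitext(os.fspath(filepath))[1]
    return "h5" if suffix in (".h5", ".keras") else "tf"


h5_choice = infer_weights_format("weights.h5")
tf_choice = infer_weights_format("checkpoints/model")
```

Here `"weights.h5"` selects the HDF5 format, while a suffix-free prefix such as `"checkpoints/model"` falls back to the TensorFlow format.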

| When saving in HDF5 format, the weight file has:

| - `layer_names` (attribute), a list of strings

| (ordered names of model layers).

| - For every layer, a `group` named `layer.name`

| - For every such layer group, a group attribute `weight_names`,

| a list of strings

| (ordered names of the layer's weight tensors).

| - For every weight in the layer, a dataset

| storing the weight value, named after the weight tensor.

|

| When saving in TensorFlow format, all objects referenced by the network are

| saved in the same format as `tf.train.Checkpoint`, including any `Layer`

| instances or `Optimizer` instances assigned to object attributes. For

| networks constructed from inputs and outputs using `tf.keras.Model(inputs,

| outputs)`, `Layer` instances used by the network are tracked/saved

| automatically. For user-defined classes which inherit from `tf.keras.Model`,

| `Layer` instances must be assigned to object attributes, typically in the

| constructor. See the documentation of `tf.train.Checkpoint` and

| `tf.keras.Model` for details.

|

| While the formats are the same, do not mix `save_weights` and

| `tf.train.Checkpoint`. Checkpoints saved by `Model.save_weights` should be

| loaded using `Model.load_weights`. Checkpoints saved using

| `tf.train.Checkpoint.save` should be restored using the corresponding

| `tf.train.Checkpoint.restore`. Prefer `tf.train.Checkpoint` over

| `save_weights` for training checkpoints.

|

| The TensorFlow format matches objects and variables by starting at a root

| object, `self` for `save_weights`, and greedily matching attribute

| names. For `Model.save` this is the `Model`, and for `Checkpoint.save` this

| is the `Checkpoint` even if the `Checkpoint` has a model attached. This

| means saving a `tf.keras.Model` using `save_weights` and loading into a

| `tf.train.Checkpoint` with a `Model` attached (or vice versa) will not match

| the `Model`'s variables. See the

| [guide to training checkpoints](https://www.tensorflow.org/guide/checkpoint)

| for details on the TensorFlow format.

|

| Args:

| filepath: String or PathLike, path to the file to save the weights to.

| When saving in TensorFlow format, this is the prefix used for

| checkpoint files (multiple files are generated). Note that the '.h5'

| suffix causes weights to be saved in HDF5 format.

| overwrite: Whether to silently overwrite any existing file at the

| target location, or provide the user with a manual prompt.

| save_format: Either 'tf' or 'h5'. A `filepath` ending in '.h5' or

| '.keras' will default to HDF5 if `save_format` is `None`. Otherwise

| `None` defaults to 'tf'.

| options: Optional `tf.train.CheckpointOptions` object that specifies