This notebook teaches you how to train a pose classification model using MoveNet and TensorFlow Lite. The result is a new TensorFlow Lite model that accepts the output from the MoveNet model as its input and outputs a pose classification, such as the name of a yoga pose.

The procedure in this notebook consists of 3 parts:

- Part 1: Preprocess the pose classification training data into a CSV file that specifies the landmarks (body keypoints) detected by the MoveNet model, along with the ground truth pose labels.

- Part 2: Build and train a pose classification model that takes the landmark coordinates from the CSV file as input and outputs the predicted labels.

- Part 3: Convert the pose classification model to TFLite.

By default, this notebook uses an image dataset with labeled yoga poses. Part 1 also includes a section where you can upload your own image dataset of poses.


Preparation

In this section, you'll import the necessary libraries and define several functions to preprocess the training images into a CSV file that contains the landmark coordinates and ground truth labels.

Nothing observable happens here, but you can expand the hidden code cells to see the implementation of some of the functions we'll call later on.

If you only want to create the CSV file without knowing all the details, just run this section and proceed to Part 1.

!pip install -q opencv-python

import csv
import cv2
import itertools
import numpy as np
import pandas as pd
import os
import sys
import tempfile
import tqdm

from matplotlib import pyplot as plt
from matplotlib.collections import LineCollection

import tensorflow as tf
import tensorflow_hub as hub
from tensorflow import keras

from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

Code to run pose estimation using MoveNet

Functions to run pose estimation with MoveNet

# Download model from TF Hub and check out inference code from GitHub
!wget -q -O movenet_thunder.tflite https://tfhub.dev/google/lite-model/movenet/singlepose/thunder/tflite/float16/4?lite-format=tflite
!git clone https://github.com/tensorflow/examples.git
pose_sample_rpi_path = os.path.join(os.getcwd(), 'examples/lite/examples/pose_estimation/raspberry_pi')
sys.path.append(pose_sample_rpi_path)

# Load MoveNet Thunder model
import utils
from data import BodyPart
from ml import Movenet
movenet = Movenet('movenet_thunder')

# Define function to run pose estimation using MoveNet Thunder.
# You'll apply MoveNet's cropping algorithm and run inference multiple times on
# the input image to improve pose estimation accuracy.
def detect(input_tensor, inference_count=3):
  """Runs detection on an input image.

  Args:
    input_tensor: A [height, width, 3] Tensor of type tf.float32.
      Note that height and width can be anything since the image will be
      immediately resized according to the needs of the model within this
      function.
    inference_count: Number of times the model should run repeatedly on the
      same input image to improve detection accuracy.

  Returns:
    A Person entity detected by the MoveNet.SinglePose.
  """
  image_height, image_width, channel = input_tensor.shape

  # Detect pose using the full input image. Keep the result so that the
  # function still returns a Person when inference_count is 1.
  person = movenet.detect(input_tensor.numpy(), reset_crop_region=True)

  # Repeatedly use the previous detection result to identify the region of
  # interest and only crop that region to improve detection accuracy
  for _ in range(inference_count - 1):
    person = movenet.detect(input_tensor.numpy(),
                            reset_crop_region=False)

  return person

Cloning into 'examples'...
remote: Enumerating objects: 20141, done.
remote: Counting objects: 100% (1961/1961), done.
remote: Compressing objects: 100% (1055/1055), done.
remote: Total 20141 (delta 909), reused 1584 (delta 595), pack-reused 18180
Receiving objects: 100% (20141/20141), 33.15 MiB | 25.83 MiB/s, done.
Resolving deltas: 100% (11003/11003), done.
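After the first pass over the full image, detect() runs further passes with reset_crop_region=False, so MoveNet's cropping logic (implemented in the ml.Movenet class of the examples repository) focuses on a region of interest derived from the previous result. The sketch below is only a simplified, hypothetical illustration of that idea, not the repository's algorithm: it assumes keypoints are plain (y, x, score) tuples with coordinates normalized to [0, 1], and it computes a padded bounding box around the confidently detected keypoints. The names crop_region_from_keypoints, min_score and padding are made up for this example.

```python
from typing import List, Tuple

# Hypothetical keypoint format for this sketch: (y, x, score), with y and x
# normalized to [0, 1]. This is NOT the Person/KeyPoint type from the repo.
Keypoint = Tuple[float, float, float]
Box = Tuple[float, float, float, float]  # (y_min, x_min, y_max, x_max)


def crop_region_from_keypoints(keypoints: List[Keypoint],
                               min_score: float = 0.2,
                               padding: float = 0.1) -> Box:
  """Returns a padded box around keypoints whose score reaches min_score.

  Falls back to the full image when no keypoint is confident enough,
  which corresponds to resetting the crop region.
  """
  confident = [(y, x) for y, x, score in keypoints if score >= min_score]
  if not confident:
    return (0.0, 0.0, 1.0, 1.0)
  ys = [y for y, _ in confident]
  xs = [x for _, x in confident]
  # Expand the tight box by `padding` on every side, then clamp to the image.
  y_min = max(0.0, min(ys) - padding)
  x_min = max(0.0, min(xs) - padding)
  y_max = min(1.0, max(ys) + padding)
  x_max = min(1.0, max(xs) + padding)
  return (y_min, x_min, y_max, x_max)


# Two confident keypoints and one low-confidence outlier that is ignored.
print(crop_region_from_keypoints(
    [(0.3, 0.4, 0.9), (0.6, 0.5, 0.8), (0.95, 0.05, 0.05)]))
```

Ignoring low-score keypoints keeps a single spurious detection from inflating the crop, and the padding leaves room for limbs that move between passes.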

Functions to visualize the pose estimation results.

def draw_prediction_on_image(
    image, person, crop_region=None, close_figure=True,
    keep_input_size=False):
  """Draws the keypoint predictions on image.

  Args:
    image: A numpy array with shape [height, width, channel] representing the
      pixel values of the input image.
    person: A person entity returned from the MoveNet.SinglePose model.
    crop_region: Not used by this implementation.
    close_figure: Whether to close the plt figure after the function returns.
    keep_input_size: Whether to keep the size of the input image.

  Returns:
    A numpy array with shape [out_height, out_width, channel] representing the
    image overlaid with keypoint predictions.
  """
  # Draw the detection result on top of the image.
  image_np = utils.visualize(image, [person])

  # Plot the image with detection results.
  height, width, channel = image.shape
  aspect_ratio = float(width) / height
  fig, ax = plt.subplots(figsize=(12 * aspect_ratio, 12))
  im = ax.imshow(image_np)

  if close_figure:
    plt.close(fig)

  if not keep_input_size:
    image_np = utils.keep_aspect_ratio_resizer(image_np, (512, 512))

  return image_np
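draw_prediction_on_image() fixes the figure height at 12 inches and derives the width from the image's aspect ratio; unless keep_input_size is set, it also resizes the overlay with utils.keep_aspect_ratio_resizer using (512, 512). To make that arithmetic concrete, here is a small sketch with two hypothetical helpers: figure_size reproduces the figsize expression above, and fit_within computes the size of an image scaled to fit inside a 512x512 box without distortion. fit_within is an illustration of aspect-ratio-preserving scaling only; it is an assumption that it resembles the repository helper, whose exact behavior (for example any padding) is defined in the examples repository.

```python
from typing import Tuple


def figure_size(width: int, height: int,
                base: float = 12.0) -> Tuple[float, float]:
  """figsize used above: fixed height, width scaled by the aspect ratio."""
  aspect_ratio = float(width) / height
  return (base * aspect_ratio, base)


def fit_within(width: int, height: int,
               box: Tuple[int, int] = (512, 512)) -> Tuple[int, int]:
  """Hypothetical helper: scales (width, height) to fit inside box."""
  box_width, box_height = box
  # The tighter of the two ratios decides the scale, so neither side overflows.
  scale = min(box_width / width, box_height / height)
  return (round(width * scale), round(height * scale))


print(figure_size(960, 640))  # (18.0, 12.0)
print(fit_within(960, 640))   # (512, 341)
```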

Code to load the images, detect pose landmarks and save them into a CSV file

class MoveNetPreprocessor(object):
  """Helper class to preprocess pose sample images for classification."""

  def __init__(self,
               images_in_folder,
               images_out_folder,
               csvs_out_path):
    """Creates a preprocessor to detect poses from images and save them as CSV.

    Args:
      images_in_folder: Path to the folder with the input images. It should
        follow this structure:
        yoga_poses
        |__ downdog
            |______ 00000128.jpg
            |______ 00000181.bmp
            |______ ...
        |__ goddess
            |______ 00000243.jpg
            |______ 00000306.jpg
            |______ ...
        ...
      images_out_folder: Path to write the images overlaid with detected
        landmarks. These images are useful when you need to debug accuracy
        issues.
      csvs_out_path: Path to write the CSV containing the detected landmark
        coordinates and label of each image that can be used to train a pose
        classification model.
    """
    self._images_in_folder = images_in_folder
    self._images_out_folder = images_out_folder
    self._csvs_out_path = csvs_out_path
    self._messages = []

    # Create a temp dir to store the pose CSVs per class
    self._csvs_out_folder_per_class = tempfile.mkdtemp()

    # Get the list of pose classes (one subfolder per class; hidden entries
    # such as .DS_Store are ignored)
    self._pose_class_names = sorted(
        [n for n in os.listdir(self._images_in_folder) if not n.startswith('.')]
        )

  def process(self, per_pose_class_limit=None, detection_threshold=0.1):
    """Preprocesses images in the given folder.

    Args:
      per_pose_class_limit: Number of images to load per pose class. As
        preprocessing usually takes time, this parameter can be specified to
        reduce the size of the dataset for testing.
      detection_threshold: Only keep images whose landmark confidence scores
        are all at or above this threshold.
    """
    # Loop through the classes and preprocess their images
    for pose_class_name in self._pose_class_names:
      print('Preprocessing', pose_class_name, file=sys.stderr)

      # Paths for the pose class.
      images_in_folder = os.path.join(self._images_in_folder, pose_class_name)
      images_out_folder = os.path.join(self._images_out_folder, pose_class_name)
      csv_out_path = os.path.join(self._csvs_out_folder_per_class,
                                  pose_class_name + '.csv')
      if not os.path.exists(images_out_folder):
        os.makedirs(images_out_folder)

      # Detect landmarks in each image and write them to a CSV file
      with open(csv_out_path, 'w') as csv_out_file:
        csv_out_writer = csv.writer(csv_out_file,
                                    delimiter=',',
                                    quoting=csv.QUOTE_MINIMAL)
        # Get list of images
        image_names = sorted(
            [n for n in os.listdir(images_in_folder) if not n.startswith('.')])
        if per_pose_class_limit is not None:
          image_names = image_names[:per_pose_class_limit]

        valid_image_count = 0

        # Detect pose landmarks from each image
        for image_name in tqdm.tqdm(image_names):
          image_path = os.path.join(images_in_folder, image_name)

          # Read and decode the image once; skip files that fail to decode
          try:
            image = tf.io.read_file(image_path)
            image = tf.io.decode_jpeg(image)
          except Exception:
            self._messages.append('Skipped ' + image_path + '. Invalid image.')
            continue

          image_height, image_width, channel = image.shape

          # Skip images that aren't RGB because MoveNet requires RGB images
          if channel != 3:
            self._messages.append('Skipped ' + image_path +
                                  '. Image isn\'t in RGB format.')
            continue
          person = detect(image)

          # Save landmarks only if all landmarks were detected confidently
          min_landmark_score = min(
              [keypoint.score for keypoint in person.keypoints])
          should_keep_image = min_landmark_score >= detection_threshold
          if not should_keep_image:
            self._messages.append('Skipped ' + image_path +
                                  '. No pose was confidently detected.')
            continue

          valid_image_count += 1

          # Draw the prediction result on top of the image for debugging later
          output_overlay = draw_prediction_on_image(
              image.numpy().astype(np.uint8), person,
              close_figure=True, keep_input_size=True)

          # Write detection result into an image file
          output_frame = cv2.cvtColor(output_overlay, cv2.COLOR_RGB2BGR)
          cv2.imwrite(os.path.join(images_out_folder, image_name), output_frame)

          # Get landmarks and scale them to the same size as the input image
          pose_landmarks = np.array(
              [[keypoint.coordinate.x, keypoint.coordinate.y, keypoint.score]
               for keypoint in person.keypoints],
              dtype=np.float32)

          # Write the landmark coordinates to its per-class CSV file.
          # Use the builtin str: np.str was deprecated in NumPy 1.20.
          coordinates = pose_landmarks.flatten().astype(str).tolist()
          csv_out_writer.writerow([image_name] + coordinates)

        if not valid_image_count:
          raise RuntimeError(
              'No valid images found for the "{}" class.'
              .format(pose_class_name))

    # Print the error messages collected during preprocessing.
    print('\n'.join(self._messages))

    # Combine all per-class CSVs into a single output file
    all_landmarks_df = self._all_landmarks_as_dataframe()
    all_landmarks_df.to_csv(self._csvs_out_path, index=False)

  def class_names(self):
    """List of classes found in the training dataset."""
    return self._pose_class_names

  def _all_landmarks_as_dataframe(self):
    """Merge all per-class CSVs into a single dataframe."""
    total_df = None
    for class_index, class_name in enumerate(self._pose_class_names):
      csv_out_path = os.path.join(self._csvs_out_folder_per_class,
                                  class_name + '.csv')
      per_class_df = pd.read_csv(csv_out_path, header=None)

      # Add the labels
      per_class_df['class_no'] = [class_index]*len(per_class_df)
      per_class_df['class_name'] = [class_name]*len(per_class_df)

      # Prepend the folder name to the filename column (first column)
      per_class_df[per_class_df.columns[0]] = (os.path.join(class_name, '')
        + per_class_df[per_class_df.columns[0]].astype(str))

      if total_df is None:
        # For the first class, assign its data to the total dataframe
        total_df = per_class_df
      else:
        # Concatenate each class's data into the total dataframe
        total_df = pd.concat([total_df, per_class_df], axis=0)

    list_name = [[bodypart.name + '_x', bodypart.name + '_y',
                  bodypart.name + '_score'] for bodypart in BodyPart]
    header_name = []
    for columns_name in list_name:
      header_name += columns_name
    header_name = ['file_name'] + header_name
    header_map = {total_df.columns[i]: header_name[i]
                  for i in range(len(header_name))}

    total_df.rename(header_map, axis=1, inplace=True)

    return total_df
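The merged CSV produced by _all_landmarks_as_dataframe() has one row per image: the file name (prefixed with its class folder), an (x, y, score) triple per body part, then class_no and class_name. The stdlib-only sketch below rebuilds that column layout and merge without pandas or TensorFlow. It assumes the 17 names in BODY_PARTS match the names and order of the BodyPart enum in the examples repository (the 17 COCO keypoints that MoveNet predicts); check data.py there before relying on them. header_columns and merge_rows are hypothetical helpers.

```python
from typing import Dict, List

# Assumed to mirror the BodyPart enum in the examples repository's data.py.
BODY_PARTS = [
    'NOSE', 'LEFT_EYE', 'RIGHT_EYE', 'LEFT_EAR', 'RIGHT_EAR',
    'LEFT_SHOULDER', 'RIGHT_SHOULDER', 'LEFT_ELBOW', 'RIGHT_ELBOW',
    'LEFT_WRIST', 'RIGHT_WRIST', 'LEFT_HIP', 'RIGHT_HIP',
    'LEFT_KNEE', 'RIGHT_KNEE', 'LEFT_ANKLE', 'RIGHT_ANKLE',
]


def header_columns() -> List[str]:
  """file_name, <PART>_x/_y/_score for each part, then the two label columns."""
  columns = ['file_name']
  for part in BODY_PARTS:
    columns += [part + '_x', part + '_y', part + '_score']
  return columns + ['class_no', 'class_name']


def merge_rows(per_class_rows: Dict[str, List[List[str]]]) -> List[List[str]]:
  """Merges per-class rows, prefixing the class folder and appending labels.

  Classes are numbered in sorted order, like the sorted folder listing used
  by MoveNetPreprocessor. Uses a POSIX-style '/' separator.
  """
  merged = []
  for class_no, class_name in enumerate(sorted(per_class_rows)):
    for row in per_class_rows[class_name]:
      file_name, coordinates = row[0], row[1:]
      merged.append([class_name + '/' + file_name] + coordinates
                    + [str(class_no), class_name])
  return merged


fake_coords = ['0.5'] * (3 * len(BODY_PARTS))  # placeholder landmark values
rows = merge_rows({'tree': [['a.jpg'] + fake_coords],
                   'chair': [['b.jpg'] + fake_coords]})
print(len(header_columns()))     # 54
print(rows[0][0], rows[0][-2:])  # chair/b.jpg ['0', 'chair']
```

So each image contributes 17 x 3 = 51 landmark features, which is the input the classifier in Part 2 works from.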

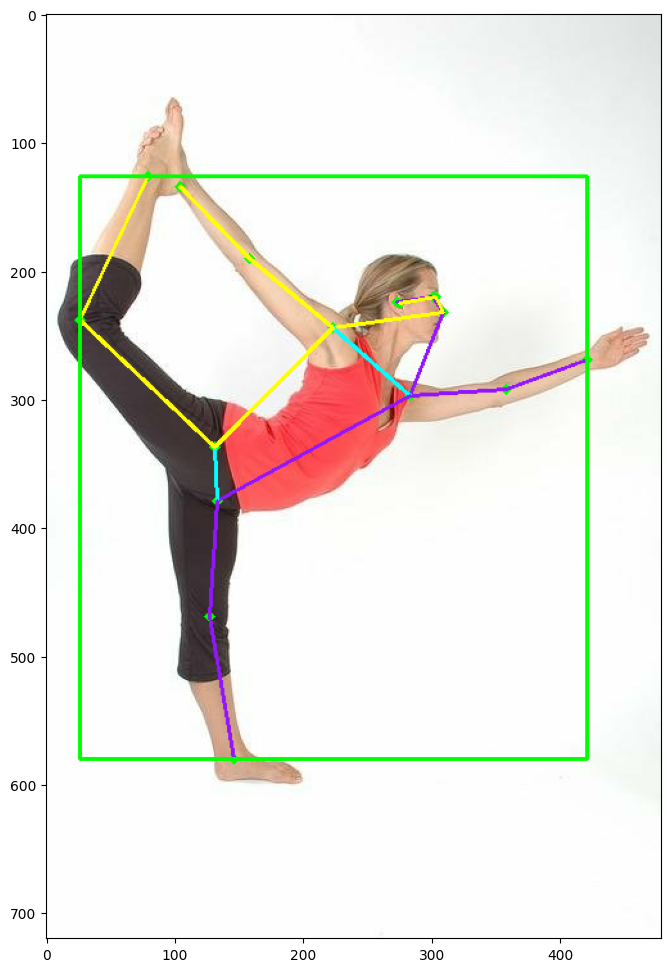

(Optional) Code snippet to try out the MoveNet pose estimation logic

test_image_url = "https://cdn.pixabay.com/photo/2017/03/03/17/30/yoga-2114512_960_720.jpg"
!wget -O /tmp/image.jpeg {test_image_url}

if len(test_image_url):
  image = tf.io.read_file('/tmp/image.jpeg')
  image = tf.io.decode_jpeg(image)
  person = detect(image)
  _ = draw_prediction_on_image(image.numpy(), person, crop_region=None,
                               close_figure=False, keep_input_size=True)

--2021-12-21 12:07:36-- https://cdn.pixabay.com/photo/2017/03/03/17/30/yoga-2114512_960_720.jpg Resolving cdn.pixabay.com (cdn.pixabay.com)... 104.18.20.183, 104.18.21.183, 2606:4700::6812:14b7, ... Connecting to cdn.pixabay.com (cdn.pixabay.com)|104.18.20.183|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 28665 (28K) [image/jpeg] Saving to: ‘/tmp/image.jpeg’ /tmp/image.jpeg 100%[===================>] 27.99K --.-KB/s in 0s 2021-12-21 12:07:36 (111 MB/s) - ‘/tmp/image.jpeg’ saved [28665/28665]

Part 1: Preprocess the input images

Because the input for our pose classifier is the output landmarks from the MoveNet model, we need to generate our training dataset by running labeled images through MoveNet and then capturing all the landmark data and ground truth labels into a CSV file.
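For each image, MoveNetPreprocessor.process() keeps the detection only when every keypoint score is at least detection_threshold (0.1 by default), then writes the file name followed by the flattened x, y, score values as one CSV row. The sketch below isolates that per-image step using plain (x, y, score) tuples as a stand-in for the repository's Person type; detection_to_row is a hypothetical name, and the coordinates are made-up values.

```python
from typing import List, Optional, Tuple

# (x, y, score) per keypoint: a plain-tuple stand-in for person.keypoints.
Keypoint = Tuple[float, float, float]


def detection_to_row(image_name: str, keypoints: List[Keypoint],
                     detection_threshold: float = 0.1) -> Optional[List[str]]:
  """Returns a CSV row, or None if any keypoint score is below the threshold."""
  if min(score for _, _, score in keypoints) < detection_threshold:
    return None  # Corresponds to the "No pose was confidently detected" skip.
  # Flatten [(x, y, s), ...] into [x, y, s, x, y, s, ...] as strings.
  flat = [str(value) for keypoint in keypoints for value in keypoint]
  return [image_name] + flat


print(detection_to_row('tree001.jpg', [(120.0, 48.5, 0.9), (131.0, 52.0, 0.7)]))
print(detection_to_row('tree002.jpg', [(120.0, 48.5, 0.9), (131.0, 52.0, 0.05)]))
```

Because a single low-scoring keypoint rejects the whole image, hard poses where a limb is occluded are the ones most likely to be skipped, as the preprocessing log further down shows.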

The dataset we've provided for this tutorial is a CG-generated yoga pose dataset. It contains images of multiple CG-generated models doing 5 different yoga poses. The directory is already split into a train dataset and a test dataset.

So in this section, we'll download the yoga dataset and run it through MoveNet so we can capture all the landmarks into a CSV file. However, it takes about 15 minutes to feed our yoga dataset to MoveNet and generate this CSV file. As an alternative, you can download a pre-existing CSV file for the yoga dataset by setting the is_skip_step_1 parameter below to True. That way, you'll skip this step and instead download the same CSV file that this preprocessing step would create.

On the other hand, if you want to train the pose classifier with your own image dataset, you need to upload your images and run this preprocessing step (leave is_skip_step_1 set to False). Follow the instructions below to upload your own pose dataset.

is_skip_step_1 = False
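The flags in Part 1 interact as follows, judging from the conditions in this part's code cells: the yoga dataset is downloaded only when is_skip_step_1 and use_custom_dataset are both False, a custom dataset is extracted whenever use_custom_dataset is True and split only when dataset_is_split is False, and MoveNet preprocessing runs whenever is_skip_step_1 is False. The hypothetical helper below only encodes those conditions as a quick reference; it does not download or process anything, and the step labels are descriptive strings, not notebook functions.

```python
from typing import List


def planned_steps(is_skip_step_1: bool, use_custom_dataset: bool,
                  dataset_is_split: bool) -> List[str]:
  """Lists which Part 1 steps would run for a given flag combination."""
  steps = []
  if use_custom_dataset:
    steps.append('extract custom archive')
    if not dataset_is_split:
      steps.append('split into train/test')
  elif not is_skip_step_1:
    steps.append('download yoga dataset')
  if is_skip_step_1:
    steps.append('download pre-existing CSV')
  else:
    steps.append('run MoveNet preprocessing')
  return steps


# Default notebook settings.
print(planned_steps(is_skip_step_1=False, use_custom_dataset=False,
                    dataset_is_split=False))
```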

(Optional) Upload your own pose dataset

use_custom_dataset = False

dataset_is_split = False
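If you are unsure which value dataset_is_split should have, you could check the top level of your extracted folder: a split dataset has train and test subdirectories, while an unsplit one has one subdirectory per pose class, as in the layouts described below. The helper looks_split is a hypothetical convenience, not part of the notebook, and it only inspects directory names; the demo builds two throwaway folders instead of touching your data.

```python
import os
import tempfile


def looks_split(dataset_dir: str) -> bool:
  """True if dataset_dir has both 'train' and 'test' subdirectories."""
  with os.scandir(dataset_dir) as entries:
    subdirs = {entry.name for entry in entries if entry.is_dir()}
  return {'train', 'test'} <= subdirs


# Usage on two temporary folders that mimic the split and unsplit layouts.
with tempfile.TemporaryDirectory() as root:
  split_dir = os.path.join(root, 'split')
  flat_dir = os.path.join(root, 'flat')
  os.makedirs(os.path.join(split_dir, 'train', 'downdog'))
  os.makedirs(os.path.join(split_dir, 'test', 'downdog'))
  os.makedirs(os.path.join(flat_dir, 'downdog'))
  os.makedirs(os.path.join(flat_dir, 'goddess'))
  split_result, flat_result = looks_split(split_dir), looks_split(flat_dir)

print(split_result, flat_result)  # True False
```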

If you want to train the pose classifier with your own labeled poses (they can be any poses, not just yoga poses), follow these steps:

1. Set the use_custom_dataset option above to True.

2. Prepare an archive file (ZIP, TAR, or other) that includes a folder with your image dataset. The folder must contain your images sorted into one subfolder per pose, as follows.

   If you've already split your dataset into train and test sets, set dataset_is_split to True. That is, your images folder must include "train" and "test" directories like this:

       yoga_poses/
       |__ train/
           |__ downdog/
               |______ 00000128.jpg
               |______ ...
       |__ test/
           |__ downdog/
               |______ 00000181.jpg
               |______ ...

   Or, if your dataset is NOT split yet, set dataset_is_split to False and we'll split it up based on a specified split fraction (test_split=0.2 in the code below). That is, your uploaded images folder should look like this:

       yoga_poses/
       |__ downdog/
           |______ 00000128.jpg
           |______ 00000181.jpg
           |______ ...
       |__ goddess/
           |______ 00000243.jpg
           |______ 00000306.jpg
           |______ ...

3. Click the Files tab on the left (folder icon) and then click Upload to session storage (file icon).

4. Select your archive file and wait until it finishes uploading before you proceed.

5. Edit the following code block to specify the name of your archive file and images directory. (By default, we expect a ZIP file, so you'll also need to modify that part if your archive is in another format.)

6. Now run the rest of the notebook.

import os
import random
import shutil

def split_into_train_test(images_origin, images_dest, test_split):
  """Splits a directory of sorted images into training and test sets.

  Args:
    images_origin: Path to the directory with your images. This directory
      must include subdirectories for each of your labeled classes. For example:
      yoga_poses/
      |__ downdog/
          |______ 00000128.jpg
          |______ 00000181.jpg
          |______ ...
      |__ goddess/
          |______ 00000243.jpg
          |______ 00000306.jpg
          |______ ...
      ...
    images_dest: Path to a directory where you want the split dataset to be
      saved. The result looks like this:
      split_yoga_poses/
      |__ train/
          |__ downdog/
              |______ 00000128.jpg
              |______ ...
      |__ test/
          |__ downdog/
              |______ 00000181.jpg
              |______ ...
    test_split: Fraction of data to reserve for test (float between 0 and 1).
  """
  _, dirs, _ = next(os.walk(images_origin))

  TRAIN_DIR = os.path.join(images_dest, 'train')
  TEST_DIR = os.path.join(images_dest, 'test')
  os.makedirs(TRAIN_DIR, exist_ok=True)
  os.makedirs(TEST_DIR, exist_ok=True)

  for dir in dirs:
    # Get all filenames for this dir, filtered by filetype
    filenames = os.listdir(os.path.join(images_origin, dir))
    filenames = [os.path.join(images_origin, dir, f) for f in filenames
                 if f.endswith(('.png', '.jpg', '.jpeg', '.bmp'))]
    # Shuffle the files, deterministically
    filenames.sort()
    random.seed(42)
    random.shuffle(filenames)
    # Divide them into train/test dirs
    os.makedirs(os.path.join(TEST_DIR, dir), exist_ok=True)
    os.makedirs(os.path.join(TRAIN_DIR, dir), exist_ok=True)
    test_count = int(len(filenames) * test_split)
    for i, file in enumerate(filenames):
      if i < test_count:
        destination = os.path.join(TEST_DIR, dir, os.path.split(file)[1])
      else:
        destination = os.path.join(TRAIN_DIR, dir, os.path.split(file)[1])
      # Files are copied, so the original folder is left untouched
      shutil.copyfile(file, destination)
    print(f'Copied {test_count} of {len(filenames)} from class "{dir}" into test.')
  print(f'Your split dataset is in "{images_dest}"')

if use_custom_dataset:
  # ATTENTION:
  # You must edit these two lines to match your archive and images folder name:
  # !tar -xf YOUR_DATASET_ARCHIVE_NAME.tar
  !unzip -q YOUR_DATASET_ARCHIVE_NAME.zip
  dataset_in = 'YOUR_DATASET_DIR_NAME'

  # You can leave the rest alone:
  if not os.path.isdir(dataset_in):
    raise Exception("dataset_in is not a valid directory")
  if dataset_is_split:
    IMAGES_ROOT = dataset_in
  else:
    dataset_out = 'split_' + dataset_in
    split_into_train_test(dataset_in, dataset_out, test_split=0.2)
    IMAGES_ROOT = dataset_out
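split_into_train_test() sorts each class's file names, shuffles them after random.seed(42), and sends the first int(len(filenames) * test_split) files to test/. With test_split=0.2, a class of 13 images therefore puts int(2.6) = 2 images in test/ and 11 in train/. The pure function below reproduces that partitioning without copying any files, which may help you check the counts before running the copy; split_filenames is a hypothetical name, and the file names in the usage example are made up.

```python
import random
from typing import List, Tuple

IMAGE_EXTENSIONS = ('.png', '.jpg', '.jpeg', '.bmp')


def split_filenames(filenames: List[str], test_split: float,
                    seed: int = 42) -> Tuple[List[str], List[str]]:
  """Returns (train, test) using the same filter/sort/seed/shuffle as above."""
  images = sorted(f for f in filenames if f.endswith(IMAGE_EXTENSIONS))
  # A dedicated Random(seed) instance produces the same shuffle as calling
  # random.seed(seed) followed by random.shuffle(...), without touching the
  # global random state.
  random.Random(seed).shuffle(images)
  test_count = int(len(images) * test_split)
  return images[test_count:], images[:test_count]


names = ['%05d.jpg' % i for i in range(13)] + ['notes.txt']
train, test = split_filenames(names, test_split=0.2)
print(len(train), len(test))  # 11 2
```

Sorting before shuffling is what makes the split reproducible: os.listdir returns entries in arbitrary order, so without the sort the same seed could still yield different splits on different machines.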

Download the yoga dataset

if not is_skip_step_1 and not use_custom_dataset:
  !wget -O yoga_poses.zip http://download.tensorflow.org/data/pose_classification/yoga_poses.zip
  !unzip -q yoga_poses.zip -d yoga_cg
  IMAGES_ROOT = "yoga_cg"

--2021-12-21 12:07:46-- http://download.tensorflow.org/data/pose_classification/yoga_poses.zip Resolving download.tensorflow.org (download.tensorflow.org)... 172.217.218.128, 2a00:1450:4013:c08::80 Connecting to download.tensorflow.org (download.tensorflow.org)|172.217.218.128|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 102517581 (98M) [application/zip] Saving to: ‘yoga_poses.zip’ yoga_poses.zip 100%[===================>] 97.77M 76.7MB/s in 1.3s 2021-12-21 12:07:48 (76.7 MB/s) - ‘yoga_poses.zip’ saved [102517581/102517581]
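The cells above extract archives with the !unzip shell command (or the commented-out !tar line). If you would rather stay in Python, for example for a TAR archive as mentioned in the upload steps, the standard library's shutil.unpack_archive picks the format from the file extension (.zip, .tar, .tar.gz and others). A minimal sketch, using a throwaway archive built on the spot rather than your dataset; the poses/downdog folder and file name are placeholders:

```python
import os
import shutil
import tempfile

with tempfile.TemporaryDirectory() as work:
  # Build a tiny stand-in dataset: <work>/poses/downdog/00000128.jpg
  src = os.path.join(work, 'poses', 'downdog')
  os.makedirs(src)
  open(os.path.join(src, '00000128.jpg'), 'wb').close()

  # Pack it as a ZIP, then unpack it elsewhere, like `!unzip -q ... -d DIR`.
  archive = shutil.make_archive(os.path.join(work, 'poses'), 'zip',
                                root_dir=work, base_dir='poses')
  extract_dir = os.path.join(work, 'extracted')
  shutil.unpack_archive(archive, extract_dir)
  extracted_files = os.listdir(os.path.join(extract_dir, 'poses', 'downdog'))

print(extracted_files)  # ['00000128.jpg']
```

For your own dataset, a call such as shutil.unpack_archive('YOUR_DATASET_ARCHIVE_NAME.tar') would replace the shell command, keeping the same placeholder name used in the cell above.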

Preprocess the TRAIN dataset

if not is_skip_step_1:
  images_in_train_folder = os.path.join(IMAGES_ROOT, 'train')
  images_out_train_folder = 'poses_images_out_train'
  csvs_out_train_path = 'train_data.csv'

  preprocessor = MoveNetPreprocessor(
      images_in_folder=images_in_train_folder,
      images_out_folder=images_out_train_folder,
      csvs_out_path=csvs_out_train_path,
  )

  preprocessor.process(per_pose_class_limit=None)

Preprocessing chair 0%| | 0/200 [00:00<?, ?it/s]/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:128: DeprecationWarning: `np.str` is a deprecated alias for the builtin `str`. To silence this warning, use `str` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.str_` here. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations 100%|██████████| 200/200 [00:32<00:00, 6.10it/s] Preprocessing cobra 100%|██████████| 200/200 [00:31<00:00, 6.27it/s] Preprocessing dog 100%|██████████| 200/200 [00:32<00:00, 6.15it/s] Preprocessing tree 100%|██████████| 200/200 [00:33<00:00, 6.00it/s] Preprocessing warrior 100%|██████████| 200/200 [00:30<00:00, 6.54it/s] Skipped yoga_cg/train/chair/girl3_chair091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair099.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair104.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair106.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair110.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair114.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair115.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair118.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/chair/girl3_chair122.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair123.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair124.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair125.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair131.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair132.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair133.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair136.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair138.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair139.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/girl3_chair142.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair136.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair144.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/chair/guy2_chair146.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra026.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra029.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra030.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra038.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra040.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/cobra/girl1_cobra041.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra048.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra050.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra055.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra059.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra061.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra070.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra081.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra099.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra102.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra110.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra112.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra115.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/cobra/girl1_cobra119.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra122.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra128.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra129.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra136.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl1_cobra140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra029.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra046.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra050.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra053.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra108.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra117.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra129.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra133.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra136.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl2_cobra140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra028.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra030.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra032.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra039.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra040.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra052.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra058.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/cobra/girl3_cobra062.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra072.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra076.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra078.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra079.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra082.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra099.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra107.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra129.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra130.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra132.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/girl3_cobra138.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra034.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra042.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra043.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra047.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra053.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra065.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra077.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/cobra/guy2_cobra078.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra080.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra081.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra084.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra102.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra105.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra108.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/cobra/guy2_cobra139.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl1_dog027.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl1_dog028.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl1_dog030.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl1_dog032.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog075.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog080.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog083.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog085.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog095.jpg. 
No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog099.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog101.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog103.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog104.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog105.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog107.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl2_dog111.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog025.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog026.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog027.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog028.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog031.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog033.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog035.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog037.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog040.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog041.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog047.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog052.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog062.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog072.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog074.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog075.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog077.jpg. 
No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog081.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog082.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog086.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog095.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog102.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog103.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog104.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog106.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog107.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/girl3_dog111.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/guy1_dog070.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/guy1_dog076.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/guy2_dog070.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/guy2_dog071.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/dog/guy2_dog082.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/girl2_tree119.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/girl2_tree122.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/girl2_tree161.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/girl2_tree163.jpg. 
No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy1_tree139.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy1_tree140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy1_tree141.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy1_tree143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree085.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree086.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/tree/guy2_tree147.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior049.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior053.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior064.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior066.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior067.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior072.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior075.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior077.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior080.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior083.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior084.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/girl1_warrior087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior095.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior098.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior099.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior103.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior108.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior109.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior111.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior112.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior113.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior114.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior116.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl1_warrior117.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior047.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior049.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior050.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior052.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior057.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior058.jpg. 
No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior063.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior083.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior085.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior102.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior106.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl2_warrior108.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior042.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior047.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior049.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior054.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior056.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior057.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior061.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior066.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/girl3_warrior067.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior074.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior075.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior087.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior089.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior090.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior095.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior096.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior100.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior103.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior107.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior115.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior117.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior140.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/girl3_warrior143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior048.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/guy1_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior052.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior055.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior057.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior062.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior069.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior076.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior077.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior080.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior081.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior082.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior091.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior092.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior093.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior094.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior097.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior118.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior120.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior121.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior124.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/train/warrior/guy1_warrior125.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior126.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior131.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior134.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior135.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior138.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior143.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy1_warrior148.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior086.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior111.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior118.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior122.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior129.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior131.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior135.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior137.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior139.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior145.jpg. No pose was confidentlly detected. Skipped yoga_cg/train/warrior/guy2_warrior148.jpg. No pose was confidentlly detected.

Preprocess the TEST dataset

if not is_skip_step_1:
  images_in_test_folder = os.path.join(IMAGES_ROOT, 'test')
  images_out_test_folder = 'poses_images_out_test'
  csvs_out_test_path = 'test_data.csv'

  preprocessor = MoveNetPreprocessor(
      images_in_folder=images_in_test_folder,
      images_out_folder=images_out_test_folder,
      csvs_out_path=csvs_out_test_path,
  )

  preprocessor.process(per_pose_class_limit=None)

Preprocessing chair 0%| | 0/84 [00:00<?, ?it/s]/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:128: DeprecationWarning: `np.str` is a deprecated alias for the builtin `str`. To silence this warning, use `str` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.str_` here. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations 100%|██████████| 84/84 [00:15<00:00, 5.51it/s] Preprocessing cobra 100%|██████████| 116/116 [00:19<00:00, 6.10it/s] Preprocessing dog 100%|██████████| 90/90 [00:14<00:00, 6.03it/s] Preprocessing tree 100%|██████████| 96/96 [00:16<00:00, 5.98it/s] Preprocessing warrior 100%|██████████| 109/109 [00:17<00:00, 6.38it/s] Skipped yoga_cg/test/cobra/guy3_cobra048.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra050.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra051.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra052.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra053.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra054.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra055.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra056.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra057.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra058.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra059.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra060.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra062.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra069.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra075.jpg. 
No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra077.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra081.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra124.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra131.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra132.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra134.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra135.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/cobra/guy3_cobra136.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog025.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog026.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog036.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog042.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog106.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/dog/guy3_dog108.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior042.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior043.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior044.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior045.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior046.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior047.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior048.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior050.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior051.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior052.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/test/warrior/guy3_warrior053.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior054.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior055.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior056.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior059.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior060.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior062.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior063.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior065.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior066.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior068.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior070.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior071.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior072.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior073.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior074.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior075.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior076.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior077.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior079.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior080.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior081.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior082.jpg. No pose was confidentlly detected. 
Skipped yoga_cg/test/warrior/guy3_warrior083.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior084.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior085.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior086.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior087.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior088.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior089.jpg. No pose was confidentlly detected. Skipped yoga_cg/test/warrior/guy3_warrior137.jpg. No pose was confidentlly detected.
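Many images are skipped with the message "No pose was confidently detected". The actual filtering rule lives in MoveNetPreprocessor, defined earlier in the notebook. Purely as an illustration, assuming the preprocessor drops an image when the lowest of the 17 keypoint scores falls below a threshold, such a filter could look like the sketch below. The helper name `should_keep` and the 0.1 threshold are hypothetical and may not match the real preprocessor.

```python
import numpy as np


def should_keep(keypoint_scores, detection_threshold=0.1):
    """Hypothetical filter: keep an image only if every keypoint score
    reaches the threshold. The rule and the 0.1 default are assumptions."""
    scores = np.asarray(keypoint_scores, dtype=float)
    return bool(scores.min() >= detection_threshold)


confident = np.full(17, 0.8)
uncertain = confident.copy()
uncertain[15] = 0.05  # e.g. one ankle barely visible

print(should_keep(confident), should_keep(uncertain))  # True False
```

A higher threshold gives cleaner landmark data but skips more images, which shrinks the training and test sets.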

Part 2: Train a pose classification model that takes the landmark coordinates as input and outputs the predicted labels.

You'll build a TensorFlow model that takes the landmark coordinates and predicts the pose class performed by the person in the input image. The model consists of two submodels:

- Submodel 1 calculates a pose embedding (a.k.a. feature vector) from the detected landmark coordinates.

- Submodel 2 feeds the pose embedding through several Dense layers to predict the pose class.
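To make submodel 2 concrete, here is a NumPy sketch of its forward pass at inference time. The layer sizes (a 34-value embedding, Dense layers of 128 and 64 units with relu6, and a 5-way softmax for the five yoga classes) are taken from the Keras model defined later in this section; the weights here are random stand-ins rather than trained values, and Dropout is omitted because it is inactive at inference.

```python
import numpy as np


def relu6(x):
    # Same formula as tf.nn.relu6: min(max(x, 0), 6)
    return np.minimum(np.maximum(x, 0.0), 6.0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
# Random stand-in weights; in the notebook these are learned by model.fit.
W1, b1 = rng.normal(size=(34, 128)) * 0.1, np.zeros(128)
W2, b2 = rng.normal(size=(128, 64)) * 0.1, np.zeros(64)
W3, b3 = rng.normal(size=(64, 5)) * 0.1, np.zeros(5)


def classify(embedding):
    """Submodel 2: Dense(128) -> Dense(64) -> softmax over 5 classes."""
    h = relu6(embedding @ W1 + b1)
    h = relu6(h @ W2 + b2)
    return softmax(h @ W3 + b3)


embeddings = rng.normal(size=(4, 34))  # 4 fake embeddings standing in for submodel 1
probs = classify(embeddings)
print(probs.shape)           # (4, 5)
print(probs.argmax(axis=1))  # predicted class index for each pose
```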

Then you'll train the model on the dataset that was preprocessed in Part 1.

(Optional) Download the preprocessed dataset if you didn't run Part 1

# Download the preprocessed CSV files which are the same as the output of step 1
if is_skip_step_1:
  !wget -O train_data.csv http://download.tensorflow.org/data/pose_classification/yoga_train_data.csv
  !wget -O test_data.csv http://download.tensorflow.org/data/pose_classification/yoga_test_data.csv

  csvs_out_train_path = 'train_data.csv'
  csvs_out_test_path = 'test_data.csv'
  is_skipped_step_1 = True

Load the preprocessed CSVs into the TRAIN and TEST datasets.

def load_pose_landmarks(csv_path):
  """Loads a CSV created by MoveNetPreprocessor.

  Returns:
    X: Detected landmark coordinates and scores of shape (N, 17 * 3)
    y: Ground truth labels of shape (N, label_count)
    classes: The list of all class names found in the dataset
    dataframe: The full CSV loaded as a Pandas dataframe

  The features (X) and ground truth labels (y) are used later to train a pose
  classification model.
  """
  # Load the CSV file
  dataframe = pd.read_csv(csv_path)
  df_to_process = dataframe.copy()

  # Drop the file_name column as you don't need it during training.
  df_to_process.drop(columns=['file_name'], inplace=True)

  # Extract the list of class names
  classes = df_to_process.pop('class_name').unique()

  # Extract the labels
  y = df_to_process.pop('class_no')

  # Convert the input features and labels into the correct format for training.
  X = df_to_process.astype('float64')
  y = keras.utils.to_categorical(y)

  return X, y, classes, dataframe
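The steps of `load_pose_landmarks` can be tried on a tiny synthetic dataframe. This sketch assumes the CSV layout implied by the function (a `file_name` column, 51 landmark columns holding x, y and score for 17 keypoints, plus `class_no` and `class_name`); the landmark column names are placeholders, and `keras.utils.to_categorical` is replaced by its NumPy equivalent so the example runs without TensorFlow.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the preprocessed CSV (placeholder column names).
rng = np.random.default_rng(0)
landmark_cols = [f"kp{i}_{c}" for i in range(17) for c in ("x", "y", "score")]
df = pd.DataFrame(rng.random((4, 51)), columns=landmark_cols)
df.insert(0, "file_name", ["a.jpg", "b.jpg", "c.jpg", "d.jpg"])
df["class_no"] = [0, 1, 1, 2]
df["class_name"] = ["chair", "cobra", "cobra", "dog"]

features = df.drop(columns=["file_name"])
classes = features.pop("class_name").unique()  # order of first appearance
class_no = features.pop("class_no").to_numpy()
X = features.astype("float64")

# NumPy equivalent of keras.utils.to_categorical(class_no) without num_classes:
# one column per class index from 0 to class_no.max().
y = np.eye(class_no.max() + 1)[class_no]

print(X.shape)  # (4, 51)
print(y.shape)  # (4, 3)
```

Because `unique()` returns names in order of first appearance, `classes[i]` matches class index `i` only when the rows are grouped in ascending `class_no` order.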

Load and split the original TRAIN dataset into TRAIN (85% of the data) and VALIDATE (the remaining 15%).

# Load the train data
X, y, class_names, _ = load_pose_landmarks(csvs_out_train_path)

# Split training data (X, y) into (X_train, y_train) and (X_val, y_val)
X_train, X_val, y_train, y_val = train_test_split(X, y,
                                                  test_size=0.15)

# Load the test data
X_test, y_test, _, df_test = load_pose_landmarks(csvs_out_test_path)
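`train_test_split` shuffles the rows before splitting, and since no `random_state` is passed, each run produces a different split. The NumPy sketch below shows what `test_size=0.15` means in terms of sizes, mirroring scikit-learn's rounding for a float `test_size` (the held-out count is rounded up). The helpers `split_sizes` and `shuffle_split` are illustrative, not part of the notebook.

```python
import math

import numpy as np


def split_sizes(n_samples, test_size=0.15):
    """Train/validation sizes for a float test_size, rounding the validation count up."""
    n_val = math.ceil(test_size * n_samples)
    return n_samples - n_val, n_val


def shuffle_split(X, y, test_size=0.15, seed=0):
    # Shuffle the row indices once, then cut them into train and validation parts.
    n_train, _ = split_sizes(len(X), test_size)
    idx = np.random.default_rng(seed).permutation(len(X))
    train_idx, val_idx = idx[:n_train], idx[n_train:]
    return X[train_idx], X[val_idx], y[train_idx], y[val_idx]


X_demo = np.arange(20 * 51, dtype=float).reshape(20, 51)
y_demo = np.eye(5)[np.arange(20) % 5]
X_tr, X_va, y_tr, y_va = shuffle_split(X_demo, y_demo)
print(X_tr.shape, X_va.shape)  # (17, 51) (3, 51)
```

Passing a fixed `random_state` to `train_test_split` would make the notebook's split reproducible across runs.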

Define functions to convert the pose landmarks to a pose embedding (a.k.a. feature vector) for pose classification

Next, convert the landmark coordinates to a feature vector by:

- Moving the pose center to the origin.

- Scaling the pose so that the pose size becomes 1.

- Flattening these coordinates into a feature vector.

Then use this feature vector to train a neural-network-based pose classifier.
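The three steps can be sketched for a single pose in NumPy. This is a simplified per-pose version, not the notebook's implementation: the TensorFlow functions that follow operate on whole batches inside the Keras graph, so their numbers are not guaranteed to match this sketch exactly. The keypoint indices assume MoveNet's COCO keypoint order (left/right shoulder = 5/6, left/right hip = 11/12).

```python
import numpy as np

# MoveNet keypoint indices (COCO order) used below.
LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_HIP, RIGHT_HIP = 5, 6, 11, 12


def pose_embedding(landmarks, torso_size_multiplier=2.5):
    """Normalizes one pose given as a (17, 2) array and flattens it to 34 values."""
    landmarks = np.asarray(landmarks, dtype=float)
    # 1. Move the pose center (midpoint of the hips) to the origin.
    hips_center = (landmarks[LEFT_HIP] + landmarks[RIGHT_HIP]) / 2
    centered = landmarks - hips_center
    # 2. Scale so the pose size becomes 1. The pose size is the larger of the
    #    torso length times the multiplier and the farthest landmark distance.
    shoulders_center = (centered[LEFT_SHOULDER] + centered[RIGHT_SHOULDER]) / 2
    torso_size = np.linalg.norm(shoulders_center)  # hips center is now (0, 0)
    max_dist = np.linalg.norm(centered, axis=1).max()
    pose_size = max(torso_size * torso_size_multiplier, max_dist)
    # 3. Flatten the (17, 2) coordinates into a 34-value feature vector.
    return (centered / pose_size).reshape(-1)


pose = np.zeros((17, 2))
pose[LEFT_HIP], pose[RIGHT_HIP] = (-1, 0), (1, 0)
pose[LEFT_SHOULDER], pose[RIGHT_SHOULDER] = (-1, 4), (1, 4)
pose[0] = (0, 12)  # nose: 12 units from the hips center, more than 2.5 * torso (10)
emb = pose_embedding(pose)
print(emb.shape)  # (34,)
print(emb[0:2])   # [0. 1.]
```

Because both the center and the size come from the pose itself, shifting or uniformly scaling the input leaves the embedding unchanged, which is what lets the classifier ignore where the person stands in the frame and how large they appear.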

def get_center_point(landmarks, left_bodypart, right_bodypart):
  """Calculates the center point of the two given landmarks."""

  left = tf.gather(landmarks, left_bodypart.value, axis=1)
  right = tf.gather(landmarks, right_bodypart.value, axis=1)
  center = left * 0.5 + right * 0.5
  return center

def get_pose_size(landmarks, torso_size_multiplier=2.5):
  """Calculates pose size.

  It is the maximum of two values:
    * Torso size multiplied by `torso_size_multiplier`
    * Maximum distance from pose center to any pose landmark
  """
  # Hips center
  hips_center = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                 BodyPart.RIGHT_HIP)

  # Shoulders center
  shoulders_center = get_center_point(landmarks, BodyPart.LEFT_SHOULDER,
                                      BodyPart.RIGHT_SHOULDER)

  # Torso size as the minimum body size
  torso_size = tf.linalg.norm(shoulders_center - hips_center)

  # Pose center
  pose_center_new = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                     BodyPart.RIGHT_HIP)
  pose_center_new = tf.expand_dims(pose_center_new, axis=1)
  # Broadcast the pose center to the same size as the landmark vector to
  # perform subtraction
  pose_center_new = tf.broadcast_to(pose_center_new,
                                    [tf.size(landmarks) // (17*2), 17, 2])

  # Dist to pose center (index 0 on axis 0 selects the first pose in the batch)
  d = tf.gather(landmarks - pose_center_new, 0, axis=0,
                name="dist_to_pose_center")
  # Max dist to pose center
  max_dist = tf.reduce_max(tf.linalg.norm(d, axis=0))

  # Normalize scale
  pose_size = tf.maximum(torso_size * torso_size_multiplier, max_dist)

  return pose_size

def normalize_pose_landmarks(landmarks):
  """Normalizes the landmarks translation by moving the pose center to (0,0) and
  scaling it to a constant pose size.
  """
  # Move landmarks so that the pose center becomes (0,0)
  pose_center = get_center_point(landmarks, BodyPart.LEFT_HIP,
                                 BodyPart.RIGHT_HIP)
  pose_center = tf.expand_dims(pose_center, axis=1)
  # Broadcast the pose center to the same size as the landmark vector to perform
  # subtraction
  pose_center = tf.broadcast_to(pose_center,
                                [tf.size(landmarks) // (17*2), 17, 2])
  landmarks = landmarks - pose_center

  # Scale the landmarks to a constant pose size
  pose_size = get_pose_size(landmarks)
  landmarks /= pose_size

  return landmarks

def landmarks_to_embedding(landmarks_and_scores):
  """Converts the input landmarks into a pose embedding."""
  # Reshape the flat input into a matrix with shape=(17, 3)
  reshaped_inputs = keras.layers.Reshape((17, 3))(landmarks_and_scores)

  # Normalize landmarks 2D
  landmarks = normalize_pose_landmarks(reshaped_inputs[:, :, :2])

  # Flatten the normalized landmark coordinates into a vector
  embedding = keras.layers.Flatten()(landmarks)

  return embedding
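The shape changes inside `landmarks_to_embedding` can be traced with NumPy (normalization omitted). Each flat 51-value row is read as 17 triples, the third value of each triple (the confidence score) is dropped, and the remaining 17 x 2 coordinates are flattened, which is why the embedding has 34 values rather than 51.

```python
import numpy as np

batch = np.random.default_rng(0).random((8, 51))  # 8 flat rows of 17 * (x, y, score)

reshaped = batch.reshape(-1, 17, 3)      # like keras.layers.Reshape((17, 3))
coords = reshaped[:, :, :2]              # keep the 2 coordinates, drop the score
flat = coords.reshape(len(coords), -1)   # like keras.layers.Flatten()

print(reshaped.shape, coords.shape, flat.shape)  # (8, 17, 3) (8, 17, 2) (8, 34)
```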

Define a Keras model for pose classification

Our Keras model takes the detected pose landmarks, then calculates the pose embedding and predicts the pose class.

# Define the model
inputs = tf.keras.Input(shape=(51,))
embedding = landmarks_to_embedding(inputs)

layer = keras.layers.Dense(128, activation=tf.nn.relu6)(embedding)
layer = keras.layers.Dropout(0.5)(layer)
layer = keras.layers.Dense(64, activation=tf.nn.relu6)(layer)
layer = keras.layers.Dropout(0.5)(layer)
outputs = keras.layers.Dense(len(class_names), activation="softmax")(layer)

model = keras.Model(inputs, outputs)
model.summary()

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 51)] 0 []

reshape (Reshape) (None, 17, 3) 0 ['input_1[0][0]']

tf.__operators__.getitem (Slic (None, 17, 2) 0 ['reshape[0][0]']

ingOpLambda)

tf.compat.v1.gather (TFOpLambd (None, 2) 0 ['tf.__operators__.getitem[0][0]'

a) ]

tf.compat.v1.gather_1 (TFOpLam (None, 2) 0 ['tf.__operators__.getitem[0][0]'

bda) ]

tf.math.multiply (TFOpLambda) (None, 2) 0 ['tf.compat.v1.gather[0][0]']

tf.math.multiply_1 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_1[0][0]']

)

tf.__operators__.add (TFOpLamb (None, 2) 0 ['tf.math.multiply[0][0]',

da) 'tf.math.multiply_1[0][0]']

tf.compat.v1.size (TFOpLambda) () 0 ['tf.__operators__.getitem[0][0]'

]

tf.expand_dims (TFOpLambda) (None, 1, 2) 0 ['tf.__operators__.add[0][0]']

tf.compat.v1.floor_div (TFOpLa () 0 ['tf.compat.v1.size[0][0]']

mbda)

tf.broadcast_to (TFOpLambda) (None, 17, 2) 0 ['tf.expand_dims[0][0]',

'tf.compat.v1.floor_div[0][0]']

tf.math.subtract (TFOpLambda) (None, 17, 2) 0 ['tf.__operators__.getitem[0][0]'

, 'tf.broadcast_to[0][0]']

tf.compat.v1.gather_6 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.compat.v1.gather_7 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.math.multiply_6 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_6[0][0]']

)

tf.math.multiply_7 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_7[0][0]']

)

tf.__operators__.add_3 (TFOpLa (None, 2) 0 ['tf.math.multiply_6[0][0]',

mbda) 'tf.math.multiply_7[0][0]']

tf.compat.v1.size_1 (TFOpLambd () 0 ['tf.math.subtract[0][0]']

a)

tf.compat.v1.gather_4 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.compat.v1.gather_5 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.compat.v1.gather_2 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.compat.v1.gather_3 (TFOpLam (None, 2) 0 ['tf.math.subtract[0][0]']

bda)

tf.expand_dims_1 (TFOpLambda) (None, 1, 2) 0 ['tf.__operators__.add_3[0][0]']

tf.compat.v1.floor_div_1 (TFOp () 0 ['tf.compat.v1.size_1[0][0]']

Lambda)

tf.math.multiply_4 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_4[0][0]']

)

tf.math.multiply_5 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_5[0][0]']

)

tf.math.multiply_2 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_2[0][0]']

)

tf.math.multiply_3 (TFOpLambda (None, 2) 0 ['tf.compat.v1.gather_3[0][0]']

)

tf.broadcast_to_1 (TFOpLambda) (None, 17, 2) 0 ['tf.expand_dims_1[0][0]',

'tf.compat.v1.floor_div_1[0][0]'

]

tf.__operators__.add_2 (TFOpLa (None, 2) 0 ['tf.math.multiply_4[0][0]',

mbda) 'tf.math.multiply_5[0][0]']

tf.__operators__.add_1 (TFOpLa (None, 2) 0 ['tf.math.multiply_2[0][0]',

mbda) 'tf.math.multiply_3[0][0]']

tf.math.subtract_2 (TFOpLambda (None, 17, 2) 0 ['tf.math.subtract[0][0]',

) 'tf.broadcast_to_1[0][0]']

tf.math.subtract_1 (TFOpLambda (None, 2) 0 ['tf.__operators__.add_2[0][0]',

) 'tf.__operators__.add_1[0][0]']

tf.compat.v1.gather_8 (TFOpLam (17, 2) 0 ['tf.math.subtract_2[0][0]']

bda)

tf.compat.v1.norm (TFOpLambda) () 0 ['tf.math.subtract_1[0][0]']

tf.compat.v1.norm_1 (TFOpLambd (2,) 0 ['tf.compat.v1.gather_8[0][0]']

a)

tf.math.multiply_8 (TFOpLambda () 0 ['tf.compat.v1.norm[0][0]']

)

tf.math.reduce_max (TFOpLambda () 0 ['tf.compat.v1.norm_1[0][0]']

)

tf.math.maximum (TFOpLambda) () 0 ['tf.math.multiply_8[0][0]',

'tf.math.reduce_max[0][0]']

tf.math.truediv (TFOpLambda) (None, 17, 2) 0 ['tf.math.subtract[0][0]',

'tf.math.maximum[0][0]']

flatten (Flatten) (None, 34) 0 ['tf.math.truediv[0][0]']

dense (Dense) (None, 128) 4480 ['flatten[0][0]']

dropout (Dropout) (None, 128) 0 ['dense[0][0]']

dense_1 (Dense) (None, 64) 8256 ['dropout[0][0]']

dropout_1 (Dropout) (None, 64) 0 ['dense_1[0][0]']

dense_2 (Dense) (None, 5) 325 ['dropout_1[0][0]']

==================================================================================================

Total params: 13,061

Trainable params: 13,061

Non-trainable params: 0

__________________________________________________________________________________________________
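All 13,061 parameters in the summary belong to the three Dense layers; Reshape, the TFOpLambda normalization ops, Flatten and Dropout have none. A Dense layer has an `n_in x n_out` weight matrix plus `n_out` biases, so the counts can be checked by hand:

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


layers = [("dense", 34, 128), ("dense_1", 128, 64), ("dense_2", 64, 5)]
counts = {name: dense_params(n_in, n_out) for name, n_in, n_out in layers}

print(counts)                # {'dense': 4480, 'dense_1': 8256, 'dense_2': 325}
print(sum(counts.values()))  # 13061
```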

model.compile(
    optimizer='adam',
    loss='categorical_crossentropy',
    metrics=['accuracy']
)
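The training cell that follows keeps only the weights with the highest `val_accuracy` (`ModelCheckpoint` with `save_best_only=True`) and stops once `val_accuracy` has not improved for 20 consecutive epochs (`EarlyStopping` with `patience=20`). The pure-Python sketch below is a simplified model of that bookkeeping (it ignores options such as `min_delta` and `baseline`), fed with the first 19 `val_accuracy` values from this notebook's training log, rounded to four decimals. It reproduces the epochs where the log reports "improved ... saving model"; note that a tie, as in epochs 8, 15 and 19, does not count as an improvement.

```python
def simulate_callbacks(val_accuracies, patience):
    """Simplified model of ModelCheckpoint(save_best_only=True, mode='max')
    combined with EarlyStopping(monitor='val_accuracy', min_delta=0)."""
    best = float("-inf")
    wait = 0
    saved_epochs = []
    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best:  # strict improvement only; ties do not count
            best = acc
            wait = 0
            saved_epochs.append(epoch)  # checkpoint file is overwritten here
        else:
            wait += 1
            if wait >= patience:
                return saved_epochs, epoch  # training stops after this epoch
    return saved_epochs, None  # early stopping never triggered


# val_accuracy for epochs 1-19, copied from the training log.
val_acc = [0.6471, 0.6765, 0.7549, 0.8137, 0.8235, 0.9216, 0.8922, 0.9216,
           0.9314, 0.9804, 0.9706, 0.9902, 0.9804, 0.9804, 0.9902, 0.9804,
           0.9804, 0.9804, 0.9902]

print(simulate_callbacks(val_acc, patience=20))  # ([1, 2, 3, 4, 5, 6, 9, 10, 12], None)
print(simulate_callbacks(val_acc, patience=5))   # ([1, 2, 3, 4, 5, 6, 9, 10, 12], 17)
```

With `patience=20`, training continues past epoch 19 because the last improvement was at epoch 12; a smaller patience such as 5 would have stopped it at epoch 17.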

# Add a checkpoint callback to store the checkpoint that has the highest
# validation accuracy.
checkpoint_path = "weights.best.hdf5"
checkpoint = keras.callbacks.ModelCheckpoint(checkpoint_path,
                                             monitor='val_accuracy',
                                             verbose=1,
                                             save_best_only=True,
                                             mode='max')
earlystopping = keras.callbacks.EarlyStopping(monitor='val_accuracy',
                                              patience=20)

# Start training
history = model.fit(X_train, y_train,
                    epochs=200,
                    batch_size=16,
                    validation_data=(X_val, y_val),
                    callbacks=[checkpoint, earlystopping])

```
Epoch 1/200
Epoch 00001: val_accuracy improved from -inf to 0.64706, saving model to weights.best.hdf5
37/37 [==============================] - 1s 11ms/step - loss: 1.5090 - accuracy: 0.4602 - val_loss: 1.3352 - val_accuracy: 0.6471
Epoch 2/200
Epoch 00002: val_accuracy improved from 0.64706 to 0.67647, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 1.2375 - accuracy: 0.5190 - val_loss: 1.0193 - val_accuracy: 0.6765
Epoch 3/200
Epoch 00003: val_accuracy improved from 0.67647 to 0.75490, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 1.0096 - accuracy: 0.5796 - val_loss: 0.8397 - val_accuracy: 0.7549
Epoch 4/200
Epoch 00004: val_accuracy improved from 0.75490 to 0.81373, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 0.8798 - accuracy: 0.6349 - val_loss: 0.7103 - val_accuracy: 0.8137
Epoch 5/200
Epoch 00005: val_accuracy improved from 0.81373 to 0.82353, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 0.7810 - accuracy: 0.6903 - val_loss: 0.6120 - val_accuracy: 0.8235
Epoch 6/200
Epoch 00006: val_accuracy improved from 0.82353 to 0.92157, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 0.7263 - accuracy: 0.7093 - val_loss: 0.5297 - val_accuracy: 0.9216
Epoch 7/200
Epoch 00007: val_accuracy did not improve from 0.92157
37/37 [==============================] - 0s 4ms/step - loss: 0.6450 - accuracy: 0.7595 - val_loss: 0.4635 - val_accuracy: 0.8922
Epoch 8/200
Epoch 00008: val_accuracy did not improve from 0.92157
37/37 [==============================] - 0s 4ms/step - loss: 0.5751 - accuracy: 0.7837 - val_loss: 0.4012 - val_accuracy: 0.9216
Epoch 9/200
Epoch 00009: val_accuracy improved from 0.92157 to 0.93137, saving model to weights.best.hdf5
37/37 [==============================] - 0s 4ms/step - loss: 0.5272 - accuracy: 0.8097 - val_loss: 0.3547 - val_accuracy: 0.9314
Epoch 10/200
Epoch 00010: val_accuracy improved from 0.93137 to 0.98039, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.5051 - accuracy: 0.8218 - val_loss: 0.3014 - val_accuracy: 0.9804
Epoch 11/200
Epoch 00011: val_accuracy did not improve from 0.98039
37/37 [==============================] - 0s 4ms/step - loss: 0.4509 - accuracy: 0.8374 - val_loss: 0.2786 - val_accuracy: 0.9706
Epoch 12/200
Epoch 00012: val_accuracy improved from 0.98039 to 0.99020, saving model to weights.best.hdf5
37/37 [==============================] - 0s 5ms/step - loss: 0.4377 - accuracy: 0.8253 - val_loss: 0.2440 - val_accuracy: 0.9902
Epoch 13/200
Epoch 00013: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.4187 - accuracy: 0.8668 - val_loss: 0.2109 - val_accuracy: 0.9804
Epoch 14/200
Epoch 00014: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.3733 - accuracy: 0.8772 - val_loss: 0.2030 - val_accuracy: 0.9804
Epoch 15/200
Epoch 00015: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.3684 - accuracy: 0.8754 - val_loss: 0.1765 - val_accuracy: 0.9902
Epoch 16/200
Epoch 00016: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.3213 - accuracy: 0.9100 - val_loss: 0.1662 - val_accuracy: 0.9804
Epoch 17/200
Epoch 00017: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.3015 - accuracy: 0.9100 - val_loss: 0.1423 - val_accuracy: 0.9804
Epoch 18/200
Epoch 00018: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.3022 - accuracy: 0.9048 - val_loss: 0.1407 - val_accuracy: 0.9804
Epoch 19/200
Epoch 00019: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2697 - accuracy: 0.9291 - val_loss: 0.1191 - val_accuracy: 0.9902
Epoch 20/200
Epoch 00020: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2775 - accuracy: 0.9100 - val_loss: 0.1120 - val_accuracy: 0.9902
Epoch 21/200
Epoch 00021: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2537 - accuracy: 0.9273 - val_loss: 0.1022 - val_accuracy: 0.9902
Epoch 22/200
Epoch 00022: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2661 - accuracy: 0.9204 - val_loss: 0.0976 - val_accuracy: 0.9902
Epoch 23/200
Epoch 00023: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2182 - accuracy: 0.9308 - val_loss: 0.0944 - val_accuracy: 0.9902
Epoch 24/200
Epoch 00024: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2031 - accuracy: 0.9412 - val_loss: 0.0844 - val_accuracy: 0.9902
Epoch 25/200
Epoch 00025: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2080 - accuracy: 0.9343 - val_loss: 0.0811 - val_accuracy: 0.9902
Epoch 26/200
Epoch 00026: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2033 - accuracy: 0.9394 - val_loss: 0.0703 - val_accuracy: 0.9902
Epoch 27/200
Epoch 00027: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.1845 - accuracy: 0.9481 - val_loss: 0.0708 - val_accuracy: 0.9902
Epoch 28/200
Epoch 00028: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.2355 - accuracy: 0.9273 - val_loss: 0.0679 - val_accuracy: 0.9902
Epoch 29/200
Epoch 00029: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.1938 - accuracy: 0.9498 - val_loss: 0.0623 - val_accuracy: 0.9902
Epoch 30/200
Epoch 00030: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.1758 - accuracy: 0.9498 - val_loss: 0.0599 - val_accuracy: 0.9902
Epoch 31/200
Epoch 00031: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.1724 - accuracy: 0.9516 - val_loss: 0.0565 - val_accuracy: 0.9902
Epoch 32/200
Epoch 00032: val_accuracy did not improve from 0.99020
37/37 [==============================] - 0s 4ms/step - loss: 0.1676 - accuracy: 0.9360 - val_loss: 0.0503 - val_accuracy: 0.9902
```
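The log shows the checkpoint callback saving weights only when `val_accuracy` strictly improves (the last save is at epoch 12) and training ending at epoch 32, long before the 200-epoch limit. The sketch below replays that decision logic on a plain list of per-epoch validation accuracies. It assumes the `earlystopping` callback (defined outside this section) monitors `val_accuracy` with `patience=20` and the default `min_delta=0`; 20 is the patience consistent with stopping exactly 20 epochs after the last improvement. `replay_early_stopping` is a hypothetical helper for illustration, not a Keras API.

```python
from typing import List, Tuple


def replay_early_stopping(val_acc: List[float], patience: int) -> Tuple[int, int]:
    """Replay checkpoint/early-stopping decisions on per-epoch val_accuracy values.

    Returns (best_epoch, stop_epoch), both 1-based like the Keras log.
    Assumes an improvement means strictly greater (min_delta=0, mode 'max').
    """
    best = float("-inf")
    best_epoch = 0
    wait = 0
    for epoch, acc in enumerate(val_acc, start=1):
        wait += 1
        if acc > best:  # strict improvement: this is where the checkpoint is saved
            best, best_epoch = acc, epoch
            wait = 0
        if wait >= patience:  # `patience` epochs in a row without improvement
            return best_epoch, epoch
    return best_epoch, len(val_acc)  # ran out of epochs without stopping early


# val_accuracy values copied from the training log above (epochs 1-32).
val_accuracy = [
    0.6471, 0.6765, 0.7549, 0.8137, 0.8235, 0.9216, 0.8922, 0.9216,
    0.9314, 0.9804, 0.9706, 0.9902, 0.9804, 0.9804, 0.9902, 0.9804,
    0.9804, 0.9804, 0.9902, 0.9902, 0.9902, 0.9902, 0.9902, 0.9902,
    0.9902, 0.9902, 0.9902, 0.9902, 0.9902, 0.9902, 0.9902, 0.9902,
]

print(replay_early_stopping(val_accuracy, patience=20))  # (12, 32)
```

Because later epochs only tie the best value (0.99020) and never exceed it, the weights saved to `weights.best.hdf5` are the ones from epoch 12.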

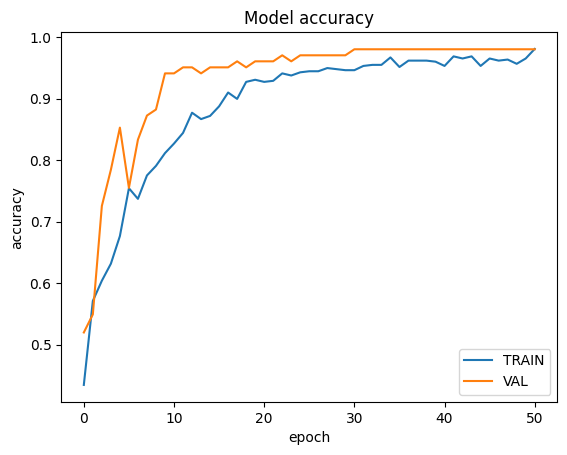

```python
# Visualize the training history to see whether you're overfitting.
plt.plot(history.history['accuracy'])
plt.plot(history.history['val_accuracy'])
plt.title('Model accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['TRAIN', 'VAL'], loc='lower right')
plt.show()
```
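Besides reading the plot, you can scan the training history numerically. One common heuristic is to look for epochs where validation loss rises while training loss keeps falling. The helper below is a hypothetical sketch of that heuristic, not a definitive overfitting test; it only assumes `history.history` is a dict of per-epoch lists with `'loss'` and `'val_loss'` keys, which Keras records when `validation_data` is passed to `fit`.

```python
from typing import Dict, List


def divergent_epochs(history: Dict[str, List[float]]) -> List[int]:
    """Return 1-based epochs where val_loss went up while training loss went down.

    A heuristic overfitting signal only; a single small uptick can be noise.
    """
    loss, val_loss = history["loss"], history["val_loss"]
    flagged = []
    for i in range(1, len(loss)):
        if val_loss[i] > val_loss[i - 1] and loss[i] < loss[i - 1]:
            flagged.append(i + 1)  # convert 0-based index to 1-based epoch
    return flagged


# Toy history with hypothetical numbers: validation loss turns up at epoch 4.
toy_history = {
    "loss": [1.0, 0.8, 0.6, 0.5, 0.4],
    "val_loss": [1.1, 0.9, 0.8, 0.85, 0.9],
}
print(divergent_epochs(toy_history))  # [4, 5]
```

Applied to the values in the training log above, this would flag only epoch 27, where `val_loss` ticks up from 0.0703 to 0.0708 while training loss drops from 0.2033 to 0.1845; one tiny uptick like that is not by itself evidence of overfitting.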

```python
# Evaluate the model using the TEST dataset
loss, accuracy = model.evaluate(X_test, y_test)
```

```
14/14 [==============================] - 0s 2ms/step - loss: 0.0612 - accuracy: 0.9976
```
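Assuming the model was compiled with `categorical_crossentropy` and `metrics=['accuracy']` (the compile call is outside this section), Keras reports categorical accuracy here: the fraction of test rows whose highest-scoring class matches the one-hot label. With 425 test samples (the support total in the classification report), 0.9976 corresponds to 424 correct predictions. The sketch below restates that metric in plain Python; the helper names are illustrative, not Keras APIs.

```python
from typing import Sequence


def argmax(row: Sequence[float]) -> int:
    """Index of the largest value (first one on ties), like np.argmax."""
    return max(range(len(row)), key=lambda k: row[k])


def categorical_accuracy(y_true: Sequence[Sequence[float]],
                         y_pred: Sequence[Sequence[float]]) -> float:
    """Fraction of rows where argmax(prediction) equals argmax(one-hot label)."""
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Hypothetical one-hot labels and softmax outputs: the last row is misclassified.
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
y_pred = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.3, 0.3, 0.4], [0.6, 0.3, 0.1]]
print(categorical_accuracy(y_true, y_pred))  # 0.75
print(round(424 / 425, 4))  # 0.9976
```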

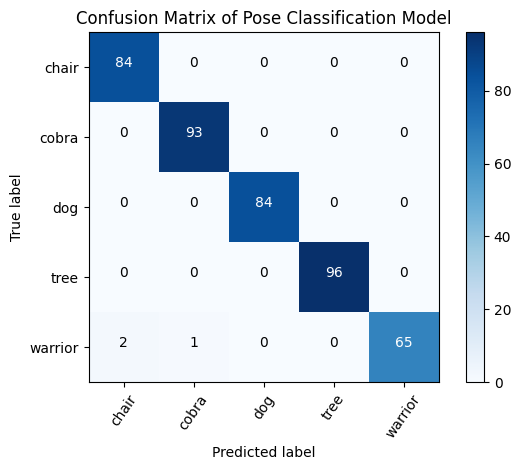

Plot the confusion matrix to better understand the model's performance.

```python
def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
  """Plots the confusion matrix."""
  if normalize:
    # Divide each row by its total so each cell is a per-class fraction.
    cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
    print("Normalized confusion matrix")
  else:
    print('Confusion matrix, without normalization')

  plt.imshow(cm, interpolation='nearest', cmap=cmap)
  plt.title(title)
  plt.colorbar()
  tick_marks = np.arange(len(classes))
  plt.xticks(tick_marks, classes, rotation=55)
  plt.yticks(tick_marks, classes)
  fmt = '.2f' if normalize else 'd'
  # Write each cell's value, in white on dark cells and black on light ones.
  thresh = cm.max() / 2.
  for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
    plt.text(j, i, format(cm[i, j], fmt),
             horizontalalignment="center",
             color="white" if cm[i, j] > thresh else "black")

  plt.ylabel('True label')
  plt.xlabel('Predicted label')
  plt.tight_layout()
```
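With `normalize=True`, `plot_confusion_matrix` divides each row by its total, so cell (i, j) becomes the fraction of true class i that was predicted as class j, and the diagonal holds per-class recall. The sketch below performs the same row normalization in plain Python on a hypothetical 3×3 matrix; `normalize_rows` is an illustrative name, not part of the notebook.

```python
from typing import List


def normalize_rows(cm: List[List[int]]) -> List[List[float]]:
    """Divide each row of a confusion matrix by its row sum (the true-class count).

    Mirrors cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]. Unlike NumPy,
    which yields nan for an all-zero row, this version raises ZeroDivisionError.
    """
    return [[count / sum(row) for count in row] for row in cm]


# Hypothetical matrix: rows are true labels, columns are predicted labels.
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 5],
]
normalized = normalize_rows(cm)
print([round(normalized[i][i], 2) for i in range(len(cm))])  # [0.8, 0.9, 1.0]
```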

```python
# Classify pose in the TEST dataset using the trained model
y_pred = model.predict(X_test)

# Convert the prediction result to class name
y_pred_label = [class_names[i] for i in np.argmax(y_pred, axis=1)]
y_true_label = [class_names[i] for i in np.argmax(y_test, axis=1)]

# Plot the confusion matrix
cm = confusion_matrix(np.argmax(y_test, axis=1), np.argmax(y_pred, axis=1))
plot_confusion_matrix(cm,
                      class_names,
                      title='Confusion Matrix of Pose Classification Model')

# Print the classification report
print('\nClassification Report:\n', classification_report(y_true_label,
                                                          y_pred_label))
```

```
Confusion matrix, without normalization

Classification Report:
               precision    recall  f1-score   support

       chair       1.00      1.00      1.00        84
       cobra       0.99      1.00      0.99        93
         dog       1.00      1.00      1.00        84
        tree       1.00      1.00      1.00        96
     warrior       1.00      0.99      0.99        68

    accuracy                           1.00       425
   macro avg       1.00      1.00      1.00       425
weighted avg       1.00      1.00      1.00       425
```
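Each row of the classification report can be derived from the confusion matrix: a class's precision is its diagonal count divided by its column total (everything predicted as that class), recall is the diagonal count divided by its row total (the support), and F1 is their harmonic mean. `macro avg` is the unweighted mean over classes, while `weighted avg` weights each class by its support. The sketch below reproduces those formulas in plain Python; it is not scikit-learn's implementation, which also handles empty rows and columns through its `zero_division` parameter. The example matrix is reconstructed from the report above: 425 samples at 0.9976 accuracy means exactly one error, and the only scores below 1.00 (cobra precision, warrior recall) imply it is a warrior sample predicted as cobra.

```python
from typing import Dict, List


def report_from_cm(cm: List[List[int]]) -> Dict[str, float]:
    """Macro and support-weighted precision/recall/F1 from a confusion matrix.

    Rows are true classes and columns are predicted classes, the layout returned
    by sklearn.metrics.confusion_matrix. Assumes all row and column sums are > 0.
    """
    n = len(cm)
    support = [sum(cm[i]) for i in range(n)]                        # row totals
    predicted = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # column totals
    precision = [cm[k][k] / predicted[k] for k in range(n)]
    recall = [cm[k][k] / support[k] for k in range(n)]
    f1 = [2 * p * r / (p + r) if p + r else 0.0 for p, r in zip(precision, recall)]
    total = sum(support)
    result = {}
    for name, values in (("precision", precision), ("recall", recall), ("f1", f1)):
        result["macro_" + name] = sum(values) / n
        result["weighted_" + name] = sum(v * s for v, s in zip(values, support)) / total
    result["accuracy"] = sum(cm[k][k] for k in range(n)) / total
    return result


# Class order as in the report: chair, cobra, dog, tree, warrior.
cm = [
    [84, 0, 0, 0, 0],
    [0, 93, 0, 0, 0],
    [0, 0, 84, 0, 0],
    [0, 0, 0, 96, 0],
    [0, 1, 0, 0, 67],  # one warrior sample predicted as cobra
]
report = report_from_cm(cm)
print({k: round(v, 2) for k, v in report.items()})  # every value rounds to 1.0
```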

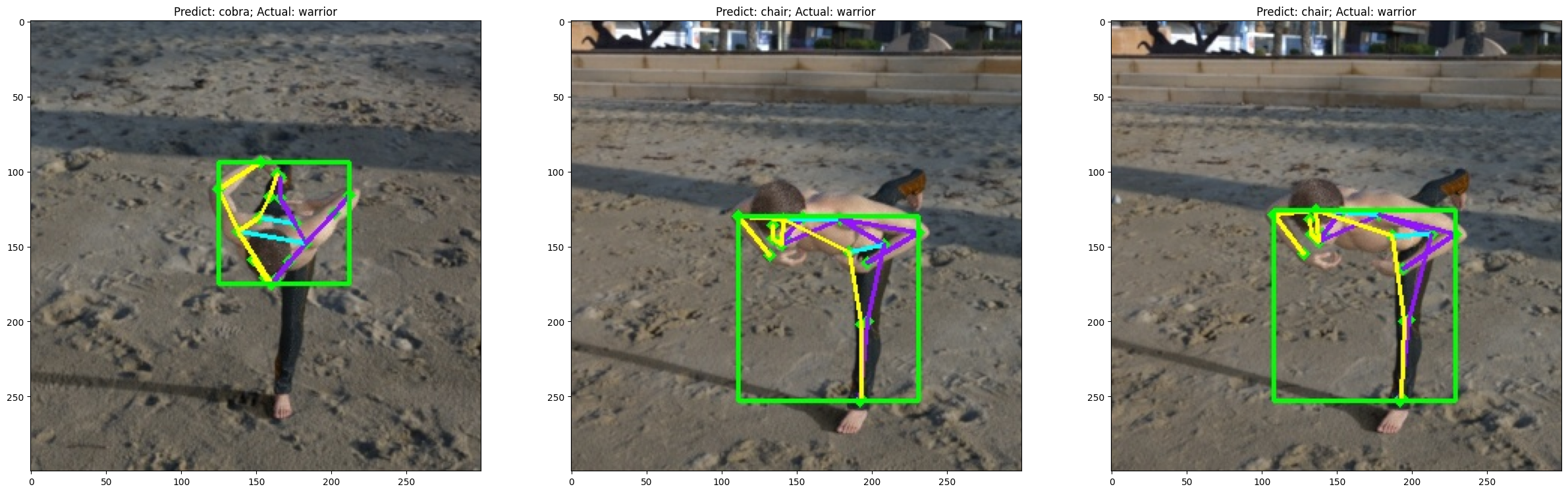

(Optional) Investigate incorrect predictions

You can look at the poses from the TEST dataset that were predicted incorrectly to see whether the model's accuracy can be improved.
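The first step of this investigation is collecting the test indices whose predicted label differs from the true label. Below is a minimal sketch in plain Python, assuming label lists shaped like `y_true_label` and `y_pred_label` from the earlier cell; `find_misclassified` is a hypothetical helper, its `limit` default simply mirrors `MAX_NO_OF_IMAGE_TO_PLOT = 30`, and the notebook's own cell may extract the list differently.

```python
from typing import List, Tuple


def find_misclassified(y_true: List[str], y_pred: List[str],
                       limit: int = 30) -> List[Tuple[int, str, str]]:
    """Return up to `limit` (index, true_label, predicted_label) tuples for wrong predictions."""
    wrong = [(i, t, p) for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
    return wrong[:limit]


# Hypothetical labels: only index 2 is wrong.
y_true = ["chair", "cobra", "warrior", "tree"]
y_pred = ["chair", "cobra", "cobra", "tree"]
print(find_misclassified(y_true, y_pred))  # [(2, 'warrior', 'cobra')]
```

The returned indices can then be used to look up the corresponding test images for plotting.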

# If step 1 was skipped, skip this step.
if is_skip_step_1:
  raise RuntimeError('You must have run step 1 to run this cell.')

IMAGE_PER_ROW = 3

MAX_NO_OF_IMAGE_TO_PLOT = 30

# Extract the list of incorrectly predicted poses