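This Colab shows how to use Universal Sentence Encoder-Lite for a sentence similarity task. The module is very similar to Universal Sentence Encoder; the only difference is that you must run SentencePiece processing on the input sentences yourself.

Universal Sentence Encoder makes getting sentence-level embeddings as easy as it has traditionally been to look up embeddings for individual words. The sentence embeddings can then be used directly to compute sentence-level semantic similarity, and to improve performance on downstream classification tasks while using less supervised training data.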

Getting started

Setup

# Install seaborn for pretty visualizations
pip3 install --quiet seaborn
# Install SentencePiece package
# SentencePiece package is needed for Universal Sentence Encoder Lite. We'll
# use it for all the text processing and sentence feature ID lookup.
pip3 install --quiet sentencepiece

from absl import logging

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

import tensorflow_hub as hub

import sentencepiece as spm

import matplotlib.pyplot as plt

import numpy as np

import os

import pandas as pd

import re

import seaborn as sns


Load the module from TF-Hub

module = hub.Module("https://tfhub.dev/google/universal-sentence-encoder-lite/2")

input_placeholder = tf.sparse_placeholder(tf.int64, shape=[None, None])

encodings = module(
    inputs=dict(
        values=input_placeholder.values,
        indices=input_placeholder.indices,
        dense_shape=input_placeholder.dense_shape))


Load the SentencePiece model from the TF-Hub module

The SentencePiece model is conveniently stored inside the module's assets. It must be loaded in order to initialize the processor.

with tf.Session() as sess:
    spm_path = sess.run(module(signature="spm_path"))

sp = spm.SentencePieceProcessor()
with tf.io.gfile.GFile(spm_path, mode="rb") as f:
    sp.LoadFromSerializedProto(f.read())
print("SentencePiece model loaded at {}.".format(spm_path))

SentencePiece model loaded at b'/tmpfs/tmp/tfhub_modules/539544f0a997d91c327c23285ea00c37588d92cc/assets/universal_encoder_8k_spm.model'.

def process_to_IDs_in_sparse_format(sp, sentences):
    # A utility method that processes sentences with the SentencePiece processor
    # 'sp' and returns the results in a tf.SparseTensor-like format:
    # (values, indices, dense_shape)
    ids = [sp.EncodeAsIds(x) for x in sentences]
    max_len = max(len(x) for x in ids)
    dense_shape = (len(ids), max_len)
    values = [item for sublist in ids for item in sublist]
    indices = [[row, col] for row in range(len(ids)) for col in range(len(ids[row]))]
    return (values, indices, dense_shape)
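To make the sparse format concrete, the following sketch applies the same flattening logic to hand-written token ID lists instead of real SentencePiece output. The ID values are made up for illustration; only the resulting layout of `values`, `indices`, and `dense_shape` matters.

```python
# Toy token IDs standing in for the output of sp.EncodeAsIds().
# The numbers are invented; real IDs depend on the SentencePiece model.
ids = [[7, 42], [5], [3, 9, 11]]

# Same steps as in process_to_IDs_in_sparse_format above.
max_len = max(len(x) for x in ids)
dense_shape = (len(ids), max_len)                       # (rows, longest row)
values = [item for sublist in ids for item in sublist]  # all IDs, row by row
indices = [[row, col]                                   # position of each ID
           for row in range(len(ids))
           for col in range(len(ids[row]))]

print(dense_shape)  # (3, 3)
print(values)       # [7, 42, 5, 3, 9, 11]
print(indices)      # [[0, 0], [0, 1], [1, 0], [2, 0], [2, 1], [2, 2]]
```

Each entry of `indices` pairs up with the entry of `values` at the same position, so shorter sentences simply contribute fewer entries and no padding IDs are needed.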

Test the module with a few examples

# Compute a representation for each message, showing various lengths supported.

word = "Elephant"

sentence = "I am a sentence for which I would like to get its embedding."

paragraph = (

"Universal Sentence Encoder embeddings also support short paragraphs. "

"There is no hard limit on how long the paragraph is. Roughly, the longer "

"the more 'diluted' the embedding will be.")

messages = [word, sentence, paragraph]

values, indices, dense_shape = process_to_IDs_in_sparse_format(sp, messages)

# Reduce logging output.

logging.set_verbosity(logging.ERROR)

with tf.Session() as session:
    session.run([tf.global_variables_initializer(), tf.tables_initializer()])
    message_embeddings = session.run(
        encodings,
        feed_dict={input_placeholder.values: values,
                   input_placeholder.indices: indices,
                   input_placeholder.dense_shape: dense_shape})

    for i, message_embedding in enumerate(np.array(message_embeddings).tolist()):
        print("Message: {}".format(messages[i]))
        print("Embedding size: {}".format(len(message_embedding)))
        message_embedding_snippet = ", ".join(
            (str(x) for x in message_embedding[:3]))
        print("Embedding: [{}, ...]\n".format(message_embedding_snippet))

Message: Elephant
Embedding size: 512
Embedding: [0.053387485444545746, 0.05319438502192497, -0.052356019616127014, ...]

Message: I am a sentence for which I would like to get its embedding.
Embedding size: 512
Embedding: [0.03533294424414635, -0.047149717807769775, 0.012305551208555698, ...]

Message: Universal Sentence Encoder embeddings also support short paragraphs. There is no hard limit on how long the paragraph is. Roughly, the longer the more 'diluted' the embedding will be.
Embedding size: 512
Embedding: [-0.004081601742655039, -0.08954868465662003, 0.037371981889009476, ...]

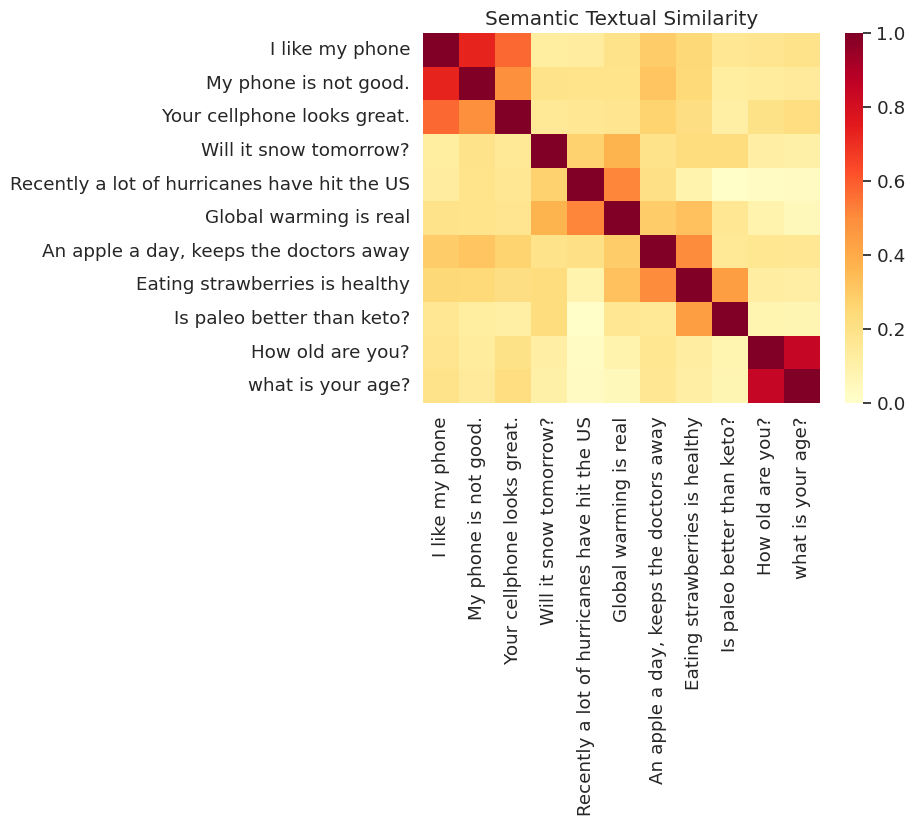

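Semantic Textual Similarity (STS) task example

The embeddings produced by the Universal Sentence Encoder are approximately normalized. The semantic similarity of two sentences can therefore be computed simply as the inner product of their encodings.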
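As a minimal sketch of why normalization matters here: for vectors of unit length, the inner product and the cosine similarity are the same number. The example below uses plain Python and made-up 3-dimensional vectors (the embeddings from this module have 512 dimensions); the helper names `inner` and `cosine` are illustrative and not part of any library.

```python
import math

def inner(u, v):
    """Inner (dot) product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Cosine similarity: the inner product after exact L2 normalization."""
    return inner(u, v) / (math.sqrt(inner(u, u)) * math.sqrt(inner(v, v)))

# Invented unit-length vectors standing in for sentence embeddings.
a = [0.6, 0.8, 0.0]
b = [0.8, 0.6, 0.0]
c = [0.0, 0.0, 1.0]  # orthogonal to both a and b

print(inner(a, b), cosine(a, b))  # nearly identical, about 0.96
print(inner(a, c))                # 0.0: no overlap between a and c
```

Because the real embeddings are only approximately normalized, the inner product is a close approximation of cosine similarity rather than an exact match.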

def plot_similarity(labels, features, rotation):
    corr = np.inner(features, features)
    sns.set(font_scale=1.2)
    g = sns.heatmap(
        corr,
        xticklabels=labels,
        yticklabels=labels,
        vmin=0,
        vmax=1,
        cmap="YlOrRd")
    g.set_xticklabels(labels, rotation=rotation)
    g.set_title("Semantic Textual Similarity")


def run_and_plot(session, input_placeholder, messages):
    values, indices, dense_shape = process_to_IDs_in_sparse_format(sp, messages)

    message_embeddings = session.run(
        encodings,
        feed_dict={input_placeholder.values: values,
                   input_placeholder.indices: indices,
                   input_placeholder.dense_shape: dense_shape})

    plot_similarity(messages, message_embeddings, 90)

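Similarity visualized

Here the similarity is shown as a heat map. The final graph is an 11x11 matrix (one row and one column per sentence in the list below), where each entry [i, j] is colored according to the inner product of the encodings of sentences i and j.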

messages = [

# Smartphones

"I like my phone",

"My phone is not good.",

"Your cellphone looks great.",

# Weather

"Will it snow tomorrow?",

"Recently a lot of hurricanes have hit the US",

"Global warming is real",

# Food and health

"An apple a day, keeps the doctors away",

"Eating strawberries is healthy",

"Is paleo better than keto?",

# Asking about age

"How old are you?",

"what is your age?",

]

with tf.Session() as session:
    session.run(tf.global_variables_initializer())
    session.run(tf.tables_initializer())
    run_and_plot(session, input_placeholder, messages)

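Evaluation: Semantic Textual Similarity (STS) benchmark

The STS benchmark provides an intrinsic evaluation of how well similarity scores computed from sentence embeddings agree with human judgments. The benchmark requires a system to return similarity scores for a diverse selection of sentence pairs. Pearson correlation is then used to measure the quality of the machine similarity scores against the human judgments.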
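For readers unfamiliar with the metric, here is a small, dependency-free sketch of the Pearson correlation coefficient. The `pearson` helper and both score lists are made up for illustration; the notebook itself relies on `scipy.stats.pearsonr` for the real evaluation.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Invented scores: hypothetical human ratings and hypothetical model scores.
human = [0.0, 1.5, 3.0, 4.0, 5.0]
model = [0.10, 0.30, 0.55, 0.70, 0.95]

print(pearson(human, model))                       # close to 1: strong agreement
print(pearson(human, [2 * h + 1 for h in human]))  # a linear rescaling scores ~1.0
```

Pearson correlation is invariant to shifting and positive rescaling, so the machine scores do not need to be on the same scale as the human ratings; only their linear relationship matters.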

Download the data

import pandas

import scipy.stats  # pearsonr lives in the scipy.stats submodule

import math

def load_sts_dataset(filename):
    # Loads a subset of the STS dataset into a DataFrame. In particular both
    # sentences and their human rated similarity score.
    sent_pairs = []
    with tf.gfile.GFile(filename, "r") as f:
        for line in f:
            ts = line.strip().split("\t")
            # (sent_1, sent_2, similarity_score)
            sent_pairs.append((ts[5], ts[6], float(ts[4])))
    return pandas.DataFrame(sent_pairs, columns=["sent_1", "sent_2", "sim"])


def download_and_load_sts_data():
    sts_dataset = tf.keras.utils.get_file(
        fname="Stsbenchmark.tar.gz",
        origin="http://ixa2.si.ehu.es/stswiki/images/4/48/Stsbenchmark.tar.gz",
        extract=True)
    sts_dev = load_sts_dataset(
        os.path.join(os.path.dirname(sts_dataset), "stsbenchmark", "sts-dev.csv"))
    sts_test = load_sts_dataset(
        os.path.join(
            os.path.dirname(sts_dataset), "stsbenchmark", "sts-test.csv"))
    return sts_dev, sts_test

sts_dev, sts_test = download_and_load_sts_data()


Build the evaluation graph

sts_input1 = tf.sparse_placeholder(tf.int64, shape=(None, None))

sts_input2 = tf.sparse_placeholder(tf.int64, shape=(None, None))

# For evaluation we use exactly normalized rather than

# approximately normalized.

sts_encode1 = tf.nn.l2_normalize(
    module(
        inputs=dict(values=sts_input1.values,
                    indices=sts_input1.indices,
                    dense_shape=sts_input1.dense_shape)),
    axis=1)

sts_encode2 = tf.nn.l2_normalize(
    module(
        inputs=dict(values=sts_input2.values,
                    indices=sts_input2.indices,
                    dense_shape=sts_input2.dense_shape)),
    axis=1)

sim_scores = -tf.acos(tf.reduce_sum(tf.multiply(sts_encode1, sts_encode2), axis=1))
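The `sim_scores` expression above takes the cosine similarity of each exactly normalized pair and maps it to a negative angle, so that a higher score still means a more similar pair. The plain-Python sketch below mirrors that computation for a single pair of made-up vectors. The helper names are illustrative, and the clamping step is an added safeguard against rounding error that is not part of the graph above.

```python
import math

def l2_normalize(v):
    """Scale v to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def angular_score(u, v):
    """Negative angle in radians between u and v; higher means more similar."""
    u, v = l2_normalize(u), l2_normalize(v)
    cos_sim = sum(a * b for a, b in zip(u, v))
    # Assumption: clamp to acos's domain [-1, 1] in case of rounding error.
    cos_sim = max(-1.0, min(1.0, cos_sim))
    return -math.acos(cos_sim)

same = angular_score([2.0, 0.0], [5.0, 0.0])       # same direction
orthogonal = angular_score([1.0, 0.0], [0.0, 1.0])  # unrelated directions
opposite = angular_score([1.0, 0.0], [-1.0, 0.0])   # opposite directions

print(same, orthogonal, opposite)  # roughly 0, -pi/2, -pi
```

The scores range from 0 for identical directions down to about -3.14 for opposite ones, which preserves the ordering that the Pearson correlation step relies on.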


Evaluate the sentence embeddings

# Choose the dataset for the benchmark (sts_dev or sts_test).

dataset = sts_dev

values1, indices1, dense_shape1 = process_to_IDs_in_sparse_format(sp, dataset['sent_1'].tolist())

values2, indices2, dense_shape2 = process_to_IDs_in_sparse_format(sp, dataset['sent_2'].tolist())

similarity_scores = dataset['sim'].tolist()

def run_sts_benchmark(session):
    """Returns the similarity scores"""
    scores = session.run(
        sim_scores,
        feed_dict={
            sts_input1.values: values1,
            sts_input1.indices: indices1,
            sts_input1.dense_shape: dense_shape1,
            sts_input2.values: values2,
            sts_input2.indices: indices2,
            sts_input2.dense_shape: dense_shape2,
        })
    return scores


with tf.Session() as session:
    session.run(tf.global_variables_initializer())
    session.run(tf.tables_initializer())
    scores = run_sts_benchmark(session)

pearson_correlation = scipy.stats.pearsonr(scores, similarity_scores)
print('Pearson correlation coefficient = {0}\np-value = {1}'.format(
    pearson_correlation[0], pearson_correlation[1]))

Pearson correlation coefficient = 0.7856484834952554
p-value = 1.0658075485e-314